@@ -47,4 +48,4 @@ Ultralytics YOLO repositories like YOLOv3, YOLOv5, or YOLOv8 are available under

- **AGPL-3.0 License**: See [LICENSE](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) file for details.

- **Enterprise License**: Provides greater flexibility for commercial product development without the open-source requirements of AGPL-3.0. Typical use cases are embedding Ultralytics software and AI models in commercial products and applications. Request an Enterprise License at [Ultralytics Licensing](https://ultralytics.com/license).

-Please note our licensing approach ensures that any enhancements made to our open-source projects are shared back to the community. We firmly believe in the principles of open source, and we are committed to ensuring that our work can be used and improved upon in a manner that benefits everyone.

+Please note our licensing approach ensures that any enhancements made to our open-source projects are shared back to the community. We firmly believe in the principles of open source, and we are committed to ensuring that our work can be used and improved upon in a manner that benefits everyone.

\ No newline at end of file

diff --git a/docs/models/index.md b/docs/models/index.md

index 051bfb514d7..cce8af13f1d 100644

--- a/docs/models/index.md

+++ b/docs/models/index.md

@@ -1,6 +1,7 @@

---

comments: true

description: Learn about the supported models and architectures, such as YOLOv3, YOLOv5, and YOLOv8, and how to contribute your own model to Ultralytics.

+keywords: Ultralytics YOLO, YOLOv3, YOLOv4, YOLOv5, YOLOv6, YOLOv7, YOLOv8, SAM, YOLO-NAS, RT-DETR, object detection, instance segmentation, detection transformers, real-time detection, computer vision, CLI, Python

---

# Models

@@ -9,13 +10,15 @@ Ultralytics supports many models and architectures with more to come in the futu

-In this documentation, we provide information on four major models:

+In this documentation, we provide information on the following models:

-1. [YOLOv3](./yolov3.md): The third iteration of the YOLO model family, known for its efficient real-time object detection capabilities.

-2. [YOLOv5](./yolov5.md): An improved version of the YOLO architecture, offering better performance and speed tradeoffs compared to previous versions.

-3. [YOLOv6](./yolov6.md): Released by [Meituan](https://about.meituan.com/) in 2022 and is in use in many of the company's autonomous delivery robots.

-4. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

-5. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

-6. [YOLO-NAS](./yolo-nas.md): YOLO Neural Architecture Search (NAS) Models.

-7. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

+1. [YOLOv3](./yolov3.md): The third iteration of the YOLO model family originally by Joseph Redmon, known for its efficient real-time object detection capabilities.

+2. [YOLOv4](./yolov4.md): A Darknet-native update to YOLOv3 released by Alexey Bochkovskiy in 2020.

+3. [YOLOv5](./yolov5.md): An improved version of the YOLO architecture by Ultralytics, offering better performance and speed tradeoffs compared to previous versions.

+4. [YOLOv6](./yolov6.md): Released by [Meituan](https://about.meituan.com/) in 2022 and used in many of the company's autonomous delivery robots.

+5. [YOLOv7](./yolov7.md): Updated YOLO models released in 2022 by the authors of YOLOv4.

+6. [YOLOv8](./yolov8.md): The latest version of the YOLO family, featuring enhanced capabilities such as instance segmentation, pose/keypoints estimation, and classification.

+7. [Segment Anything Model (SAM)](./sam.md): Meta's Segment Anything Model (SAM).

+8. [YOLO-NAS](./yolo-nas.md): YOLO Neural Architecture Search (NAS) Models.

+9. [Realtime Detection Transformers (RT-DETR)](./rtdetr.md): Baidu's PaddlePaddle Realtime Detection Transformer (RT-DETR) models.

You can use these models directly in the Command Line Interface (CLI) or in a Python environment. Below are examples of how to use the models with CLI and Python:

@@ -36,4 +39,4 @@ model.info() # display model information

model.train(data="coco128.yaml", epochs=100) # train the model

```

-For more details on each model, their supported tasks, modes, and performance, please visit their respective documentation pages linked above.

+For more details on each model, their supported tasks, modes, and performance, please visit their respective documentation pages linked above.

\ No newline at end of file

diff --git a/docs/models/rtdetr.md b/docs/models/rtdetr.md

index a38acbb3ef3..61d156abc59 100644

--- a/docs/models/rtdetr.md

+++ b/docs/models/rtdetr.md

@@ -1,6 +1,7 @@

---

comments: true

description: Dive into Baidu's RT-DETR, a revolutionary real-time object detection model built on the foundation of Vision Transformers (ViT). Learn how to use pre-trained PaddlePaddle RT-DETR models with the Ultralytics Python API for various tasks.

+keywords: RT-DETR, Transformer, ViT, Vision Transformers, Baidu RT-DETR, PaddlePaddle, Paddle Paddle RT-DETR, real-time object detection, Vision Transformers-based object detection, pre-trained PaddlePaddle RT-DETR models, Baidu's RT-DETR usage, Ultralytics Python API, object detector

---

# Baidu's RT-DETR: A Vision Transformer-Based Real-Time Object Detector

diff --git a/docs/models/sam.md b/docs/models/sam.md

index 12dd1159f8d..8dd1e35c24b 100644

--- a/docs/models/sam.md

+++ b/docs/models/sam.md

@@ -1,6 +1,7 @@

---

comments: true

description: Discover the Segment Anything Model (SAM), a revolutionary promptable image segmentation model, and delve into the details of its advanced architecture and the large-scale SA-1B dataset.

+keywords: Segment Anything, Segment Anything Model, SAM, Meta SAM, image segmentation, promptable segmentation, zero-shot performance, SA-1B dataset, advanced architecture, auto-annotation, Ultralytics, pre-trained models, SAM base, SAM large, instance segmentation, computer vision, AI, artificial intelligence, machine learning, data annotation, segmentation masks, detection model, YOLO detection model, bibtex, Meta AI

---

# Segment Anything Model (SAM)

@@ -95,4 +96,4 @@ If you find SAM useful in your research or development work, please consider cit

We would like to express our gratitude to Meta AI for creating and maintaining this valuable resource for the computer vision community.

-*keywords: Segment Anything, Segment Anything Model, SAM, Meta SAM, image segmentation, promptable segmentation, zero-shot performance, SA-1B dataset, advanced architecture, auto-annotation, Ultralytics, pre-trained models, SAM base, SAM large, instance segmentation, computer vision, AI, artificial intelligence, machine learning, data annotation, segmentation masks, detection model, YOLO detection model, bibtex, Meta AI.*

+*keywords: Segment Anything, Segment Anything Model, SAM, Meta SAM, image segmentation, promptable segmentation, zero-shot performance, SA-1B dataset, advanced architecture, auto-annotation, Ultralytics, pre-trained models, SAM base, SAM large, instance segmentation, computer vision, AI, artificial intelligence, machine learning, data annotation, segmentation masks, detection model, YOLO detection model, bibtex, Meta AI.*

\ No newline at end of file

diff --git a/docs/models/yolo-nas.md b/docs/models/yolo-nas.md

index 9da81756a14..4ce38e85a04 100644

--- a/docs/models/yolo-nas.md

+++ b/docs/models/yolo-nas.md

@@ -1,6 +1,7 @@

---

comments: true

description: Dive into YOLO-NAS, Deci's next-generation object detection model, offering breakthroughs in speed and accuracy. Learn how to utilize pre-trained models using the Ultralytics Python API for various tasks.

+keywords: YOLO-NAS, Deci AI, Ultralytics, object detection, deep learning, neural architecture search, Python API, pre-trained models, quantization

---

# YOLO-NAS

diff --git a/docs/models/yolov3.md b/docs/models/yolov3.md

index 0ca49ee7df4..da1415e0606 100644

--- a/docs/models/yolov3.md

+++ b/docs/models/yolov3.md

@@ -1,6 +1,7 @@

---

comments: true

description: YOLOv3, YOLOv3-Ultralytics and YOLOv3u by Ultralytics explained. Learn the evolution of these models and their specifications.

+keywords: YOLOv3, Ultralytics YOLOv3, YOLO v3, YOLOv3 models, object detection, models, machine learning, AI, image recognition, object recognition

---

# YOLOv3, YOLOv3-Ultralytics, and YOLOv3u

diff --git a/docs/models/yolov4.md b/docs/models/yolov4.md

new file mode 100644

index 00000000000..36a09ccba90

--- /dev/null

+++ b/docs/models/yolov4.md

@@ -0,0 +1,67 @@

+---

+comments: true

+description: Explore YOLOv4, a state-of-the-art, real-time object detector. Learn about its architecture, features, and performance.

+keywords: YOLOv4, object detection, real-time, CNN, GPU, Ultralytics, documentation, YOLOv4 architecture, YOLOv4 features, YOLOv4 performance

+---

+

+# YOLOv4: High-Speed and Precise Object Detection

+

+Welcome to the Ultralytics documentation page for YOLOv4, a state-of-the-art, real-time object detector launched in 2020 by Alexey Bochkovskiy at [https://github.com/AlexeyAB/darknet](https://github.com/AlexeyAB/darknet). YOLOv4 is designed to provide the optimal balance between speed and accuracy, making it an excellent choice for many applications.

+

+

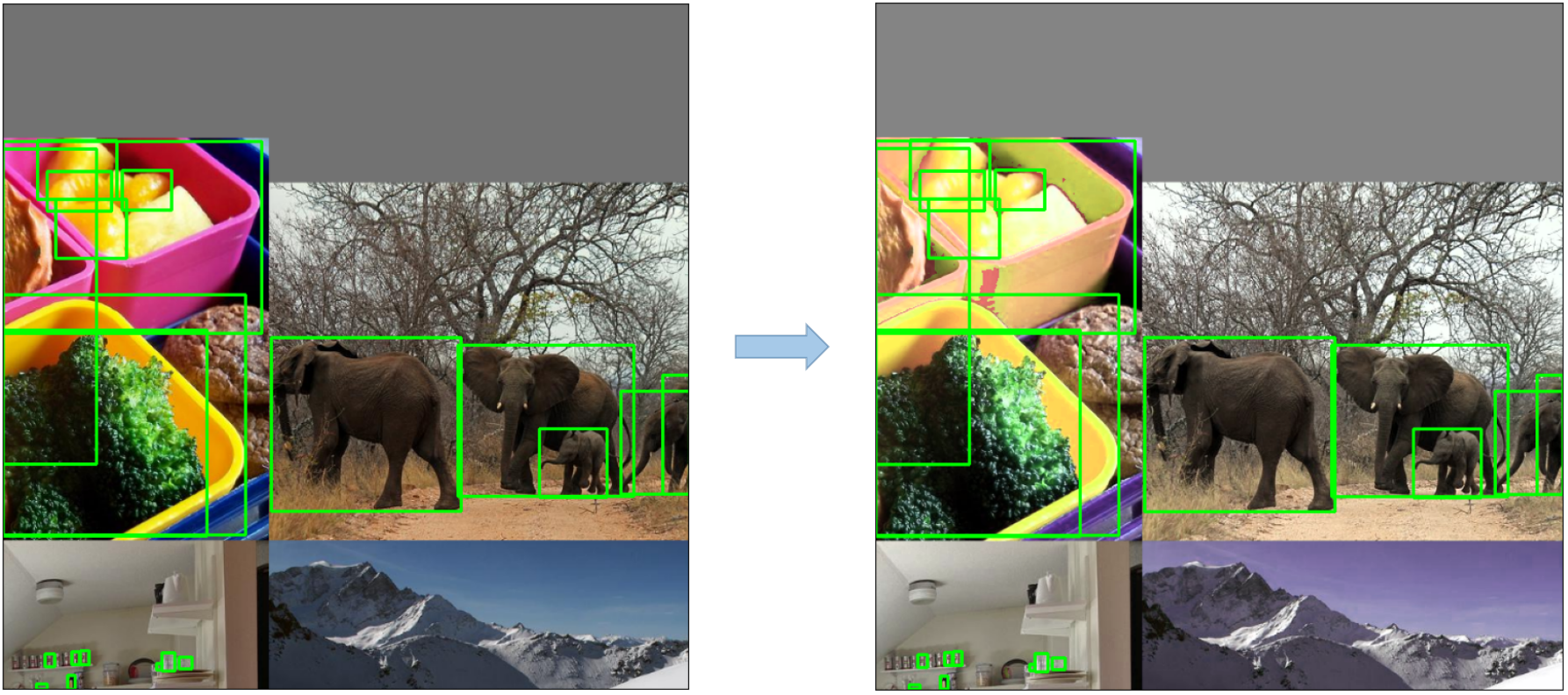

+**YOLOv4 architecture diagram**. The network design of YOLOv4, showing the backbone, neck, and head components and the interconnected layers used for real-time object detection.

+

+## Introduction

+

+YOLOv4 stands for You Only Look Once version 4. It is a real-time object detection model developed to address the limitations of previous YOLO versions like [YOLOv3](./yolov3.md) and other object detection models. Many convolutional neural network (CNN)-based object detectors are accurate enough mainly for recommendation systems; YOLOv4 aims to be fast and accurate enough for standalone process management and human input reduction as well. It is designed to run in real time on a single conventional graphics processing unit (GPU) and to be trainable on one such GPU, which keeps it affordable for widespread use.

+

+## Architecture

+

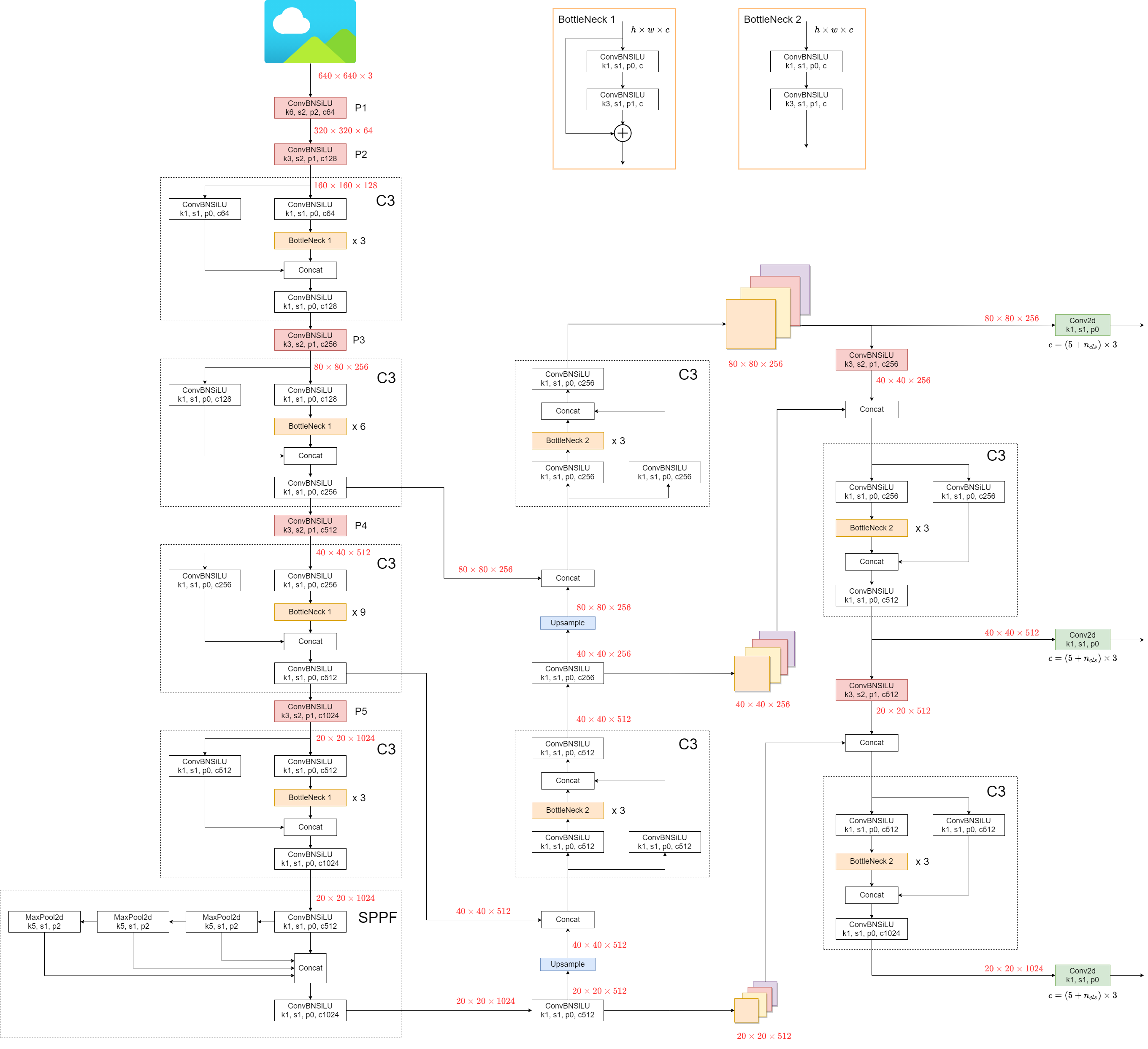

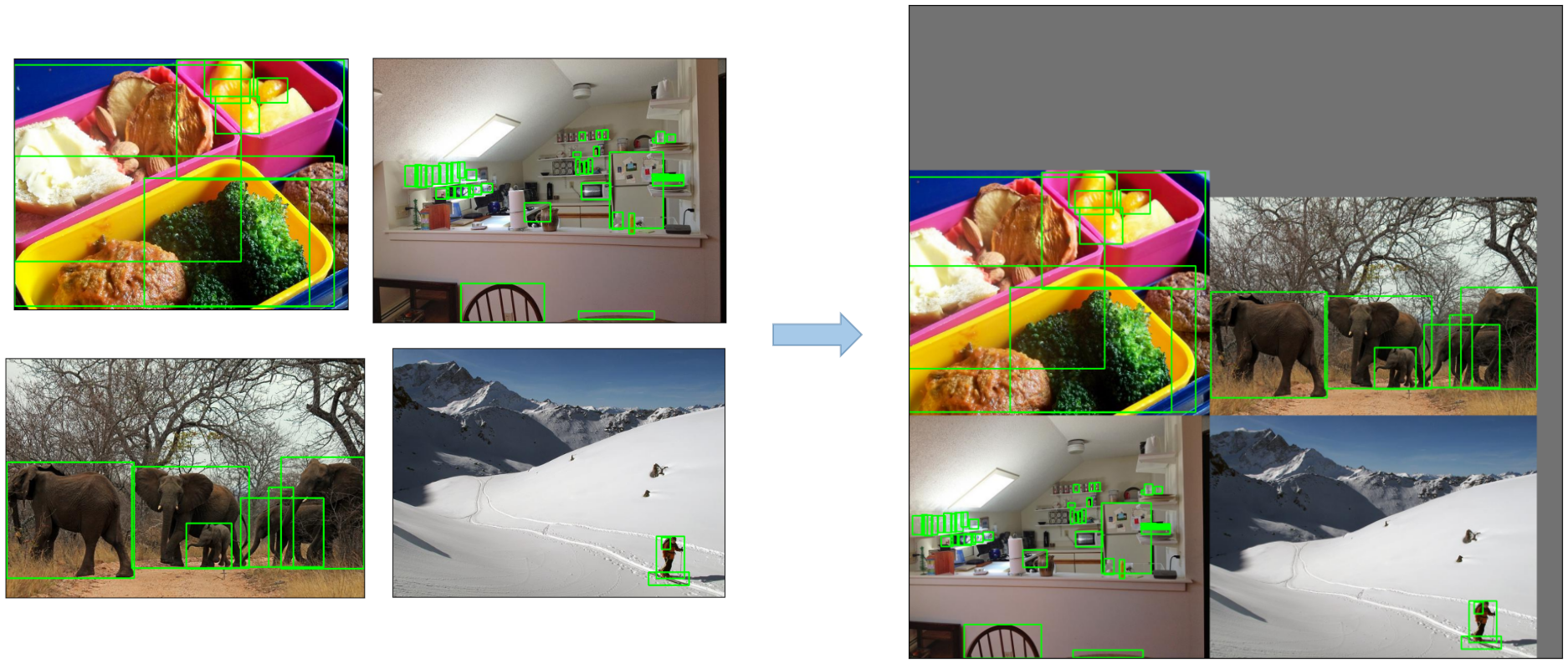

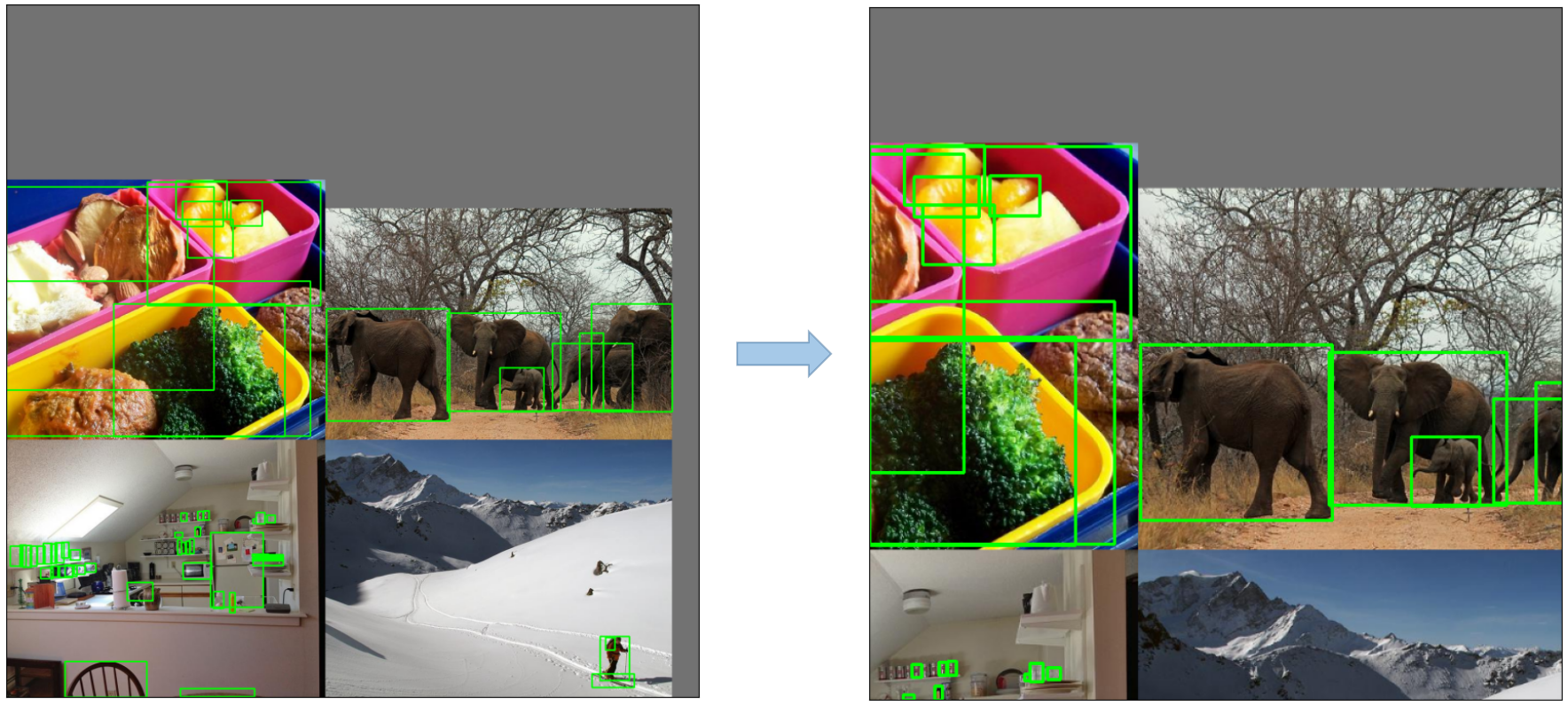

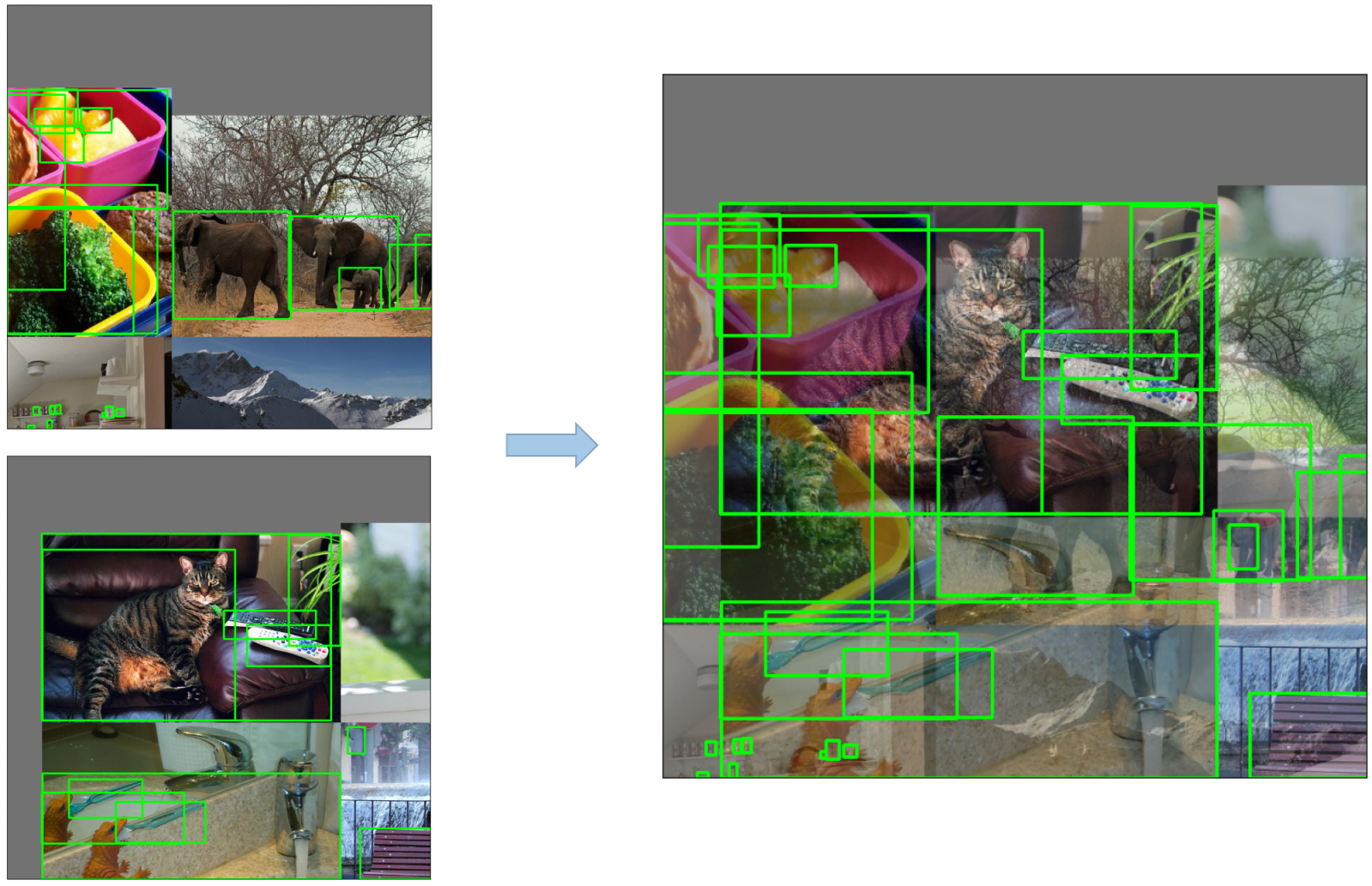

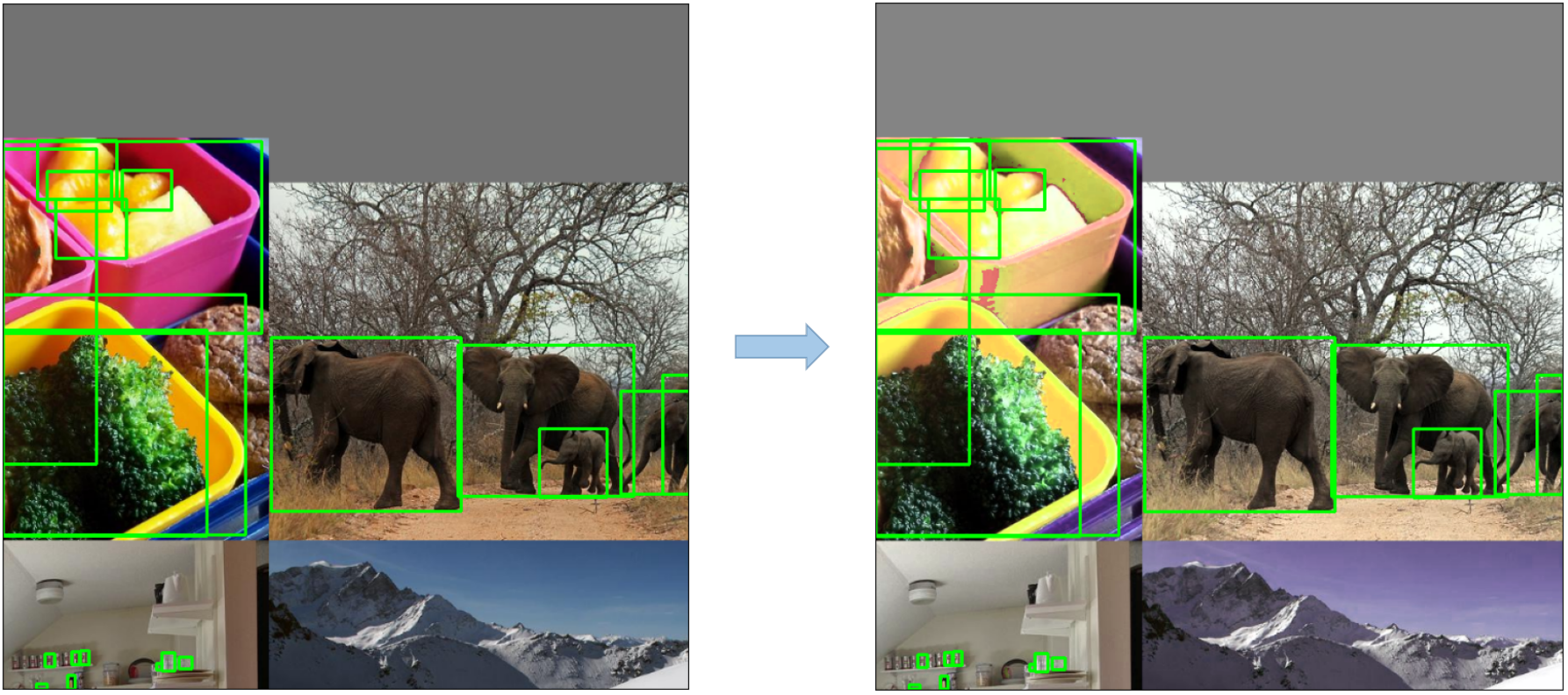

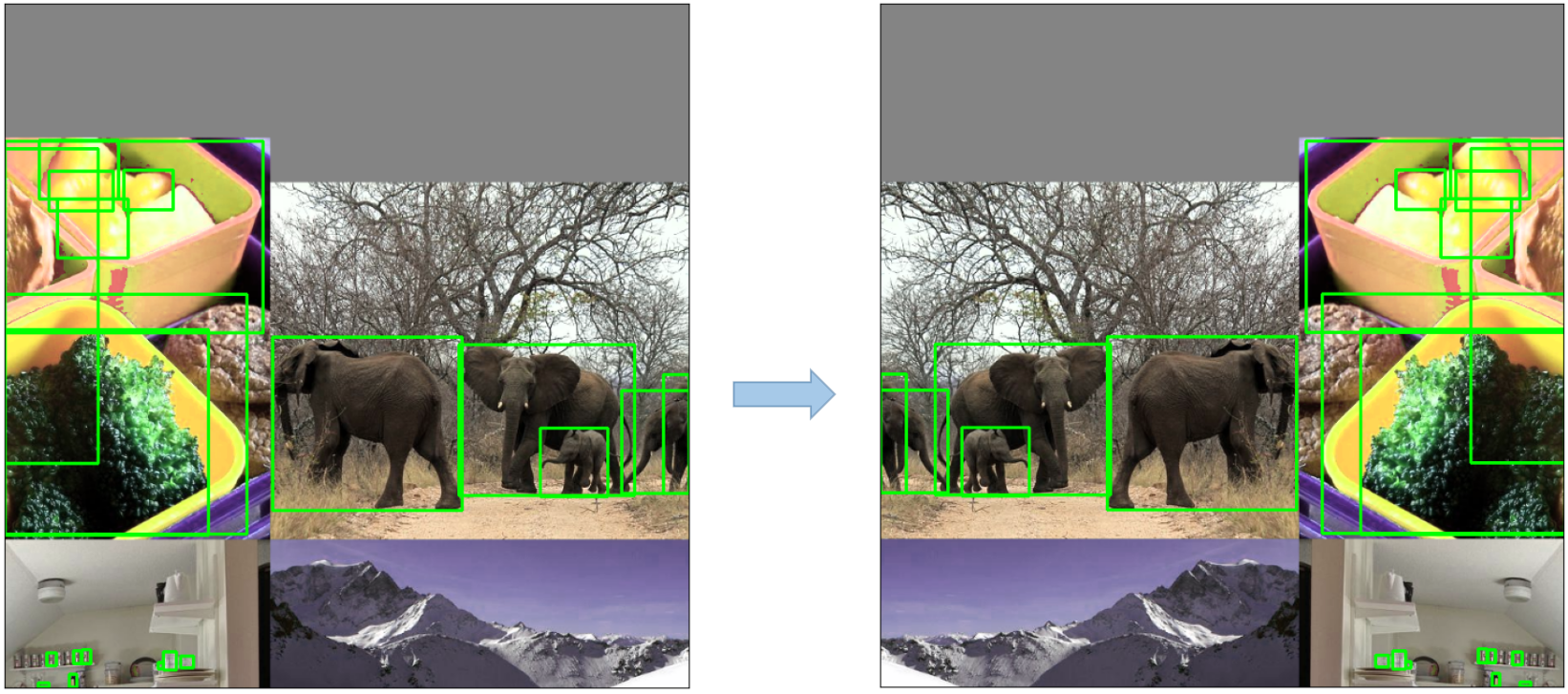

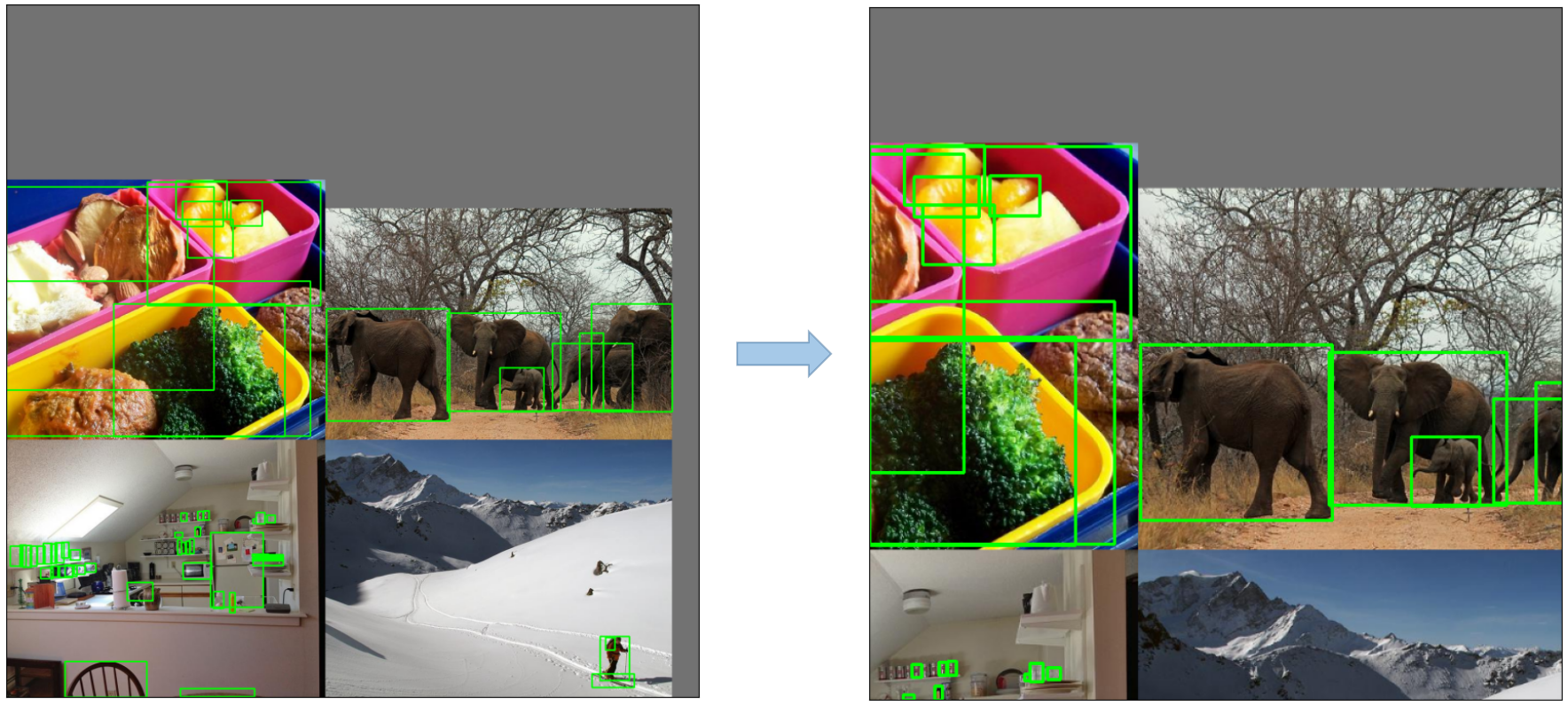

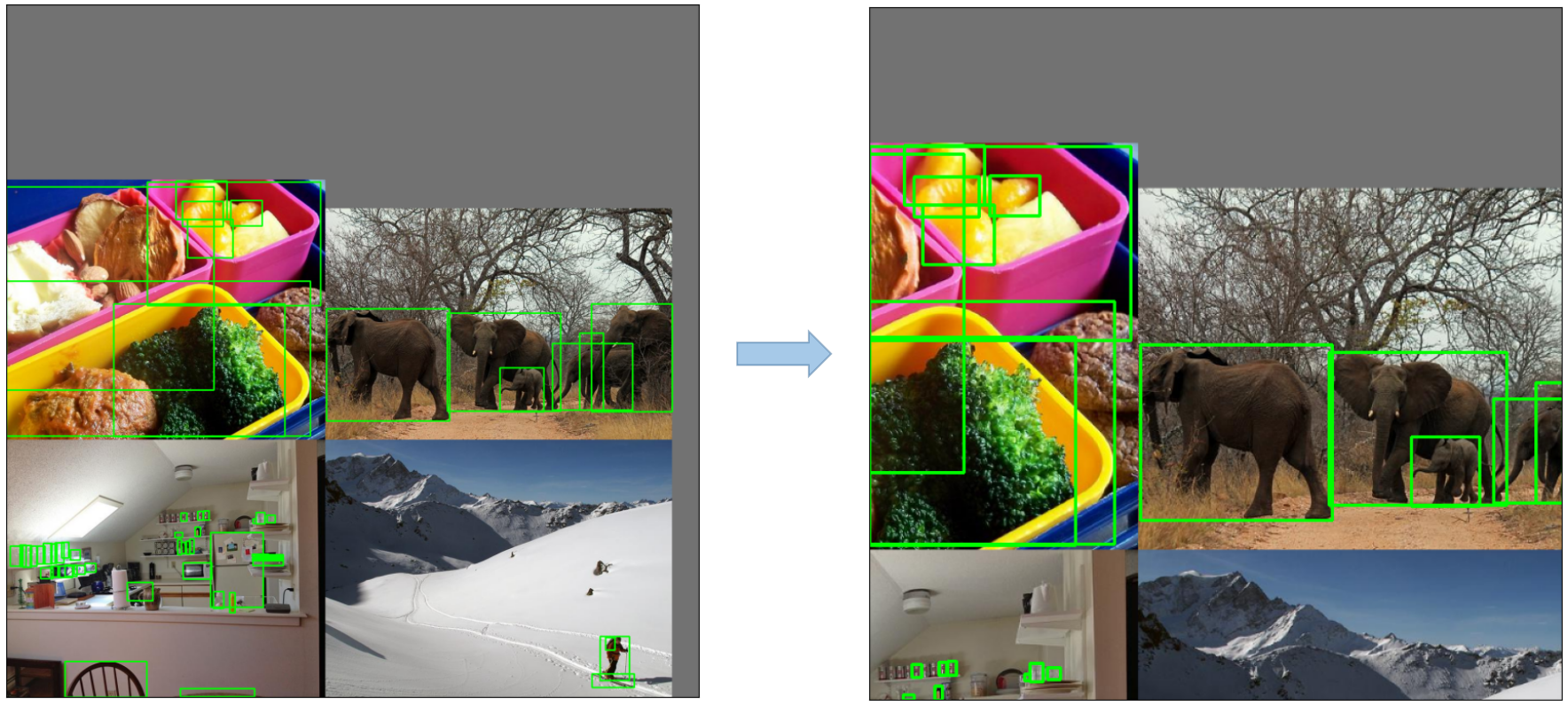

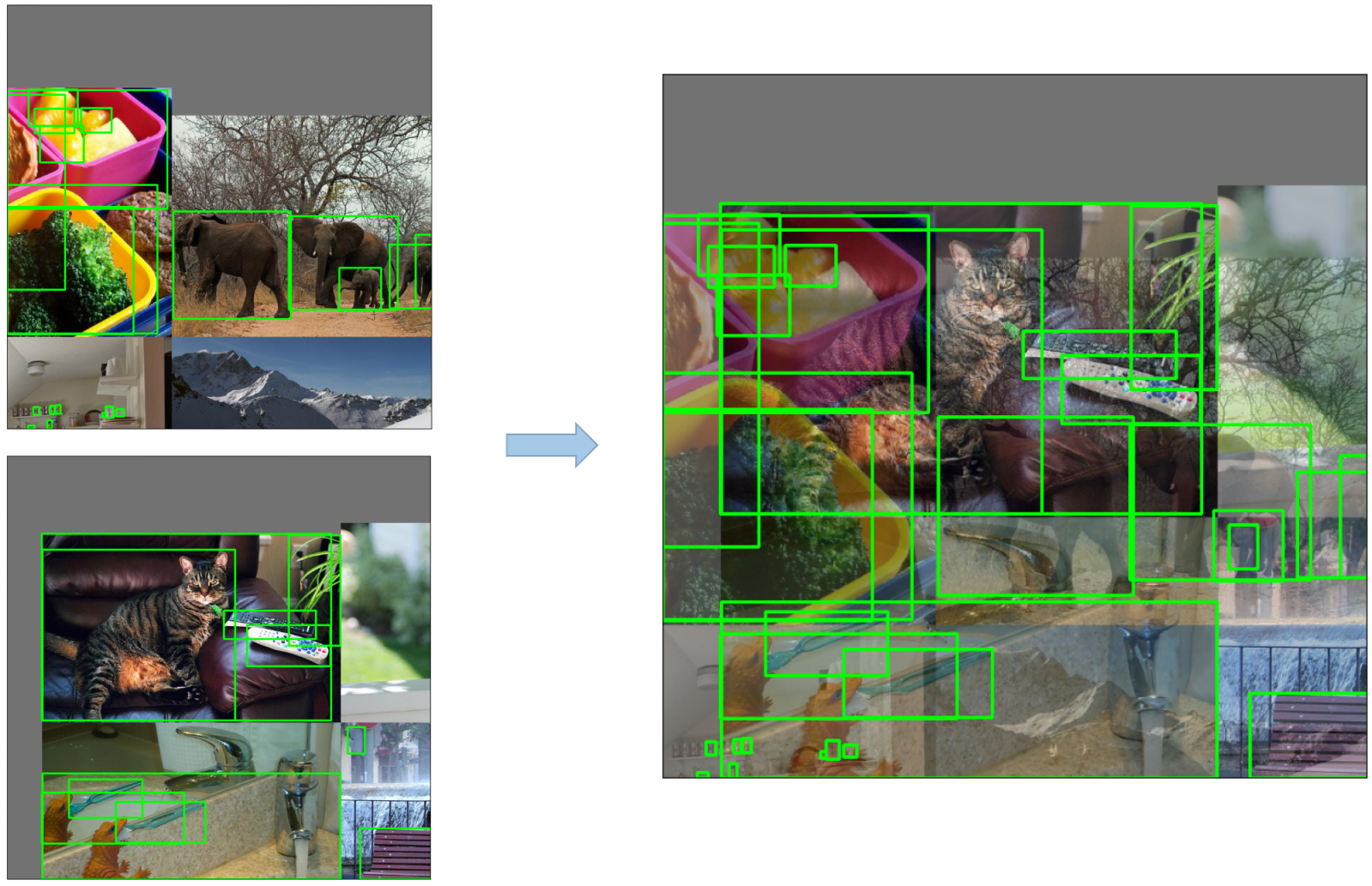

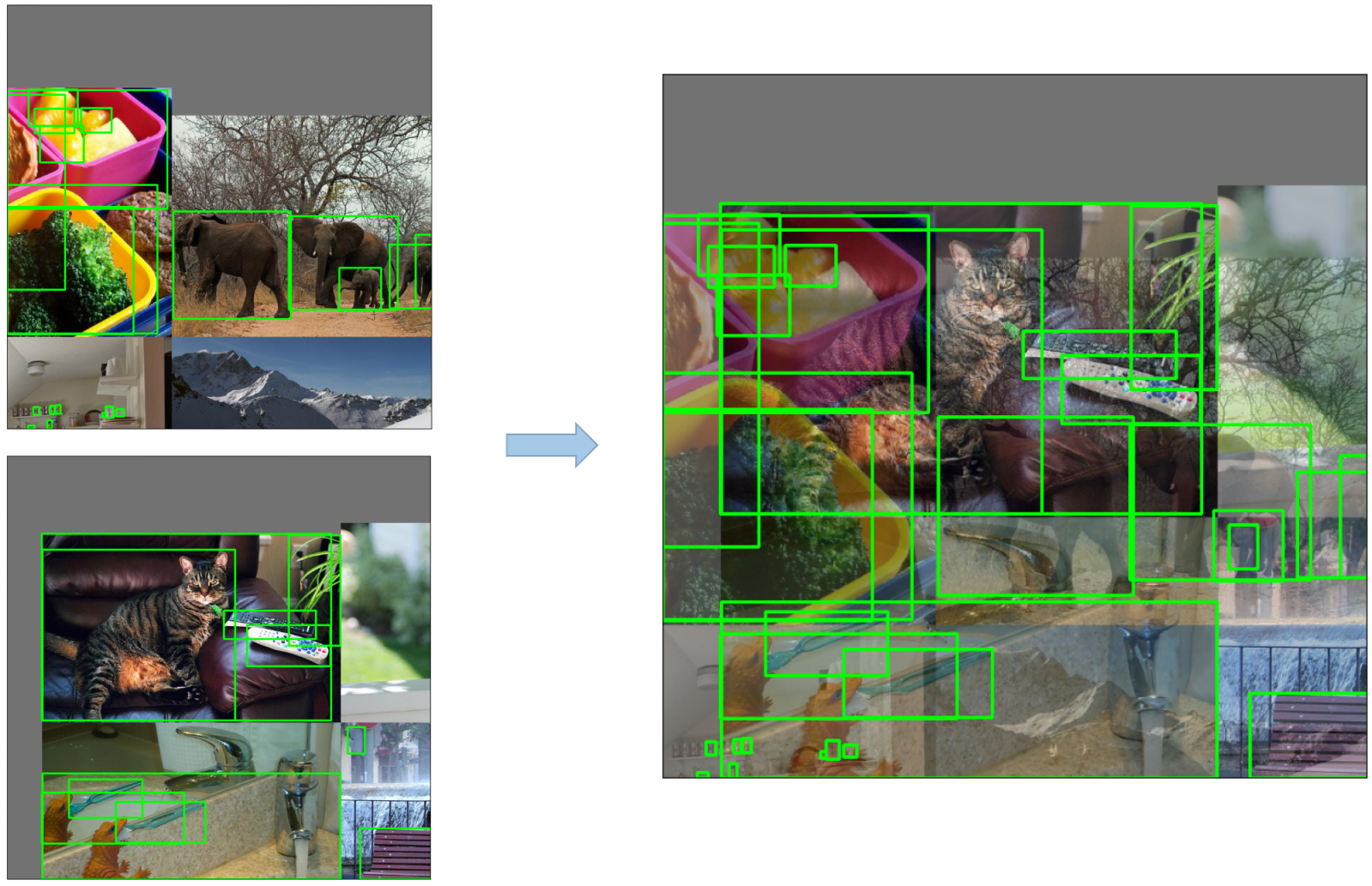

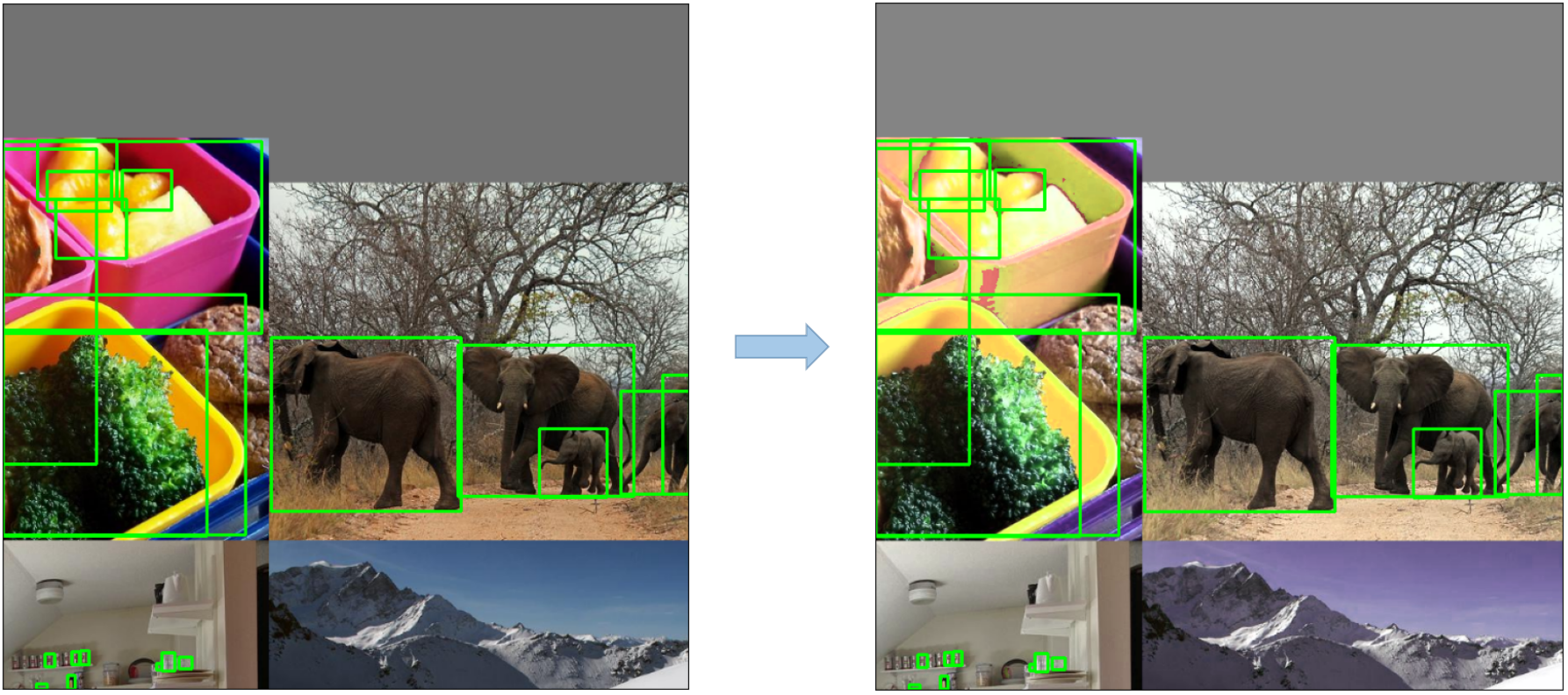

+YOLOv4 makes use of several innovative features that work together to optimize its performance. These include Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-Adversarial Training (SAT), Mish activation, Mosaic data augmentation, DropBlock regularization, and CIoU loss. Combined, these features achieve state-of-the-art results.
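Two of the features named above, Mish activation and CIoU loss, have short closed forms. The sketch below writes them in plain Python for single scalars and single boxes, following the published formulas (Mish: `x * tanh(softplus(x))`; CIoU loss: `1 - IoU + rho^2 / c^2 + alpha * v`). It is an illustration only, not the Darknet implementation; the `(x1, y1, x2, y2)` corner box format and the `eps` guard are assumptions made for this example.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed corner format


def softplus(x: float) -> float:
    """Numerically stable ln(1 + e^x)."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


def ciou_loss(pred: Box, target: Box, eps: float = 1e-9) -> float:
    """CIoU loss = 1 - IoU + rho^2 / c^2 + alpha * v for two corner-format boxes."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Overlap term: plain IoU
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Distance term: squared center distance over squared diagonal of the enclosing box
    rho2 = ((px1 + px2) - (tx1 + tx2)) ** 2 / 4 + ((py1 + py2) - (ty1 + ty2)) ** 2 / 4
    enc_w = max(px2, tx2) - min(px1, tx1)
    enc_h = max(py2, ty2) - min(py1, ty1)
    c2 = enc_w ** 2 + enc_h ** 2 + eps

    # Aspect-ratio term: v measures aspect-ratio mismatch, alpha weights it
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v
```

Unlike plain IoU loss, which is stuck at 1 for any two non-overlapping boxes, the distance term keeps decreasing as the predicted box moves toward the target, which is the motivation for using CIoU in box regression.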

+

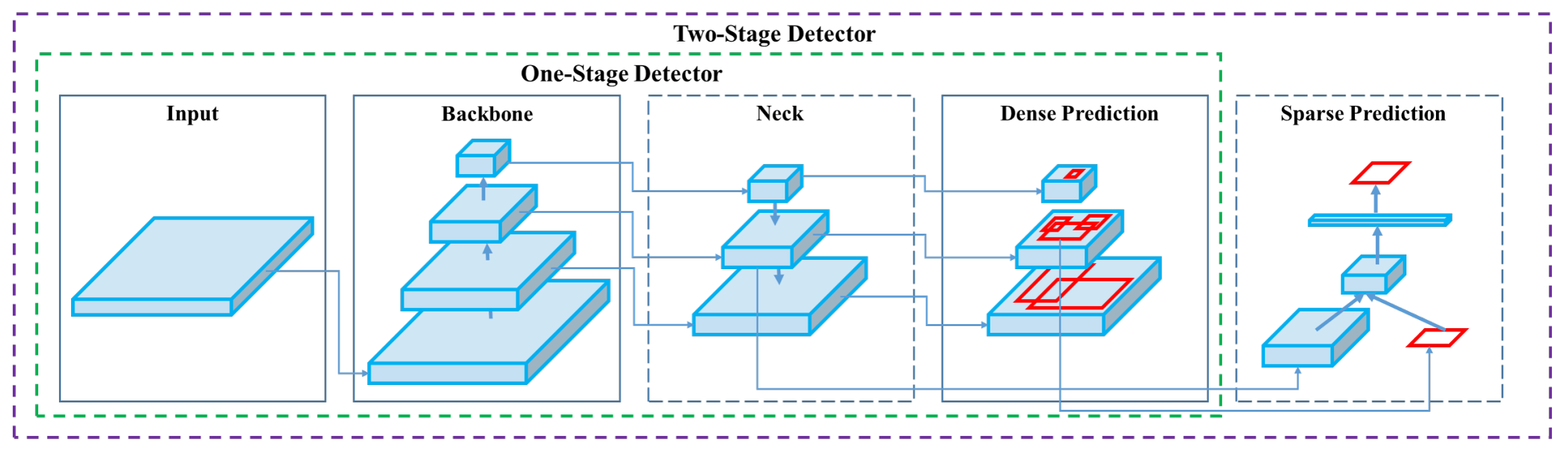

+A typical object detector is composed of several parts: the input, the backbone, the neck, and the head. The backbone, usually pre-trained on ImageNet, extracts features from the input image; common choices include VGG, ResNet, ResNeXt, or DenseNet, while YOLOv4 uses CSPDarknet53. The neck collects feature maps from different stages of the backbone and usually includes several bottom-up and top-down paths. The head uses these features to predict the classes and bounding boxes of objects, producing the final detections.

+

+## Bag of Freebies

+

+YOLOv4 also makes use of methods known as "bag of freebies," which are techniques that improve the accuracy of the model during training without increasing the cost of inference. Data augmentation is a common bag of freebies technique used in object detection, which increases the variability of the input images to improve the robustness of the model. Some examples of data augmentation include photometric distortions (adjusting the brightness, contrast, hue, saturation, and noise of an image) and geometric distortions (adding random scaling, cropping, flipping, and rotating). These techniques help the model to generalize better to different types of images.
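The two families of distortions described above can be sketched in a few lines. The example below is a minimal, hypothetical illustration using a grayscale image stored as nested lists: a photometric distortion (contrast and brightness with clipping to 0-255) and a geometric distortion (horizontal flip) that also updates the bounding boxes, since geometric changes must be applied to the labels as well. The function names and the `(x_min, y_min, x_max, y_max)` pixel box format are assumptions, not Darknet or Ultralytics APIs.

```python
import random
from typing import List, Tuple

Image = List[List[int]]                  # grayscale image, rows of 0-255 pixel values
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def adjust_brightness_contrast(img: Image, contrast: float, brightness: float) -> Image:
    """Photometric distortion: p' = contrast * p + brightness, clipped to [0, 255]."""
    return [[max(0, min(255, round(contrast * p + brightness))) for p in row] for row in img]


def hflip(img: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Geometric distortion: mirror the image left-right and update the boxes to match."""
    width = len(img[0])
    flipped = [list(reversed(row)) for row in img]
    # A box spanning [x1, x2] maps to [width - x2, width - x1]; y is unchanged
    new_boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes


def augment(img: Image, boxes: List[Box], rng: random.Random) -> Tuple[Image, List[Box]]:
    """Randomly apply one photometric and one geometric distortion (training time only)."""
    img = adjust_brightness_contrast(img, contrast=rng.uniform(0.8, 1.2), brightness=rng.uniform(-20, 20))
    if rng.random() < 0.5:
        img, boxes = hflip(img, boxes)
    return img, boxes


img = [[10, 20, 30, 40], [50, 60, 70, 80]]
flipped, flipped_boxes = hflip(img, [(0.0, 0.0, 1.0, 2.0)])
print(flipped)        # [[40, 30, 20, 10], [80, 70, 60, 50]]
print(flipped_boxes)  # [(3.0, 0.0, 4.0, 2.0)]
```

Because `augment` is only called on training batches, inference cost is unchanged, which is what makes data augmentation a "freebie".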

+

+## Features and Performance

+

+YOLOv4 is designed for optimal speed and accuracy in object detection. Its architecture uses CSPDarknet53 as the backbone, SPP and PANet as the neck, and the YOLOv3 head for detection. The paper reports 43.5% AP (65.7% AP50) on the MS COCO dataset at a real-time speed of about 65 FPS on a Tesla V100 GPU, state-of-the-art results among real-time detectors at the time of publication.
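To make the head's output concrete, the sketch below computes the shapes a YOLOv3-style head produces. It assumes the YOLOv3 convention of three detection scales at strides 8, 16, and 32 with three anchors per scale, where each anchor predicts 4 box offsets, 1 objectness score, and one score per class; the function name `yolo_head_shapes` is hypothetical.

```python
from typing import List, Sequence, Tuple


def yolo_head_shapes(
    img_size: int,
    num_classes: int,
    strides: Sequence[int] = (8, 16, 32),
    anchors_per_scale: int = 3,
) -> Tuple[List[Tuple[int, int]], int]:
    """Return (grid_size, channels) for each detection scale and the total number of candidate boxes."""
    # Each anchor predicts 4 box offsets + 1 objectness score + one score per class
    channels = anchors_per_scale * (4 + 1 + num_classes)
    shapes = []
    total = 0
    for s in strides:
        if img_size % s:
            raise ValueError(f"img_size {img_size} must be divisible by stride {s}")
        grid = img_size // s
        shapes.append((grid, channels))
        total += grid * grid * anchors_per_scale
    return shapes, total


# Example: a 608x608 input with the 80 COCO classes
shapes, total = yolo_head_shapes(608, 80)
print(shapes)  # [(76, 255), (38, 255), (19, 255)]
print(total)   # 22743
```

The 22,743 candidates at 608×608 are filtered by a confidence threshold and non-maximum suppression before the final detections are returned; the fine 76×76 grid handles small objects and the coarse 19×19 grid handles large ones.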

+

+## Usage Examples

+

+At the time of writing, Ultralytics does not support YOLOv4 models. Users interested in YOLOv4 should refer directly to the YOLOv4 GitHub repository for installation and usage instructions.

+

+Here is a brief overview of the typical steps you might take to use YOLOv4:

+

+1. Visit the YOLOv4 GitHub repository: [https://github.com/AlexeyAB/darknet](https://github.com/AlexeyAB/darknet).

+

+2. Follow the instructions provided in the README file for installation. This typically involves cloning the repository, installing necessary dependencies, and setting up any necessary environment variables.

+

+3. Once installation is complete, you can train and use the model as per the usage instructions provided in the repository. This usually involves preparing your dataset, configuring the model parameters, training the model, and then using the trained model to perform object detection.

+

+Note that the exact steps may vary depending on your use case and the current state of the YOLOv4 repository, so it is strongly recommended to follow the instructions provided in the YOLOv4 GitHub repository.

+

+We regret any inconvenience this may cause and will strive to update this document with usage examples for Ultralytics once support for YOLOv4 is implemented.

+

+## Conclusion

+

+YOLOv4 is a powerful and efficient object detection model that strikes a balance between speed and accuracy. Its use of unique features and bag of freebies techniques during training allows it to perform excellently in real-time object detection tasks. YOLOv4 can be trained and used by anyone with a conventional GPU, making it accessible and practical for a wide range of applications.

+

+## Citations and Acknowledgements

+

+We would like to acknowledge the YOLOv4 authors for their significant contributions in the field of real-time object detection:

+

+```bibtex

+@misc{bochkovskiy2020yolov4,

+ title={YOLOv4: Optimal Speed and Accuracy of Object Detection},

+ author={Alexey Bochkovskiy and Chien-Yao Wang and Hong-Yuan Mark Liao},

+ year={2020},

+ eprint={2004.10934},

+ archivePrefix={arXiv},

+ primaryClass={cs.CV}

+}

+```

+

+The original YOLOv4 paper can be found on [arXiv](https://arxiv.org/pdf/2004.10934.pdf). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/AlexeyAB/darknet). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

\ No newline at end of file

diff --git a/docs/models/yolov5.md b/docs/models/yolov5.md

index e163f4272e4..959c06af140 100644

--- a/docs/models/yolov5.md

+++ b/docs/models/yolov5.md

@@ -1,6 +1,7 @@

---

comments: true

description: YOLOv5 by Ultralytics explained. Discover the evolution of this model and its key specifications. Experience faster and more accurate object detection.

+keywords: YOLOv5, Ultralytics YOLOv5, YOLO v5, YOLOv5 models, YOLO, object detection, model, neural network, accuracy, speed, pre-trained weights, inference, validation, training

---

# YOLOv5

diff --git a/docs/models/yolov6.md b/docs/models/yolov6.md

index b8239c86077..a8a2449f25c 100644

--- a/docs/models/yolov6.md

+++ b/docs/models/yolov6.md

@@ -1,6 +1,7 @@

---

comments: true

description: Discover Meituan YOLOv6, a robust real-time object detector. Learn how to utilize pre-trained models with Ultralytics Python API for a variety of tasks.

+keywords: Meituan, YOLOv6, object detection, Bi-directional Concatenation (BiC), anchor-aided training (AAT), pre-trained models, high-resolution input, real-time, ultra-fast computations

---

# Meituan YOLOv6

@@ -78,4 +79,4 @@ We would like to acknowledge the authors for their significant contributions in

}

```

-The original YOLOv6 paper can be found on [arXiv](https://arxiv.org/abs/2301.05586). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/meituan/YOLOv6). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

+The original YOLOv6 paper can be found on [arXiv](https://arxiv.org/abs/2301.05586). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/meituan/YOLOv6). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

\ No newline at end of file

diff --git a/docs/models/yolov7.md b/docs/models/yolov7.md

new file mode 100644

index 00000000000..d8b1ea62745

--- /dev/null

+++ b/docs/models/yolov7.md

@@ -0,0 +1,61 @@

+---

+comments: true

+description: Discover YOLOv7, a cutting-edge real-time object detector that surpasses competitors in speed and accuracy. Explore its unique trainable bag-of-freebies.

+keywords: object detection, real-time object detector, YOLOv7, MS COCO, computer vision, neural networks, AI, deep learning, deep neural networks, real-time, GPU, GitHub, arXiv

+---

+

+# YOLOv7: Trainable Bag-of-Freebies

+

+YOLOv7 is a state-of-the-art real-time object detector that surpasses all known object detectors in both speed and accuracy in the range from 5 FPS to 160 FPS. It has the highest accuracy (56.8% AP) among all known real-time object detectors with 30 FPS or higher on GPU V100. Moreover, YOLOv7 outperforms other object detectors such as YOLOR, YOLOX, Scaled-YOLOv4, YOLOv5, and many others in speed and accuracy. The model is trained on the MS COCO dataset from scratch without using any other datasets or pre-trained weights. Source code for YOLOv7 is available on GitHub.

+

+

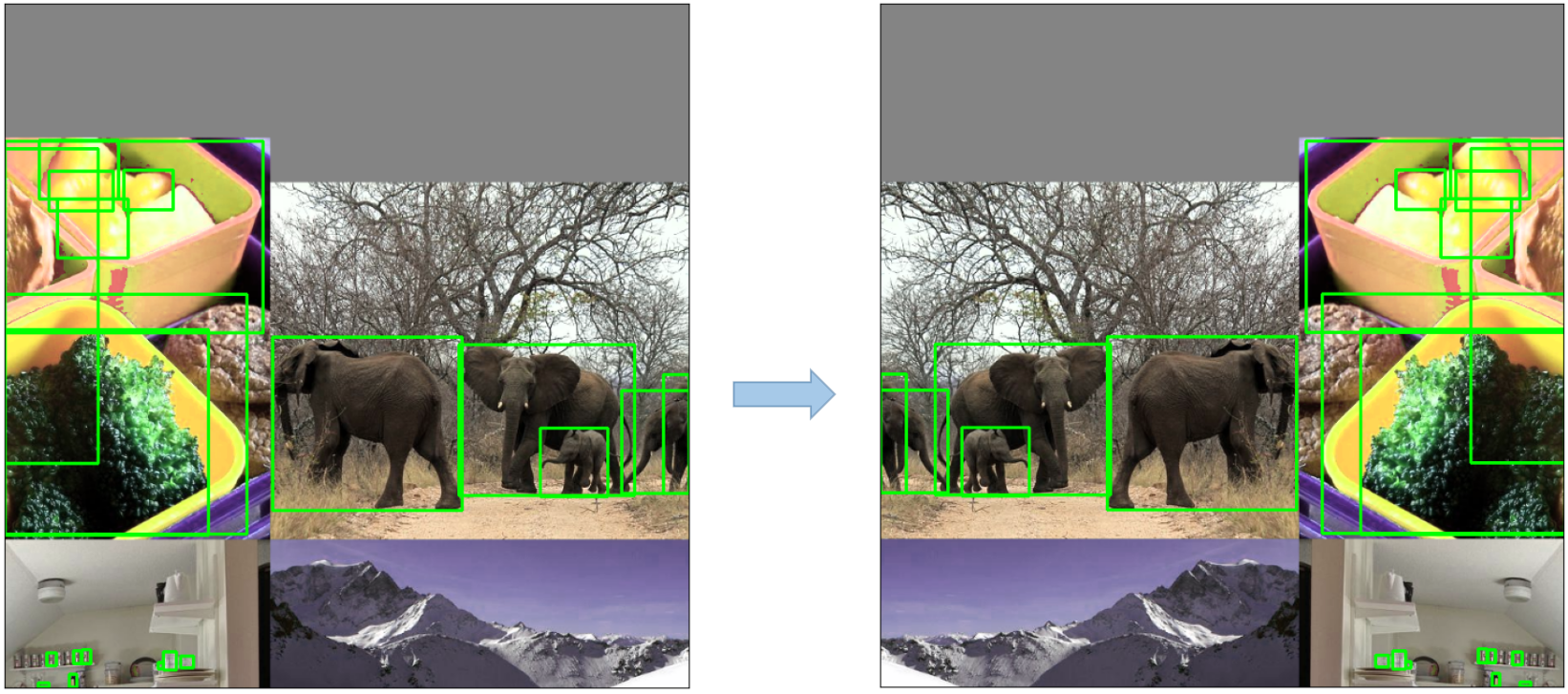

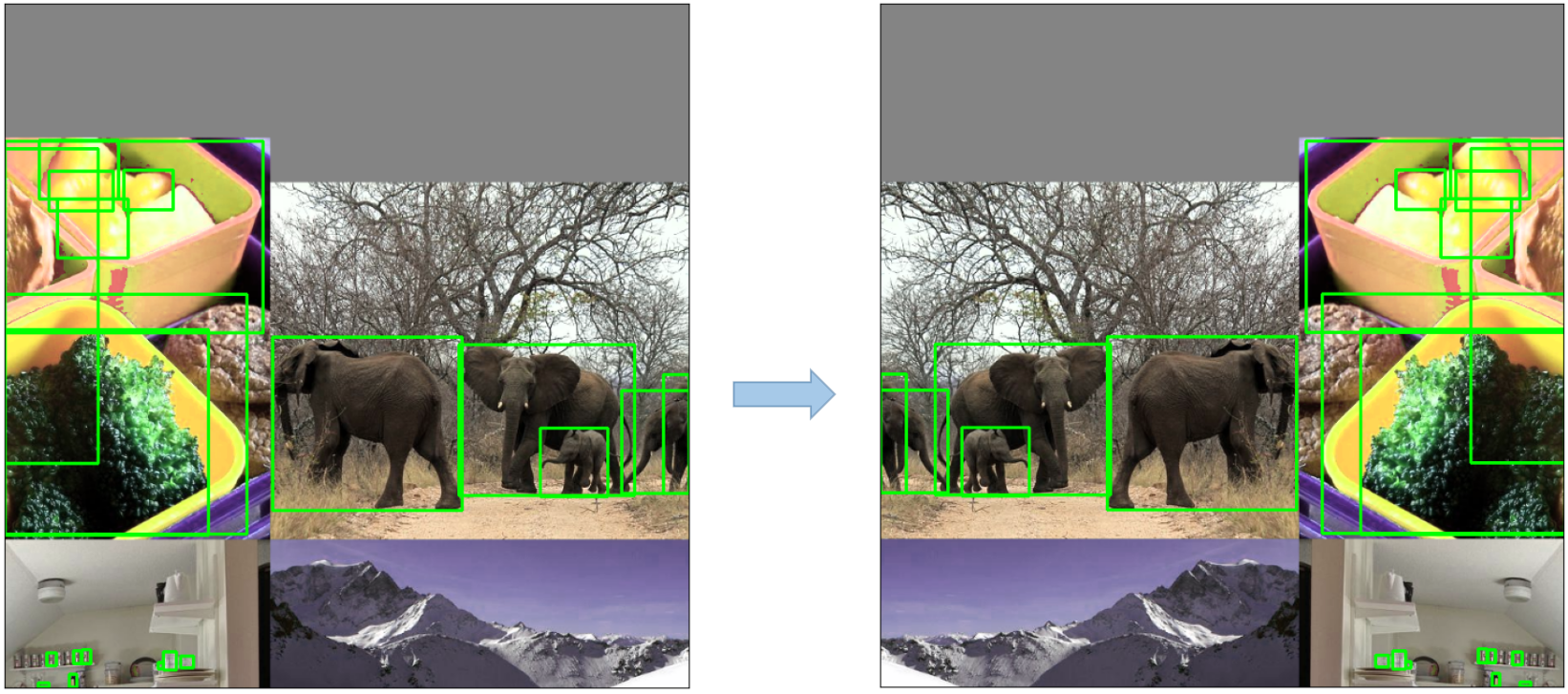

+**Comparison of state-of-the-art object detectors.** According to the results in Table 2 of the paper, YOLOv7 has the best overall speed-accuracy trade-off. Compared with YOLOv5-N (r6.1), YOLOv7-tiny-SiLU is 127 fps faster and 10.7% more accurate on AP. YOLOv7 reaches 51.4% AP at 161 fps, while PPYOLOE-L reaches the same AP at only 78 fps, and YOLOv7 uses 41% fewer parameters than PPYOLOE-L. YOLOv7-X at 114 fps improves AP by 3.9% over YOLOv5-L (r6.1) at 99 fps. Compared with YOLOv5-X (r6.1) of similar scale, YOLOv7-X is 31 fps faster, has 22% fewer parameters and 8% less computation, and improves AP by 2.2% ([Source](https://arxiv.org/pdf/2207.02696.pdf)).

+

+## Overview

+

+Real-time object detection is an important component in many computer vision systems, including multi-object tracking, autonomous driving, robotics, and medical image analysis. In recent years, real-time object detection development has focused on designing efficient architectures and improving inference speed on various CPUs, GPUs, and neural processing units (NPUs). YOLOv7 supports both mobile GPU and GPU devices, from the edge to the cloud.

+

+Unlike traditional real-time object detectors that focus on architecture optimization, YOLOv7 introduces a focus on the optimization of the training process. This includes modules and optimization methods designed to improve the accuracy of object detection without increasing the inference cost, a concept known as the "trainable bag-of-freebies".

+

+## Key Features

+

+YOLOv7 introduces several key features:

+

+1. **Model Re-parameterization**: YOLOv7 proposes a planned re-parameterized model, a strategy for applying re-parameterization to layers in different networks that is guided by the concept of the gradient propagation path.

+

+2. **Dynamic Label Assignment**: The training of the model with multiple output layers presents a new issue: "How to assign dynamic targets for the outputs of different branches?" To solve this problem, YOLOv7 introduces a new label assignment method called coarse-to-fine lead guided label assignment.

+

+3. **Extended and Compound Scaling**: YOLOv7 proposes "extend" and "compound scaling" methods for the real-time object detector that can effectively utilize parameters and computation.

+

+4. **Efficiency**: According to the paper, the YOLOv7 method reduces the parameters of state-of-the-art real-time object detectors by about 40% and their computation by about 50%, while achieving faster inference and higher detection accuracy.
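Model re-parameterization, the first feature listed above, trains a multi-branch block and folds it into a single layer for inference. The sketch below shows why that fold is exact in a deliberately simplified setting: a RepVGG-style block reduced to 1D, with a 3-tap convolution, a 1-tap convolution, and an identity branch, and no batch normalization. Because every branch is linear, their sum equals one convolution whose kernel is the sum of the (center-aligned) branch kernels. This illustrates only the folding arithmetic, not YOLOv7's planned re-parameterization, which additionally decides which layers should carry such branches; `conv1d_same`, `multi_branch`, and `reparameterize` are hypothetical helper names.

```python
from typing import List


def conv1d_same(x: List[float], k: List[float]) -> List[float]:
    """1D cross-correlation with zero padding so the output has the same length as x (odd kernel)."""
    r = len(k) // 2
    padded = [0.0] * r + list(x) + [0.0] * r
    return [sum(k[j] * padded[i + j] for j in range(len(k))) for i in range(len(x))]


def multi_branch(x: List[float], k3: List[float], k1: List[float]) -> List[float]:
    """Training-time block: 3-tap conv + 1-tap conv + identity, summed element-wise."""
    a = conv1d_same(x, k3)
    b = conv1d_same(x, k1)
    return [ai + bi + xi for ai, bi, xi in zip(a, b, x)]


def reparameterize(k3: List[float], k1: List[float]) -> List[float]:
    """Fold the three linear branches into one kernel for inference."""
    fused = list(k3)          # copy so the training kernel is not modified
    center = len(fused) // 2
    fused[center] += k1[0]    # the 1-tap kernel acts only on the center tap
    fused[center] += 1.0      # the identity branch equals a center tap of 1
    return fused


x = [1.0, -2.0, 3.0, 0.5, 4.0]
k3, k1 = [0.25, -1.0, 0.5], [2.0]
print(reparameterize(k3, k1))  # [0.25, 2.0, 0.5]
```

At inference time only `conv1d_same(x, reparameterize(k3, k1))` is evaluated, so the block costs a single convolution while producing the same output as the three-branch training block.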

+

+## Usage Examples

+

+At the time of writing, Ultralytics does not support YOLOv7 models. Users interested in YOLOv7 should refer directly to the YOLOv7 GitHub repository for installation and usage instructions.

+

+Here is a brief overview of the typical steps you might take to use YOLOv7:

+

+1. Visit the YOLOv7 GitHub repository: [https://github.com/WongKinYiu/yolov7](https://github.com/WongKinYiu/yolov7).

+

+2. Follow the instructions provided in the README file for installation. This typically involves cloning the repository, installing necessary dependencies, and setting up any necessary environment variables.

+

+3. Once installation is complete, you can train and use the model as per the usage instructions provided in the repository. This usually involves preparing your dataset, configuring the model parameters, training the model, and then using the trained model to perform object detection.

+

+Note that the exact steps may vary depending on your use case and the current state of the YOLOv7 repository, so it is strongly recommended to follow the instructions provided in the YOLOv7 GitHub repository.

+

+We regret any inconvenience this may cause and will strive to update this document with usage examples for Ultralytics once support for YOLOv7 is implemented.

+

+## Citations and Acknowledgements

+

+We would like to acknowledge the YOLOv7 authors for their significant contributions in the field of real-time object detection:

+

+```bibtex

+@article{wang2022yolov7,

+ title={{YOLOv7}: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors},

+ author={Wang, Chien-Yao and Bochkovskiy, Alexey and Liao, Hong-Yuan Mark},

+ journal={arXiv preprint arXiv:2207.02696},

+ year={2022}

+}

+```

+

+The original YOLOv7 paper can be found on [arXiv](https://arxiv.org/pdf/2207.02696.pdf). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/WongKinYiu/yolov7). We appreciate their efforts in advancing the field and making their work accessible to the broader community.

\ No newline at end of file

diff --git a/docs/models/yolov8.md b/docs/models/yolov8.md

index 6e3adb5078f..8c78d87e77f 100644

--- a/docs/models/yolov8.md

+++ b/docs/models/yolov8.md

@@ -1,6 +1,7 @@

---

comments: true

description: Learn about YOLOv8's pre-trained weights supporting detection, instance segmentation, pose, and classification tasks. Get performance details.

+keywords: YOLOv8, real-time object detection, object detection, deep learning, machine learning

---

# YOLOv8

diff --git a/docs/modes/benchmark.md b/docs/modes/benchmark.md

index a7600e055e6..c1c159e6bed 100644

--- a/docs/modes/benchmark.md

+++ b/docs/modes/benchmark.md

@@ -1,6 +1,7 @@

---

comments: true

description: Benchmark mode compares speed and accuracy of various YOLOv8 export formats like ONNX or OpenVINO. Optimize formats for speed or accuracy.

+keywords: YOLOv8, Benchmark Mode, Export Formats, ONNX, OpenVINO, TensorRT, Ultralytics Docs

---

diff --git a/docs/modes/export.md b/docs/modes/export.md

index 2352cf4910a..42e45272aae 100644

--- a/docs/modes/export.md

+++ b/docs/modes/export.md

@@ -1,6 +1,7 @@

---

comments: true

description: 'Export mode: Create a deployment-ready YOLOv8 model by converting it to various formats. Export to ONNX or OpenVINO for up to 3x CPU speedup.'

+keywords: ultralytics docs, YOLOv8, export YOLOv8, YOLOv8 model deployment, exporting YOLOv8, ONNX, OpenVINO, TensorRT, CoreML, TF SavedModel, PaddlePaddle, TorchScript, ONNX format, OpenVINO format, TensorRT format, CoreML format, TF SavedModel format, PaddlePaddle format

---

diff --git a/docs/modes/index.md b/docs/modes/index.md

index c9ae14aabd7..5a00afa5d39 100644

--- a/docs/modes/index.md

+++ b/docs/modes/index.md

@@ -1,6 +1,7 @@

---

comments: true

description: Use Ultralytics YOLOv8 Modes (Train, Val, Predict, Export, Track, Benchmark) to train, validate, predict, track, export or benchmark.

+keywords: yolov8, yolo, ultralytics, training, validation, prediction, export, tracking, benchmarking, real-time object detection, object tracking

---

# Ultralytics YOLOv8 Modes

diff --git a/docs/modes/predict.md b/docs/modes/predict.md

index 3deee7c7564..581d4221d52 100644

--- a/docs/modes/predict.md

+++ b/docs/modes/predict.md

@@ -1,6 +1,7 @@

---

comments: true

description: Get started with YOLOv8 Predict mode and input sources. Accepts various input sources such as images, videos, and directories.

+keywords: YOLOv8, predict mode, generator, streaming mode, input sources, video formats, arguments customization

---

@@ -300,4 +301,4 @@ Here's a Python script using OpenCV (cv2) and YOLOv8 to run inference on video f

# Release the video capture object and close the display window

cap.release()

cv2.destroyAllWindows()

- ```

+ ```

\ No newline at end of file

diff --git a/docs/modes/track.md b/docs/modes/track.md

index c0d8adb5069..7b9a83a714b 100644

--- a/docs/modes/track.md

+++ b/docs/modes/track.md

@@ -1,6 +1,7 @@

---

comments: true

description: Explore YOLOv8n-based object tracking with Ultralytics' BoT-SORT and ByteTrack. Learn configuration, usage, and customization tips.

+keywords: object tracking, YOLO, trackers, BoT-SORT, ByteTrack

---

@@ -97,5 +98,4 @@ any configurations(expect the `tracker_type`) you need to.

```

Please refer to [ultralytics/tracker/cfg](https://github.com/ultralytics/ultralytics/tree/main/ultralytics/tracker/cfg)

-page

-

+page

\ No newline at end of file

diff --git a/docs/modes/train.md b/docs/modes/train.md

index 1738975c1f2..882c0d1f1eb 100644

--- a/docs/modes/train.md

+++ b/docs/modes/train.md

@@ -1,6 +1,7 @@

---

comments: true

description: Learn how to train custom YOLOv8 models on various datasets, configure hyperparameters, and use Ultralytics' YOLO for seamless training.

+keywords: YOLOv8, train mode, train a custom YOLOv8 model, hyperparameters, train a model, Comet, ClearML, TensorBoard, logging, loggers

---

diff --git a/docs/modes/val.md b/docs/modes/val.md

index bc294ecbf3e..79fdf6f8e36 100644

--- a/docs/modes/val.md

+++ b/docs/modes/val.md

@@ -1,6 +1,7 @@

---

comments: true

description: Validate and improve YOLOv8n model accuracy on COCO128 and other datasets using hyperparameter & configuration tuning, in Val mode.

+keywords: Ultralytics, YOLO, YOLOv8, Val, Validation, Hyperparameters, Performance, Accuracy, Generalization, COCO, Export Formats, PyTorch

---

diff --git a/docs/quickstart.md b/docs/quickstart.md

index b1fe2afc543..b762e5ab461 100644

--- a/docs/quickstart.md

+++ b/docs/quickstart.md

@@ -1,6 +1,7 @@

---

comments: true

description: Install and use YOLOv8 via CLI or Python. Run single-line commands or integrate with Python projects for object detection, segmentation, and classification.

+keywords: YOLOv8, object detection, segmentation, classification, pip, git, CLI, Python

---

## Install

diff --git a/docs/reference/hub/auth.md b/docs/reference/hub/auth.md

index 6c19030704c..a8b7f9dbef7 100644

--- a/docs/reference/hub/auth.md

+++ b/docs/reference/hub/auth.md

@@ -1,8 +1,9 @@

---

description: Learn how to use Ultralytics hub authentication in your projects with examples and guidelines from the Auth page on Ultralytics Docs.

+keywords: Ultralytics, ultralytics hub, api keys, authentication, collab accounts, requests, hub management, monitoring

---

# Auth

---

:::ultralytics.hub.auth.Auth

-

+

\ No newline at end of file

diff --git a/docs/reference/hub/session.md b/docs/reference/hub/session.md

index 2d7033340e6..3b2115ca5af 100644

--- a/docs/reference/hub/session.md

+++ b/docs/reference/hub/session.md

@@ -1,8 +1,9 @@

---

description: Accelerate your AI development with the Ultralytics HUB Training Session. High-performance training of object detection models.

+keywords: YOLOv5, object detection, HUBTrainingSession, custom models, Ultralytics Docs

---

# HUBTrainingSession

---

:::ultralytics.hub.session.HUBTrainingSession

-

+

\ No newline at end of file

diff --git a/docs/reference/hub/utils.md b/docs/reference/hub/utils.md

index 5b710580cf2..bacefc84534 100644

--- a/docs/reference/hub/utils.md

+++ b/docs/reference/hub/utils.md

@@ -1,5 +1,6 @@

---

description: Explore Ultralytics events, including 'request_with_credentials' and 'smart_request', to improve your project's performance and efficiency.

+keywords: Ultralytics, Hub Utils, API Documentation, Python, requests_with_progress, Events, classes, usage, examples

---

# Events

@@ -20,4 +21,4 @@ description: Explore Ultralytics events, including 'request_with_credentials' an

# smart_request

---

:::ultralytics.hub.utils.smart_request

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/autobackend.md b/docs/reference/nn/autobackend.md

index 2166e7c80a6..9feb71b032e 100644

--- a/docs/reference/nn/autobackend.md

+++ b/docs/reference/nn/autobackend.md

@@ -1,5 +1,6 @@

---

description: Ensure class names match filenames for easy imports. Use AutoBackend to automatically rename and refactor model files.

+keywords: AutoBackend, ultralytics, nn, autobackend, check class names, neural network

---

# AutoBackend

@@ -10,4 +11,4 @@ description: Ensure class names match filenames for easy imports. Use AutoBacken

# check_class_names

---

:::ultralytics.nn.autobackend.check_class_names

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/autoshape.md b/docs/reference/nn/autoshape.md

index 2c5745e33a8..e17976ef8e8 100644

--- a/docs/reference/nn/autoshape.md

+++ b/docs/reference/nn/autoshape.md

@@ -1,5 +1,6 @@

---

description: Detect 80+ object categories with bounding box coordinates and class probabilities using AutoShape in Ultralytics YOLO. Explore Detections now.

+keywords: Ultralytics, YOLO, docs, autoshape, detections, object detection, customized shapes, bounding boxes, computer vision

---

# AutoShape

@@ -10,4 +11,4 @@ description: Detect 80+ object categories with bounding box coordinates and clas

# Detections

---

:::ultralytics.nn.autoshape.Detections

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/block.md b/docs/reference/nn/modules/block.md

index 58a23ed63f5..687e3080340 100644

--- a/docs/reference/nn/modules/block.md

+++ b/docs/reference/nn/modules/block.md

@@ -1,5 +1,6 @@

---

description: Explore ultralytics.nn.modules.block to build powerful YOLO object detection models. Master DFL, HGStem, SPP, CSP components and more.

+keywords: Ultralytics, NN Modules, Blocks, DFL, HGStem, SPP, C1, C2f, C3x, C3TR, GhostBottleneck, BottleneckCSP, Computer Vision

---

# DFL

@@ -85,4 +86,4 @@ description: Explore ultralytics.nn.modules.block to build powerful YOLO object

# BottleneckCSP

---

:::ultralytics.nn.modules.block.BottleneckCSP

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/conv.md b/docs/reference/nn/modules/conv.md

index 7cfaf012cb3..60d0345f4b8 100644

--- a/docs/reference/nn/modules/conv.md

+++ b/docs/reference/nn/modules/conv.md

@@ -1,5 +1,6 @@

---

description: Explore convolutional neural network modules & techniques such as LightConv, DWConv, ConvTranspose, GhostConv, CBAM & autopad with Ultralytics Docs.

+keywords: Ultralytics, Convolutional Neural Network, Conv2, DWConv, ConvTranspose, GhostConv, ChannelAttention, CBAM, autopad

---

# Conv

@@ -70,4 +71,4 @@ description: Explore convolutional neural network modules & techniques such as L

# autopad

---

:::ultralytics.nn.modules.conv.autopad

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/head.md b/docs/reference/nn/modules/head.md

index 17488da73fd..b21124e21a2 100644

--- a/docs/reference/nn/modules/head.md

+++ b/docs/reference/nn/modules/head.md

@@ -1,5 +1,6 @@

---

description: 'Learn about Ultralytics YOLO modules: Segment, Classify, and RTDETRDecoder. Optimize object detection and classification in your project.'

+keywords: Ultralytics, YOLO, object detection, pose estimation, RTDETRDecoder, modules, classes, documentation

---

# Detect

@@ -25,4 +26,4 @@ description: 'Learn about Ultralytics YOLO modules: Segment, Classify, and RTDET

# RTDETRDecoder

---

:::ultralytics.nn.modules.head.RTDETRDecoder

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/transformer.md b/docs/reference/nn/modules/transformer.md

index 654917d8284..8d6429d73aa 100644

--- a/docs/reference/nn/modules/transformer.md

+++ b/docs/reference/nn/modules/transformer.md

@@ -1,5 +1,6 @@

---

description: Explore the Ultralytics nn modules pages on Transformer and MLP blocks, LayerNorm2d, and Deformable Transformer Decoder Layer.

+keywords: Ultralytics, NN Modules, TransformerEncoderLayer, TransformerLayer, MLPBlock, LayerNorm2d, DeformableTransformerDecoderLayer, examples, code snippets, tutorials

---

# TransformerEncoderLayer

@@ -50,4 +51,4 @@ description: Explore the Ultralytics nn modules pages on Transformer and MLP blo

# DeformableTransformerDecoder

---

:::ultralytics.nn.modules.transformer.DeformableTransformerDecoder

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/modules/utils.md b/docs/reference/nn/modules/utils.md

index 877c52c01b7..f7aa43f0ded 100644

--- a/docs/reference/nn/modules/utils.md

+++ b/docs/reference/nn/modules/utils.md

@@ -1,5 +1,6 @@

---

description: 'Learn about Ultralytics NN modules: get_clones, linear_init_, and multi_scale_deformable_attn_pytorch. Code examples and usage tips.'

+keywords: Ultralytics, NN Utils, Docs, PyTorch, bias initialization, linear initialization, multi-scale deformable attention

---

# _get_clones

@@ -25,4 +26,4 @@ description: 'Learn about Ultralytics NN modules: get_clones, linear_init_, and

# multi_scale_deformable_attn_pytorch

---

:::ultralytics.nn.modules.utils.multi_scale_deformable_attn_pytorch

-

+

\ No newline at end of file

diff --git a/docs/reference/nn/tasks.md b/docs/reference/nn/tasks.md

index 502b82d7495..3258e4ff920 100644

--- a/docs/reference/nn/tasks.md

+++ b/docs/reference/nn/tasks.md

@@ -1,5 +1,6 @@

---

description: Learn how to work with Ultralytics YOLO Detection, Segmentation & Classification Models, load weights and parse models in PyTorch.

+keywords: neural network, deep learning, computer vision, object detection, image segmentation, image classification, model ensemble, PyTorch

---

# BaseModel

@@ -70,4 +71,4 @@ description: Learn how to work with Ultralytics YOLO Detection, Segmentation & C

# guess_model_task

---

:::ultralytics.nn.tasks.guess_model_task

-

+

\ No newline at end of file

diff --git a/docs/reference/tracker/track.md b/docs/reference/tracker/track.md

index 51c48c9f317..156ee0002ab 100644

--- a/docs/reference/tracker/track.md

+++ b/docs/reference/tracker/track.md

@@ -1,5 +1,6 @@

---

description: Learn how to register custom event-tracking and track predictions with Ultralytics YOLO via on_predict_start and register_tracker methods.

+keywords: Ultralytics YOLO, tracker registration, on_predict_start, object detection

---

# on_predict_start

@@ -15,4 +16,4 @@ description: Learn how to register custom event-tracking and track predictions w

# register_tracker

---

:::ultralytics.tracker.track.register_tracker

-

+

\ No newline at end of file

diff --git a/docs/reference/tracker/trackers/basetrack.md b/docs/reference/tracker/trackers/basetrack.md

index d21f29e1cce..9b767eca3ff 100644

--- a/docs/reference/tracker/trackers/basetrack.md

+++ b/docs/reference/tracker/trackers/basetrack.md

@@ -1,5 +1,6 @@

---

description: 'TrackState: A comprehensive guide to Ultralytics tracker''s BaseTrack for monitoring model performance. Improve your tracking capabilities now!'

+keywords: object detection, object tracking, Ultralytics YOLO, TrackState, BaseTrack

---

# TrackState

@@ -10,4 +11,4 @@ description: 'TrackState: A comprehensive guide to Ultralytics tracker''s BaseTr

# BaseTrack

---

:::ultralytics.tracker.trackers.basetrack.BaseTrack

-

+

\ No newline at end of file

diff --git a/docs/reference/tracker/trackers/bot_sort.md b/docs/reference/tracker/trackers/bot_sort.md

index b2d0f9bbcdf..f3f50132ed0 100644

--- a/docs/reference/tracker/trackers/bot_sort.md

+++ b/docs/reference/tracker/trackers/bot_sort.md

@@ -1,5 +1,6 @@

---

description: '"Optimize tracking with Ultralytics BOTrack. Easily sort and track bots with BOTSORT. Streamline data collection for improved performance."'

+keywords: BOTrack, BOTSORT, Ultralytics YOLO Docs, object tracking, multi-object tracking

---

# BOTrack

@@ -10,4 +11,4 @@ description: '"Optimize tracking with Ultralytics BOTrack. Easily sort and track

# BOTSORT

---

:::ultralytics.tracker.trackers.bot_sort.BOTSORT

-

+

\ No newline at end of file

diff --git a/docs/reference/tracker/trackers/byte_tracker.md b/docs/reference/tracker/trackers/byte_tracker.md

index c96f85a62eb..cbaf90a910d 100644

--- a/docs/reference/tracker/trackers/byte_tracker.md

+++ b/docs/reference/tracker/trackers/byte_tracker.md

@@ -1,5 +1,6 @@

---

description: Learn how to track ByteAI model sizes and tips for model optimization with STrack, a byte tracking tool from Ultralytics.

+keywords: Ultralytics YOLO, ByteTrack, STrack, BYTETracker, object tracking, multi-object tracking

---

# STrack

@@ -10,4 +11,4 @@ description: Learn how to track ByteAI model sizes and tips for model optimizati

# BYTETracker

---

:::ultralytics.tracker.trackers.byte_tracker.BYTETracker

-

+

\ No newline at end of file

diff --git a/docs/reference/tracker/utils/gmc.md b/docs/reference/tracker/utils/gmc.md

index b208a4a1b3c..461b7fd88b0 100644

--- a/docs/reference/tracker/utils/gmc.md

+++ b/docs/reference/tracker/utils/gmc.md

@@ -1,8 +1,9 @@

---

description: '"Track Google Marketing Campaigns in GMC with Ultralytics Tracker. Learn to set up and use GMC for detailed analytics. Get started now."'

+keywords: Ultralytics, YOLO, GMC, motion compensation, object tracking, tracker, documentation

---

# GMC

---

:::ultralytics.tracker.utils.gmc.GMC

-

+

\ No newline at end of file

diff --git a/docs/reference/tracker/utils/kalman_filter.md b/docs/reference/tracker/utils/kalman_filter.md

index baa749c03d5..93217151cdb 100644

--- a/docs/reference/tracker/utils/kalman_filter.md

+++ b/docs/reference/tracker/utils/kalman_filter.md

@@ -1,5 +1,6 @@

---

description: Improve object tracking with KalmanFilterXYAH in Ultralytics YOLO - an efficient and accurate algorithm for state estimation.

+keywords: KalmanFilterXYAH, Ultralytics Docs, Kalman filter algorithm, object tracking, computer vision, YOLO

---

# KalmanFilterXYAH

@@ -10,4 +11,4 @@ description: Improve object tracking with KalmanFilterXYAH in Ultralytics YOLO -

# KalmanFilterXYWH

---

:::ultralytics.tracker.utils.kalman_filter.KalmanFilterXYWH

-

+

\ No newline at end of file

diff --git a/docs/reference/tracker/utils/matching.md b/docs/reference/tracker/utils/matching.md

index 5d8474bee0d..4f1725dbbe8 100644

--- a/docs/reference/tracker/utils/matching.md

+++ b/docs/reference/tracker/utils/matching.md

@@ -1,5 +1,6 @@

---

description: Learn how to match and fuse object detections for accurate target tracking using Ultralytics' YOLO merge_matches, iou_distance, and embedding_distance.

+keywords: Ultralytics, multi-object tracking, object tracking, detection, recognition, matching, indices, iou distance, gate cost matrix, fuse iou, bbox ious

---

# merge_matches

@@ -60,4 +61,4 @@ description: Learn how to match and fuse object detections for accurate target t

# bbox_ious

---

:::ultralytics.tracker.utils.matching.bbox_ious

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/annotator.md b/docs/reference/yolo/data/annotator.md

index 9e97df62775..25ca21aaf8b 100644

--- a/docs/reference/yolo/data/annotator.md

+++ b/docs/reference/yolo/data/annotator.md

@@ -1,8 +1,9 @@

---

description: Learn how to use auto_annotate in Ultralytics YOLO to generate annotations automatically for your dataset. Simplify object detection workflows.

+keywords: Ultralytics YOLO, Auto Annotator, AI, image annotation, object detection, labelling, tool

---

# auto_annotate

---

:::ultralytics.yolo.data.annotator.auto_annotate

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/augment.md b/docs/reference/yolo/data/augment.md

index 1cb38d06581..1bff44f60d8 100644

--- a/docs/reference/yolo/data/augment.md

+++ b/docs/reference/yolo/data/augment.md

@@ -1,5 +1,6 @@

---

description: Use Ultralytics YOLO Data Augmentation transforms with Base, MixUp, and Albumentations for object detection and classification.

+keywords: YOLO, data augmentation, transforms, BaseTransform, MixUp, RandomHSV, Albumentations, ToTensor, classify_transforms, classify_albumentations

---

# BaseTransform

@@ -95,4 +96,4 @@ description: Use Ultralytics YOLO Data Augmentation transforms with Base, MixUp,

# classify_albumentations

---

:::ultralytics.yolo.data.augment.classify_albumentations

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/base.md b/docs/reference/yolo/data/base.md

index 2f14c1dd9ba..eb6defed282 100644

--- a/docs/reference/yolo/data/base.md

+++ b/docs/reference/yolo/data/base.md

@@ -1,8 +1,9 @@

---

description: Learn about BaseDataset in Ultralytics YOLO, a flexible dataset class for object detection. Maximize your YOLO performance with custom datasets.

+keywords: BaseDataset, Ultralytics YOLO, object detection, real-world applications, documentation

---

# BaseDataset

---

:::ultralytics.yolo.data.base.BaseDataset

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/build.md b/docs/reference/yolo/data/build.md

index b84cefffac6..d1f05084486 100644

--- a/docs/reference/yolo/data/build.md

+++ b/docs/reference/yolo/data/build.md

@@ -1,5 +1,6 @@

---

description: Maximize YOLO performance with Ultralytics' InfiniteDataLoader, seed_worker, build_dataloader, and load_inference_source functions.

+keywords: Ultralytics, YOLO, object detection, data loading, build dataloader, load inference source

---

# InfiniteDataLoader

@@ -35,4 +36,4 @@ description: Maximize YOLO performance with Ultralytics' InfiniteDataLoader, see

# load_inference_source

---

:::ultralytics.yolo.data.build.load_inference_source

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/converter.md b/docs/reference/yolo/data/converter.md

index 34856337415..7f8833aa9ee 100644

--- a/docs/reference/yolo/data/converter.md

+++ b/docs/reference/yolo/data/converter.md

@@ -1,5 +1,6 @@

---

description: Convert COCO-91 to COCO-80 class, RLE to polygon, and merge multi-segment images with Ultralytics YOLO data converter. Improve your object detection.

+keywords: Ultralytics, YOLO, converter, COCO91, COCO80, rle2polygon, merge_multi_segment, annotations

---

# coco91_to_coco80_class

@@ -30,4 +31,4 @@ description: Convert COCO-91 to COCO-80 class, RLE to polygon, and merge multi-s

# delete_dsstore

---

:::ultralytics.yolo.data.converter.delete_dsstore

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/dataloaders/stream_loaders.md b/docs/reference/yolo/data/dataloaders/stream_loaders.md

index afecea7aac6..536aa8d455c 100644

--- a/docs/reference/yolo/data/dataloaders/stream_loaders.md

+++ b/docs/reference/yolo/data/dataloaders/stream_loaders.md

@@ -1,5 +1,6 @@

---

description: 'Ultralytics YOLO Docs: Learn about stream loaders for image and tensor data, as well as autocasting techniques. Check out SourceTypes and more.'

+keywords: Ultralytics YOLO, data loaders, stream load images, screenshots, tensor data, autocast list, youtube URL retriever

---

# SourceTypes

@@ -40,4 +41,4 @@ description: 'Ultralytics YOLO Docs: Learn about stream loaders for image and te

# get_best_youtube_url

---

:::ultralytics.yolo.data.dataloaders.stream_loaders.get_best_youtube_url

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/dataloaders/v5augmentations.md b/docs/reference/yolo/data/dataloaders/v5augmentations.md

index c75e57d5c4d..aa5f3f71005 100644

--- a/docs/reference/yolo/data/dataloaders/v5augmentations.md

+++ b/docs/reference/yolo/data/dataloaders/v5augmentations.md

@@ -1,5 +1,6 @@

---

description: Enhance image data with Albumentations CenterCrop, normalize, augment_hsv, replicate, random_perspective, cutout, & box_candidates.

+keywords: YOLO, object detection, data loaders, V5 augmentations, CenterCrop, normalize, random_perspective

---

# Albumentations

@@ -85,4 +86,4 @@ description: Enhance image data with Albumentations CenterCrop, normalize, augme

# classify_transforms

---

:::ultralytics.yolo.data.dataloaders.v5augmentations.classify_transforms

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/dataloaders/v5loader.md b/docs/reference/yolo/data/dataloaders/v5loader.md

index d8b3110445a..20161f8b7c9 100644

--- a/docs/reference/yolo/data/dataloaders/v5loader.md

+++ b/docs/reference/yolo/data/dataloaders/v5loader.md

@@ -1,5 +1,6 @@

---

description: Efficiently load images and labels to models using Ultralytics YOLO's InfiniteDataLoader, LoadScreenshots, and LoadStreams.

+keywords: YOLO, data loader, image classification, object detection, Ultralytics

---

# InfiniteDataLoader

@@ -90,4 +91,4 @@ description: Efficiently load images and labels to models using Ultralytics YOLO

# create_classification_dataloader

---

:::ultralytics.yolo.data.dataloaders.v5loader.create_classification_dataloader

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/dataset.md b/docs/reference/yolo/data/dataset.md

index c0d181e3563..f5bd9a10116 100644

--- a/docs/reference/yolo/data/dataset.md

+++ b/docs/reference/yolo/data/dataset.md

@@ -1,5 +1,6 @@

---

description: Create custom YOLOv5 datasets with Ultralytics YOLODataset and SemanticDataset. Streamline your object detection and segmentation projects.

+keywords: YOLODataset, SemanticDataset, Ultralytics YOLO Docs, Object Detection, Segmentation

---

# YOLODataset

@@ -15,4 +16,4 @@ description: Create custom YOLOv5 datasets with Ultralytics YOLODataset and Sema

# SemanticDataset

---

:::ultralytics.yolo.data.dataset.SemanticDataset

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/dataset_wrappers.md b/docs/reference/yolo/data/dataset_wrappers.md

index 04e2997b99c..49a24af652b 100644

--- a/docs/reference/yolo/data/dataset_wrappers.md

+++ b/docs/reference/yolo/data/dataset_wrappers.md

@@ -1,8 +1,9 @@

---

description: Create a custom dataset of mixed and oriented rectangular objects with Ultralytics YOLO's MixAndRectDataset.

+keywords: Ultralytics YOLO, MixAndRectDataset, dataset wrapper, image-level annotations, object-level annotations, rectangular object detection

---

# MixAndRectDataset

---

:::ultralytics.yolo.data.dataset_wrappers.MixAndRectDataset

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/data/utils.md b/docs/reference/yolo/data/utils.md

index 3a0e9a4acf7..19bd739a5c2 100644

--- a/docs/reference/yolo/data/utils.md

+++ b/docs/reference/yolo/data/utils.md

@@ -1,5 +1,6 @@

---

description: Efficiently handle data in YOLO with Ultralytics. Utilize HUBDatasetStats and customize dataset with these data utility functions.

+keywords: Ultralytics, YOLO, data utils, HUBDatasetStats, zip_directory, datasets, object detection

---

# HUBDatasetStats

@@ -65,4 +66,4 @@ description: Efficiently handle data in YOLO with Ultralytics. Utilize HUBDatase

# zip_directory

---

:::ultralytics.yolo.data.utils.zip_directory

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/engine/exporter.md b/docs/reference/yolo/engine/exporter.md

index 4b19204243e..aef271b5c65 100644

--- a/docs/reference/yolo/engine/exporter.md

+++ b/docs/reference/yolo/engine/exporter.md

@@ -1,5 +1,6 @@

---

description: Learn how to export your YOLO model in various formats using Ultralytics' exporter package - iOS, GDC, and more.

+keywords: Ultralytics, YOLO, exporter, iOS detect model, gd_outputs, export

---

# Exporter

@@ -30,4 +31,4 @@ description: Learn how to export your YOLO model in various formats using Ultral

# export

---

:::ultralytics.yolo.engine.exporter.export

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/engine/model.md b/docs/reference/yolo/engine/model.md

index d9b90d766c7..be36339923c 100644

--- a/docs/reference/yolo/engine/model.md

+++ b/docs/reference/yolo/engine/model.md

@@ -1,8 +1,9 @@

---

description: Discover the YOLO model of Ultralytics engine to simplify your object detection tasks with state-of-the-art models.

+keywords: YOLO, object detection, model, architecture, usage, customization, Ultralytics Docs

---

# YOLO

---

:::ultralytics.yolo.engine.model.YOLO

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/engine/predictor.md b/docs/reference/yolo/engine/predictor.md

index 52540e738c2..ec17842f51c 100644

--- a/docs/reference/yolo/engine/predictor.md

+++ b/docs/reference/yolo/engine/predictor.md

@@ -1,8 +1,9 @@

---

description: '"The BasePredictor class in Ultralytics YOLO Engine predicts object detection in images and videos. Learn to implement YOLO with ease."'

+keywords: Ultralytics, YOLO, BasePredictor, Object Detection, Computer Vision, Fast Model, Insights

---

# BasePredictor

---

:::ultralytics.yolo.engine.predictor.BasePredictor

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/engine/results.md b/docs/reference/yolo/engine/results.md

index e64e603563f..504864cb147 100644

--- a/docs/reference/yolo/engine/results.md

+++ b/docs/reference/yolo/engine/results.md

@@ -1,5 +1,6 @@

---

description: Learn about BaseTensor & Boxes in Ultralytics YOLO Engine. Check out Ultralytics Docs for quality tutorials and resources on object detection.

+keywords: YOLO, Engine, Results, Masks, Probs, Ultralytics

---

# BaseTensor

@@ -21,3 +22,13 @@ description: Learn about BaseTensor & Boxes in Ultralytics YOLO Engine. Check ou

---

:::ultralytics.yolo.engine.results.Masks

+

+# Keypoints

+---

+:::ultralytics.yolo.engine.results.Keypoints

+

+

+# Probs

+---

+:::ultralytics.yolo.engine.results.Probs

+

\ No newline at end of file

diff --git a/docs/reference/yolo/engine/trainer.md b/docs/reference/yolo/engine/trainer.md

index 1892bbd88ca..fc51c24bc83 100644

--- a/docs/reference/yolo/engine/trainer.md

+++ b/docs/reference/yolo/engine/trainer.md

@@ -1,13 +1,9 @@

---

description: Train faster with mixed precision. Learn how to use BaseTrainer with Advanced Mixed Precision to optimize YOLOv3 and YOLOv4 models.

+keywords: Ultralytics YOLO, BaseTrainer, object detection models, training guide

---

# BaseTrainer

---

:::ultralytics.yolo.engine.trainer.BaseTrainer

-

-

-# check_amp

----

-:::ultralytics.yolo.engine.trainer.check_amp

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/engine/validator.md b/docs/reference/yolo/engine/validator.md

index e499fa78b48..d99b062559f 100644

--- a/docs/reference/yolo/engine/validator.md

+++ b/docs/reference/yolo/engine/validator.md

@@ -1,8 +1,9 @@

---

description: Ensure YOLOv5 models meet constraints and standards with the BaseValidator class. Learn how to use it here.

+keywords: Ultralytics, YOLO, BaseValidator, models, validation, object detection

---

# BaseValidator

---

:::ultralytics.yolo.engine.validator.BaseValidator

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/nas/model.md b/docs/reference/yolo/nas/model.md

new file mode 100644

index 00000000000..c5fe258469b

--- /dev/null

+++ b/docs/reference/yolo/nas/model.md

@@ -0,0 +1,9 @@

+---

+description: Learn about the Neural Architecture Search (NAS) feature available in Ultralytics YOLO. Find out how NAS can improve object detection models and increase accuracy. Get started today!

+keywords: Ultralytics YOLO, object detection, NAS, Neural Architecture Search, model optimization, accuracy improvement

+---

+

+# NAS

+---

+:::ultralytics.yolo.nas.model.NAS

+

\ No newline at end of file

diff --git a/docs/reference/yolo/nas/predict.md b/docs/reference/yolo/nas/predict.md

new file mode 100644

index 00000000000..0b8a62d1fbe

--- /dev/null

+++ b/docs/reference/yolo/nas/predict.md

@@ -0,0 +1,9 @@

+---

+description: Learn how to use NASPredictor in Ultralytics YOLO for deploying efficient CNN models with search algorithms in neural architecture search.

+keywords: Ultralytics YOLO, NASPredictor, neural architecture search, efficient CNN models, search algorithms

+---

+

+# NASPredictor

+---

+:::ultralytics.yolo.nas.predict.NASPredictor

+

\ No newline at end of file

diff --git a/docs/reference/yolo/nas/val.md b/docs/reference/yolo/nas/val.md

new file mode 100644

index 00000000000..6f849a471fb

--- /dev/null

+++ b/docs/reference/yolo/nas/val.md

@@ -0,0 +1,9 @@

+---

+description: Learn about NASValidator in the Ultralytics YOLO Docs. Properly validate YOLO neural architecture search results for optimal performance.

+keywords: NASValidator, YOLO, neural architecture search, validation, performance, Ultralytics

+---

+

+# NASValidator

+---

+:::ultralytics.yolo.nas.val.NASValidator

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/autobatch.md b/docs/reference/yolo/utils/autobatch.md

index a2bf4a3c690..dc7c0a8b8c1 100644

--- a/docs/reference/yolo/utils/autobatch.md

+++ b/docs/reference/yolo/utils/autobatch.md

@@ -1,5 +1,6 @@

---

description: Dynamically adjusts input size to optimize GPU memory usage during training. Learn how to use check_train_batch_size with Ultralytics YOLO.

+keywords: YOLOv5, batch size, training, Ultralytics Autobatch, object detection, model performance

---

# check_train_batch_size

@@ -10,4 +11,4 @@ description: Dynamically adjusts input size to optimize GPU memory usage during

# autobatch

---

:::ultralytics.yolo.utils.autobatch.autobatch

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/benchmarks.md b/docs/reference/yolo/utils/benchmarks.md

index e3abcade64a..39112eac232 100644

--- a/docs/reference/yolo/utils/benchmarks.md

+++ b/docs/reference/yolo/utils/benchmarks.md

@@ -1,5 +1,6 @@

---

description: Improve your YOLO's performance and measure its speed. Benchmark utility for YOLOv5.

+keywords: Ultralytics YOLO, ProfileModels, benchmark, model inference, detection

---

# ProfileModels

@@ -10,4 +11,4 @@ description: Improve your YOLO's performance and measure its speed. Benchmark ut

# benchmark

---

:::ultralytics.yolo.utils.benchmarks.benchmark

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/base.md b/docs/reference/yolo/utils/callbacks/base.md

index a448dac8c66..d82f0fd287e 100644

--- a/docs/reference/yolo/utils/callbacks/base.md

+++ b/docs/reference/yolo/utils/callbacks/base.md

@@ -1,5 +1,6 @@

---

description: Learn about YOLO's callback functions from on_train_start to add_integration_callbacks. See how these callbacks modify and save models.

+keywords: YOLO, Ultralytics, callbacks, object detection, training, inference

---

# on_pretrain_routine_start

@@ -135,4 +136,4 @@ description: Learn about YOLO's callback functions from on_train_start to add_in

# add_integration_callbacks

---

:::ultralytics.yolo.utils.callbacks.base.add_integration_callbacks

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/clearml.md b/docs/reference/yolo/utils/callbacks/clearml.md

index 8b7bbfce0ea..7dc01a38dfb 100644

--- a/docs/reference/yolo/utils/callbacks/clearml.md

+++ b/docs/reference/yolo/utils/callbacks/clearml.md

@@ -1,5 +1,6 @@

---

description: Improve your YOLOv5 model training with callbacks from ClearML. Learn about log debug samples, pre-training routines, validation and more.

+keywords: Ultralytics YOLO, ClearML, callbacks, log plots, epoch monitoring, training end events

---

# _log_debug_samples

@@ -35,4 +36,4 @@ description: Improve your YOLOv5 model training with callbacks from ClearML. Lea

# on_train_end

---

:::ultralytics.yolo.utils.callbacks.clearml.on_train_end

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/comet.md b/docs/reference/yolo/utils/callbacks/comet.md

index 9e81dfc87b0..2e1bffe8344 100644

--- a/docs/reference/yolo/utils/callbacks/comet.md

+++ b/docs/reference/yolo/utils/callbacks/comet.md

@@ -1,5 +1,6 @@

---

description: Learn about YOLO callbacks using the Comet.ml platform, enhancing object detection training and testing with custom logging and visualizations.

+keywords: Ultralytics, YOLO, callbacks, Comet ML, log images, log predictions, log plots, fetch metadata, fetch annotations, create experiment data, format experiment data

---

# _get_comet_mode

@@ -120,4 +121,4 @@ description: Learn about YOLO callbacks using the Comet.ml platform, enhancing o

# on_train_end

---

:::ultralytics.yolo.utils.callbacks.comet.on_train_end

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/dvc.md b/docs/reference/yolo/utils/callbacks/dvc.md

new file mode 100644

index 00000000000..1ca463697dd

--- /dev/null

+++ b/docs/reference/yolo/utils/callbacks/dvc.md

@@ -0,0 +1,54 @@

+---

+description: Explore Ultralytics YOLO Utils DVC Callbacks such as logging images, plots, confusion matrices, and training progress.

+keywords: Ultralytics, YOLO, Utils, DVC, Callbacks, images, plots, confusion matrices, training progress

+---

+

+# _logger_disabled

+---

+:::ultralytics.yolo.utils.callbacks.dvc._logger_disabled

+

+

+# _log_images

+---

+:::ultralytics.yolo.utils.callbacks.dvc._log_images

+

+

+# _log_plots

+---

+:::ultralytics.yolo.utils.callbacks.dvc._log_plots

+

+

+# _log_confusion_matrix

+---

+:::ultralytics.yolo.utils.callbacks.dvc._log_confusion_matrix

+

+

+# on_pretrain_routine_start

+---

+:::ultralytics.yolo.utils.callbacks.dvc.on_pretrain_routine_start

+

+

+# on_pretrain_routine_end

+---

+:::ultralytics.yolo.utils.callbacks.dvc.on_pretrain_routine_end

+

+

+# on_train_start

+---

+:::ultralytics.yolo.utils.callbacks.dvc.on_train_start

+

+

+# on_train_epoch_start

+---

+:::ultralytics.yolo.utils.callbacks.dvc.on_train_epoch_start

+

+

+# on_fit_epoch_end

+---

+:::ultralytics.yolo.utils.callbacks.dvc.on_fit_epoch_end

+

+

+# on_train_end

+---

+:::ultralytics.yolo.utils.callbacks.dvc.on_train_end

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/hub.md b/docs/reference/yolo/utils/callbacks/hub.md

index aa751e3909f..7337c86c527 100644

--- a/docs/reference/yolo/utils/callbacks/hub.md

+++ b/docs/reference/yolo/utils/callbacks/hub.md

@@ -1,5 +1,6 @@

---

description: Improve YOLOv5 model training with Ultralytics' on-train callbacks. Boost performance on-pretrain-routine-end, model-save, train/predict start.

+keywords: Ultralytics, YOLO, callbacks, on_pretrain_routine_end, on_fit_epoch_end, on_train_start, on_val_start, on_predict_start, on_export_start

---

# on_pretrain_routine_end

@@ -40,4 +41,4 @@ description: Improve YOLOv5 model training with Ultralytics' on-train callbacks.

# on_export_start

---

:::ultralytics.yolo.utils.callbacks.hub.on_export_start

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/mlflow.md b/docs/reference/yolo/utils/callbacks/mlflow.md

index 8c0d717c91a..b6708904981 100644

--- a/docs/reference/yolo/utils/callbacks/mlflow.md

+++ b/docs/reference/yolo/utils/callbacks/mlflow.md

@@ -1,5 +1,6 @@

---

description: Track model performance and metrics with MLflow in YOLOv5. Use callbacks like on_pretrain_routine_end or on_train_end to log information.

+keywords: Ultralytics, YOLO, Utils, MLflow, callbacks, on_pretrain_routine_end, on_train_end, Tracking, Model Management, training

---

# on_pretrain_routine_end

@@ -15,4 +16,4 @@ description: Track model performance and metrics with MLflow in YOLOv5. Use call

# on_train_end

---

:::ultralytics.yolo.utils.callbacks.mlflow.on_train_end

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/neptune.md b/docs/reference/yolo/utils/callbacks/neptune.md

index 2b4597875e5..195c9beef9c 100644

--- a/docs/reference/yolo/utils/callbacks/neptune.md

+++ b/docs/reference/yolo/utils/callbacks/neptune.md

@@ -1,5 +1,6 @@

---

description: Improve YOLOv5 training with Neptune, a powerful logging tool. Track metrics like images, plots, and epochs for better model performance.

+keywords: Ultralytics, YOLO, Neptune, Callbacks, log scalars, log images, log plots, training, validation

---

# _log_scalars

@@ -40,4 +41,4 @@ description: Improve YOLOv5 training with Neptune, a powerful logging tool. Trac

# on_train_end

---

:::ultralytics.yolo.utils.callbacks.neptune.on_train_end

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/raytune.md b/docs/reference/yolo/utils/callbacks/raytune.md

index d20f4eada7e..fa1267d356d 100644

--- a/docs/reference/yolo/utils/callbacks/raytune.md

+++ b/docs/reference/yolo/utils/callbacks/raytune.md

@@ -1,8 +1,9 @@

---

description: '"Improve YOLO model performance with on_fit_epoch_end callback. Learn to integrate with Ray Tune for hyperparameter tuning. Ultralytics YOLO docs."'

+keywords: on_fit_epoch_end, Ultralytics YOLO, callback function, training, model tuning

---

# on_fit_epoch_end

---

:::ultralytics.yolo.utils.callbacks.raytune.on_fit_epoch_end

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/tensorboard.md b/docs/reference/yolo/utils/callbacks/tensorboard.md

index 95291dc6ddf..bf0f4c42cba 100644

--- a/docs/reference/yolo/utils/callbacks/tensorboard.md

+++ b/docs/reference/yolo/utils/callbacks/tensorboard.md

@@ -1,5 +1,6 @@

---

description: Learn how to monitor the training process with Tensorboard using Ultralytics YOLO's "_log_scalars" and "on_batch_end" methods.

+keywords: TensorBoard callbacks, YOLO training, Ultralytics YOLO

---

# _log_scalars

@@ -20,4 +21,4 @@ description: Learn how to monitor the training process with Tensorboard using Ul

# on_fit_epoch_end

---

:::ultralytics.yolo.utils.callbacks.tensorboard.on_fit_epoch_end

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/callbacks/wb.md b/docs/reference/yolo/utils/callbacks/wb.md

index 48a6c812c5c..03045e2953b 100644

--- a/docs/reference/yolo/utils/callbacks/wb.md

+++ b/docs/reference/yolo/utils/callbacks/wb.md

@@ -1,7 +1,13 @@

---

description: Learn how to use Ultralytics YOLO's built-in callbacks `on_pretrain_routine_start` and `on_train_epoch_end` for improved training performance.

+keywords: Ultralytics, YOLO, callbacks, weights, biases, training

---

+# _log_plots

+---

+:::ultralytics.yolo.utils.callbacks.wb._log_plots

+

+

# on_pretrain_routine_start

---

:::ultralytics.yolo.utils.callbacks.wb.on_pretrain_routine_start

@@ -20,4 +26,4 @@ description: Learn how to use Ultralytics YOLO's built-in callbacks `on_pretrain

# on_train_end

---

:::ultralytics.yolo.utils.callbacks.wb.on_train_end

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/checks.md b/docs/reference/yolo/utils/checks.md

index 82b661b8256..4995371a5bc 100644

--- a/docs/reference/yolo/utils/checks.md

+++ b/docs/reference/yolo/utils/checks.md

@@ -1,5 +1,6 @@

---

description: 'Check functions for YOLO utils: image size, version, font, requirements, filename suffix, YAML file, YOLO, and Git version.'

+keywords: YOLO, Ultralytics, Utils, Checks, image sizing, version updates, font compatibility, Python requirements, file suffixes, YAML syntax, image showing, AMP

---

# is_ascii

@@ -72,6 +73,11 @@ description: 'Check functions for YOLO utils: image size, version, font, require

:::ultralytics.yolo.utils.checks.check_yolo

+# check_amp

+---

+:::ultralytics.yolo.utils.checks.check_amp

+

+

# git_describe

---

:::ultralytics.yolo.utils.checks.git_describe

@@ -80,4 +86,4 @@ description: 'Check functions for YOLO utils: image size, version, font, require

# print_args

---

:::ultralytics.yolo.utils.checks.print_args

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/dist.md b/docs/reference/yolo/utils/dist.md

index ef70e99c8e6..c5505446eea 100644

--- a/docs/reference/yolo/utils/dist.md

+++ b/docs/reference/yolo/utils/dist.md

@@ -1,5 +1,6 @@

---

description: Learn how to find free network port and generate DDP (Distributed Data Parallel) command in Ultralytics YOLO with easy examples.

+keywords: ultralytics, YOLO, utils, dist, distributed deep learning, DDP file, DDP cleanup

---

# find_free_network_port

@@ -20,4 +21,4 @@ description: Learn how to find free network port and generate DDP (Distributed D

# ddp_cleanup

---

:::ultralytics.yolo.utils.dist.ddp_cleanup

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/downloads.md b/docs/reference/yolo/utils/downloads.md

index 76580f12ca4..5206e02c9a6 100644

--- a/docs/reference/yolo/utils/downloads.md

+++ b/docs/reference/yolo/utils/downloads.md

@@ -1,5 +1,6 @@

---

description: Download and unzip YOLO pretrained models. Ultralytics YOLO docs utils.downloads.unzip_file, checks disk space, downloads and attempts assets.

+keywords: Ultralytics YOLO, downloads, trained models, datasets, weights, deep learning, computer vision

---

# is_url

@@ -30,4 +31,4 @@ description: Download and unzip YOLO pretrained models. Ultralytics YOLO docs ut

# download

---

:::ultralytics.yolo.utils.downloads.download

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/errors.md b/docs/reference/yolo/utils/errors.md

index fced2117fbd..a498db258d2 100644

--- a/docs/reference/yolo/utils/errors.md

+++ b/docs/reference/yolo/utils/errors.md

@@ -1,8 +1,9 @@

---

description: Learn about HUBModelError in Ultralytics YOLO Docs. Resolve the error and get the most out of your YOLO model.

+keywords: HUBModelError, Ultralytics YOLO, YOLO Documentation, Object detection errors, YOLO Errors, HUBModelError Solutions

---

# HUBModelError

---

:::ultralytics.yolo.utils.errors.HUBModelError

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/files.md b/docs/reference/yolo/utils/files.md

index 6ba88554bdd..4ca39c721e8 100644

--- a/docs/reference/yolo/utils/files.md

+++ b/docs/reference/yolo/utils/files.md

@@ -1,5 +1,6 @@

---

description: 'Learn about Ultralytics YOLO files and directory utilities: WorkingDirectory, file_age, file_size, and make_dirs.'

+keywords: YOLO, object detection, file utils, file age, file size, working directory, make directories, Ultralytics Docs

---

# WorkingDirectory

@@ -35,4 +36,4 @@ description: 'Learn about Ultralytics YOLO files and directory utilities: Workin

# make_dirs

---

:::ultralytics.yolo.utils.files.make_dirs

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/instance.md b/docs/reference/yolo/utils/instance.md

index 455669e8d8d..1b32a808827 100644

--- a/docs/reference/yolo/utils/instance.md

+++ b/docs/reference/yolo/utils/instance.md

@@ -1,5 +1,6 @@

---

description: Learn about Bounding Boxes (Bboxes) and _ntuple in Ultralytics YOLO for object detection. Improve accuracy and speed with these powerful tools.

+keywords: Ultralytics, YOLO, Bboxes, _ntuple, object detection, instance segmentation

---

# Bboxes

@@ -15,4 +16,4 @@ description: Learn about Bounding Boxes (Bboxes) and _ntuple in Ultralytics YOLO

# _ntuple

---

:::ultralytics.yolo.utils.instance._ntuple

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/loss.md b/docs/reference/yolo/utils/loss.md

index b01e3efd433..ad4aa688210 100644

--- a/docs/reference/yolo/utils/loss.md

+++ b/docs/reference/yolo/utils/loss.md

@@ -1,5 +1,6 @@

---

description: Learn about Varifocal Loss and Keypoint Loss in Ultralytics YOLO for advanced bounding box and pose estimation. Visit our docs for more.

+keywords: Ultralytics, YOLO, loss functions, object detection, keypoint detection, segmentation, classification

---

# VarifocalLoss

@@ -35,4 +36,4 @@ description: Learn about Varifocal Loss and Keypoint Loss in Ultralytics YOLO fo

# v8ClassificationLoss

---

:::ultralytics.yolo.utils.loss.v8ClassificationLoss

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/metrics.md b/docs/reference/yolo/utils/metrics.md

index 10d4728ca2d..4cb1158f121 100644

--- a/docs/reference/yolo/utils/metrics.md

+++ b/docs/reference/yolo/utils/metrics.md

@@ -1,5 +1,6 @@

---

description: Explore Ultralytics YOLO's FocalLoss, DetMetrics, PoseMetrics, ClassifyMetrics, and more with Ultralytics Metrics documentation.

+keywords: YOLOv5, metrics, losses, confusion matrix, detection metrics, pose metrics, classification metrics, intersection over area, intersection over union, keypoint intersection over union, average precision, per class average precision, Ultralytics Docs

---

# FocalLoss

@@ -95,4 +96,4 @@ description: Explore Ultralytics YOLO's FocalLoss, DetMetrics, PoseMetrics, Clas

# ap_per_class

---

:::ultralytics.yolo.utils.metrics.ap_per_class

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/ops.md b/docs/reference/yolo/utils/ops.md

index b55c6b88499..0a8aa35650b 100644

--- a/docs/reference/yolo/utils/ops.md

+++ b/docs/reference/yolo/utils/ops.md

@@ -1,5 +1,6 @@

---

description: Learn about various utility functions in Ultralytics YOLO, including x, y, width, height conversions, non-max suppression, and more.

+keywords: Ultralytics, YOLO, Utils Ops, Functions, coco80_to_coco91_class, scale_boxes, non_max_suppression, clip_coords, xyxy2xywh, xywhn2xyxy, xyn2xy, xyxy2ltwh, ltwh2xyxy, resample_segments, process_mask_upsample, process_mask_native, masks2segments, clean_str

---

# Profile

@@ -135,4 +136,4 @@ description: Learn about various utility functions in Ultralytics YOLO, includin

# clean_str

---

:::ultralytics.yolo.utils.ops.clean_str

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/plotting.md b/docs/reference/yolo/utils/plotting.md

index 801032ed1bd..f402f4814dc 100644

--- a/docs/reference/yolo/utils/plotting.md

+++ b/docs/reference/yolo/utils/plotting.md

@@ -1,5 +1,6 @@

---

description: 'Discover the power of YOLO''s plotting functions: Colors, Labels and Images. Code examples to output targets and visualize features. Check it now.'

+keywords: YOLO, object detection, plotting, visualization, annotator, save one box, plot results, feature visualization, Ultralytics

---

# Colors

@@ -40,4 +41,4 @@ description: 'Discover the power of YOLO''s plotting functions: Colors, Labels a

# feature_visualization

---

:::ultralytics.yolo.utils.plotting.feature_visualization

-

+

\ No newline at end of file

diff --git a/docs/reference/yolo/utils/tal.md b/docs/reference/yolo/utils/tal.md

index b6835073209..1f309322012 100644

--- a/docs/reference/yolo/utils/tal.md

+++ b/docs/reference/yolo/utils/tal.md

@@ -1,5 +1,6 @@

---

description: Improve your YOLO models with Ultralytics' TaskAlignedAssigner, select_highest_overlaps, and dist2bbox utilities. Streamline your workflow today.