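📌AiDB: a deep learning model deployment toolbox written in C++. It abstracts the mainstream deep learning inference frameworks behind one unified interface, including ONNXRUNTIME, MNN, NCNN, TNN, PaddleLite, and OpenVINO, and provides deployment demos for multiple scenarios and languages. This project is not only a record of what I have learned but also a summary of several years of my work. If it inspires or helps you, a ⭐Star⭐ is welcome; that is what keeps me updating! 🍔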

📌Article

AiDB: an AI toolbox combining 6 major inference frameworks | speed up your model deployment

How to load ONNX models the "LoRa" way: C++ inference for StableDiffusion-related models

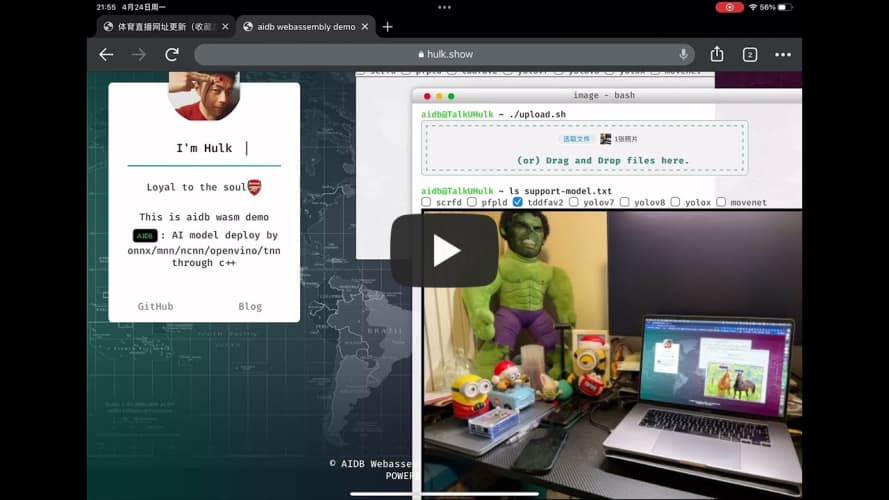

| aidb-webassembly-camera-demo | aidb-webassembly-capture-demo |
|---|---|

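- Covers all mainstream inference frameworks: integrates OnnxRuntime, MNN, NCNN, TNN, PaddleLite, and OpenVINO;

- Easy to use: all inference frameworks are abstracted behind one unified interface, and the backend is selected through a configuration file;

- Multiple scenarios: supports Linux, MacOS, and Android (Win64 is a placeholder for now, I have been lazy lately; iOS is not supported yet, poverty limits my work). Provides a desktop demo (Qt), an Android demo (Kotlin), a Lua demo, and minimal server-side deployment demos (Go Zeros and Python FastAPI);

- Multiple languages: provides calling examples for Python, Lua, and Go;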
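The "unified interface selected by configuration" idea can be illustrated with a minimal sketch. This is not AiDB's actual code: `Engine`, `MnnEngine`, `OnnxEngine`, and `create_engine` are hypothetical names, and the backend strings are assumed to come from a configuration file.

```cpp
#include <memory>
#include <stdexcept>
#include <string>

// Hypothetical illustration only; these types are not AiDB's real classes.
class Engine {
public:
    virtual ~Engine() = default;
    // Name of the backend that this engine wraps.
    virtual std::string name() const = 0;
};

class MnnEngine : public Engine {
public:
    std::string name() const override { return "mnn"; }
};

class OnnxEngine : public Engine {
public:
    std::string name() const override { return "onnx"; }
};

// Map a backend string (as it might appear in a config file) to an engine.
// Callers only ever see the abstract Engine interface.
inline std::unique_ptr<Engine> create_engine(const std::string& backend) {
    if (backend == "mnn") return std::unique_ptr<Engine>(new MnnEngine());
    if (backend == "onnx") return std::unique_ptr<Engine>(new OnnxEngine());
    throw std::invalid_argument("unsupported backend: " + backend);
}
```

With a factory like this, switching backends only changes the string passed in; the calling code stays the same.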

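Two modes are provided, S-mode and H-mode, which correspond to the C API and the C++ API. S-mode is the more flexible one: models can be registered freely and then invoked by task id; the Go Zeros and Qt demos use this mode. H-mode is, to put it bluntly, hard-coded; the Android demo uses this mode.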
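The difference between the two modes can be sketched as follows. This is only an illustration under assumptions, not AiDB's API: `TaskRegistry`, `register_task`, `run`, `contains`, and `run_face_detect` are hypothetical names. The S-mode-style part registers callables at runtime and dispatches on a task id, while the H-mode-style part is a fixed function compiled into the caller.

```cpp
#include <functional>
#include <map>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical illustration only; none of these names come from AiDB.
using Task = std::function<std::string(const std::string&)>;

// S-mode style: tasks are registered at runtime and looked up by id.
class TaskRegistry {
public:
    // Register (or replace) the task stored under `id`.
    void register_task(int id, Task task) { tasks_[id] = std::move(task); }

    // Run the task registered under `id`; throws if the id is unknown.
    std::string run(int id, const std::string& input) const {
        auto it = tasks_.find(id);
        if (it == tasks_.end()) {
            throw std::out_of_range("unknown task id " + std::to_string(id));
        }
        return it->second(input);
    }

    // True if a task has been registered under `id`.
    bool contains(int id) const { return tasks_.count(id) != 0; }

private:
    std::map<int, Task> tasks_;
};

// H-mode style: the pipeline is fixed at compile time.
inline std::string run_face_detect(const std::string& input) {
    return "face_detect(" + input + ")";
}
```

The registry trades a lookup and some indirection for the ability to add tasks without recompiling the caller, which is the flexibility described for S-mode.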

Docker is recommended.

```bash
docker pull mister5ive/ai.deploy.box
```

Or build the image from source:

```bash
git lfs clone https://github.com/TalkUHulk/ai.deploy.box.git
cd ai.deploy.box
docker build -t aidb-dev .
docker run -it --name aidb-test aidb-dev
```

To build without Docker:

```bash
git clone https://github.com/TalkUHulk/ai.deploy.box.git
cd ai.deploy.box
mkdir build && cd build
cmake .. -DC_API={ON/OFF} -DBUILD_SAMPLE={ON/OFF} -DBUILD_PYTHON={ON/OFF} -DBUILD_LUA={ON/OFF} -DENGINE_NCNN_WASM={ON/OFF} -DOPENCV_HAS_FREETYPE={ON/OFF} -DENGINE_MNN={ON/OFF} -DENGINE_ORT={ON/OFF} -DENGINE_NCNN={ON/OFF} -DENGINE_TNN={ON/OFF} -DENGINE_OPV={ON/OFF} -DENGINE_PPLite={ON/OFF}
make -j8
```

- C_API: compile C library;

- BUILD_PYTHON: compile Python api;

- BUILD_LUA: compile Lua api;

- OPENCV_HAS_FREETYPE: whether opencv-contrib was compiled with FreeType; if so, Chinese text can be drawn on images (via the freetype module's putText);

- BUILD_SAMPLE: compile sample;

- ENGINE_NCNN_WASM: compile sample;

- ENGINE_MNN: enable mnn;

- ENGINE_ORT: enable onnxruntime;

- ENGINE_NCNN: enable ncnn;

- ENGINE_TNN: enable tnn;

- ENGINE_OPV: enable openvino;

- ENGINE_PPLite: enable paddle-lite;

- ENABLE_SD: enable stable diffusion;

Model Lite: MEGA | Baidu: 92e8

[Update!!!] All models have been uploaded to 🤗.

Model List

Example: face detection with the scrfd model and the mnn backend:

```cpp
#include <cassert>
#include <opencv2/opencv.hpp>
#include "Interpreter.h"
#include "utility/Utility.h"

int main() {
    // Create an interpreter for the scrfd_500m_kps model on the mnn backend.
    auto det_ins = AIDB::Interpreter::createInstance("scrfd_500m_kps", "mnn");

    // Read the test image and convert it into the network input blob.
    auto bgr = cv::imread("./doc/test/face.jpg");
    cv::Mat blob = *det_ins << bgr;

    // Run inference; forward() fills the outputs and their shapes.
    std::vector<std::vector<float>> outputs;
    std::vector<std::vector<int>> outputs_shape;
    det_ins->forward((float*)blob.data, det_ins->width(), det_ins->height(), det_ins->channel(), outputs, outputs_shape);

    // Post-processing takes a single scale, so both axes must share it.
    std::vector<std::shared_ptr<AIDB::FaceMeta>> face_metas;
    assert(det_ins->scale_h() == det_ins->scale_w());
    AIDB::Utility::scrfd_post_process(outputs, face_metas, det_ins->width(), det_ins->height(), det_ins->scale_h());
    return 0;
}
```

On Linux, run `source set_env.sh` before running the samples.

Sample Usage

Face detection

```bash
./build/samples/FaceDetect model_name backend type inputfile
```

- model_name
  - scrfd_10g_kps
  - scrfd_2.5g_kps
  - scrfd_500m_kps
- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera
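The four positional arguments could be interpreted as in the following sketch. `SampleArgs` and `parse_sample_args` are hypothetical helpers, not part of the AiDB samples, which may parse their arguments differently. The only facts taken from this README are the argument order, the meaning of `type` (0 for image, 1 for video), and that an `inputfile` of `0` selects the camera.

```cpp
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper type; the real samples may parse arguments differently.
struct SampleArgs {
    std::string model_name;
    std::string backend;
    bool is_video = false;    // type: 0 = image, 1 = video
    bool use_camera = false;  // inputfile "0" selects the camera
    std::string input_file;
};

// Parse "model_name backend type inputfile" (program name excluded).
inline SampleArgs parse_sample_args(const std::vector<std::string>& args) {
    if (args.size() != 4) {
        throw std::invalid_argument("usage: model_name backend type inputfile");
    }
    if (args[2] != "0" && args[2] != "1") {
        throw std::invalid_argument("type must be 0 (image) or 1 (video)");
    }
    SampleArgs out;
    out.model_name = args[0];
    out.backend = args[1];
    out.is_video = (args[2] == "1");
    out.use_camera = (args[3] == "0");
    out.input_file = args[3];
    return out;
}
```

In a real `main`, the vector would be built from `argv + 1` up to `argv + argc`.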

Face landmarks

```bash
./build/samples/FaceDetectWithLandmark model_name backend pfpld backend type inputfile
```

- model_name
  - scrfd_10g_kps
  - scrfd_2.5g_kps
  - scrfd_500m_kps
- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

Face alignment

```bash
./build/samples/FaceDetectWith3DDFA det_model_name backend tddfa_model_name backend type inputfile
```

- det_model_name
  - scrfd_10g_kps
  - scrfd_2.5g_kps
  - scrfd_500m_kps
- tddfa_model_name
  - 3ddfa_mb1_bfm_base
  - 3ddfa_mb1_bfm_dense
  - 3ddfa_mb05_bfm_base
  - 3ddfa_mb05_bfm_dense
- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

Face parsing

```bash
./build/samples/FaceParsing bisenet backend type inputfile
```

- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

Ocr

```bash
./build/samples/PPOcr ppocr_det det_backend ppocr_cls cls_backend ppocr_ret rec_backend type inputfile
```

- det_backend/cls_backend/rec_backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

YoloX

```bash
./build/samples/YoloX model_name backend type inputfile
```

- model_name
  - yolox_tiny
  - yolox_nano
  - yolox_s
  - yolox_m
  - yolox_l
  - yolox_x
  - yolox_darknet
- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

YoloV7

```bash
./build/samples/YoloV7 model_name backend type inputfile
```

- model_name
  - yolov7_tiny
  - yolov7_tiny_grid
  - yolov7
  - yolov7_grid
  - yolov7x
  - yolov7x_grid
  - yolov7_d6_grid
  - yolov7_e6_grid
- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

YoloV8

```bash
./build/samples/YoloV8 model_name backend type inputfile
```

- model_name
  - yolov8n
  - yolov8s
  - yolov8m
  - yolov8l
  - yolov8x
- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

MobileVit

```bash
./build/samples/MobileViT model_name backend inputfile
```

- model_name
  - mobilevit_xxs
  - mobilevit_s
- backend
  - ONNX
  - MNN
  - OpenVINO

MoveNet

```bash
./build/samples/Movenet movenet backend type inputfile
```

- backend
  - ONNX
  - MNN
  - NCNN
  - OpenVINO
  - TNN
  - PaddleLite
- type
  - 0 - image
  - 1 - video
- inputfile: 0 means the camera

MobileStyleGan

```bash
./build/samples/MobileStyleGan mobilestylegan_mappingnetwork map_backend mobilestylegan_synthesisnetwork syn_backend
```

- map_backend/syn_backend
  - ONNX
  - MNN
  - OpenVINO

AnimeGan

```bash
./build/samples/AnimeGan model_name backend 0 inputfile
```

- model_name
  - animeganv2_celeba_distill
  - animeganv2_celeba_distill_dynamic
  - animeganv2_face_paint_v1
  - animeganv2_face_paint_v1_dynamic
  - animeganv2_face_paint_v2
  - animeganv2_face_paint_v2_dynamic
  - animeganv2_paprika
  - animeganv2_paprika_dynamic
- backend
  - ONNX
  - MNN
  - OpenVINO

Pitfalls

- Android-rtti

Q:

```
XXXX/3rdparty/yaml-cpp/depthguard.h:54:9: error: cannot use 'throw' with exceptions disabled
In file included from XXXX/opencv/native/jni/include/opencv2/opencv.hpp:65:
In file included from XXXX/opencv/native/jni/include/opencv2/flann.hpp:48:
In file included from XXXX/opencv/native/jni/include/opencv2/flann/flann_base.hpp:41:
In file included from XXXX/opencv/native/jni/include/opencv2/flann/params.h:35:
XXXX/opencv/native/jni/include/opencv2/flann/any.h:60:63: error: use of typeid requires -frtti
```

A: In app -> build.gradle, add "-fexceptions":

```gradle
externalNativeBuild {
    ndkBuild {
        cppFlags "-std=c++11", "-fexceptions"
        arguments "APP_OPTIM=release", "NDK_DEBUG=0"
        abiFilters "arm64-v8a"
    }
}
```

If you compile with ncnn, turn off ncnn's options that disable RTTI and exceptions (NCNN_DISABLE_RTTI=OFF, NCNN_DISABLE_EXCEPTION=OFF), so that both stay enabled:

```bash
cmake -DCMAKE_TOOLCHAIN_FILE=../../android-ndk-r25c/build/cmake/android.toolchain.cmake -DANDROID_ABI="arm64-v8a" -DANDROID_PLATFORM=android-24 -DNCNN_SHARED_LIB=ON -DANDROID_ARM_NEON=ON -DNCNN_DISABLE_EXCEPTION=OFF -DNCNN_DISABLE_RTTI=OFF ..
```

Reference: issues/3231

- Android-mnn

Q:

```
I/MNNJNI: Can't Find type=3 backend, use 0 instead
```

A: When loading the shared libraries from Java, call System.load("libMNN_CL.so") explicitly. Linking your own .so against libMNN_CL in CMakeLists.txt does not seem to work.

```kotlin
init {
    System.loadLibrary("aidb")
    System.loadLibrary("MNN")
    try {
        System.loadLibrary("MNN_CL")
        System.loadLibrary("MNN_Express")
        System.loadLibrary("MNN_Vulkan")
    } catch (ce: Throwable) {
        Log.w("MNNJNI", "load MNN GPU so exception=%s", ce)
    }
    System.loadLibrary("mnncore")
}
```

- Android Studio

Q:

```
Out of memory:Java heap space
```

A: In gradle.properties, set:

```
org.gradle.jvmargs=-Xmx4g -Dfile.encoding=UTF-8
```

- Android paddle-lite

Q: because kernel for 'calib' is not supported by Paddle-Lite.

A: Use the library build tagged fp16.

Q: kernel for 'conv2d' is not supported by Paddle-lite.

A: Convert the model with --valid_targets=arm, enable fp16, and make sure the opt tool and the library versions match.

- Android OpenVINO

Q: How to deploy models on Android with OpenVINO (reference).

A:

- step1
  - Build the openvino library. Here are my compilation instructions:

    ```bash
    cmake -DCMAKE_BUILD_TYPE=Release -DCMAKE_TOOLCHAIN_FILE=android-ndk-r25c/build/cmake/android.toolchain.cmake -DANDROID_ABI=arm64-v8a -DANDROID_PLATFORM=30 -DANDROID_STL=c++_shared -DENABLE_SAMPLES=OFF -DENABLE_OPENCV=OFF -DENABLE_CLDNN=OFF -DENABLE_VPU=OFF -DENABLE_GNA=OFF -DENABLE_MYRIAD=OFF -DENABLE_TESTS=OFF -DENABLE_GAPI_TESTS=OFF -DENABLE_BEH_TESTS=OFF ..
    ```
- step2
  - Put the openvino libraries (*.so) into assets (only the plugins you need).
- step3
  - If your device is not rooted, put libc++.so and libc++_shared.so into assets.

Q:

```
dlopen failed: library "libc++_shared.so" not found
```

A: In CMakeLists.txt:

```cmake
add_library(libc++_shared STATIC IMPORTED)
set_target_properties(libc++_shared PROPERTIES IMPORTED_LOCATION ${CMAKE_CURRENT_LIST_DIR}/../libs/android/opencv/native/libs/${ANDROID_ABI}/libc++_shared.so)
```

Q:

```
java.lang.UnsatisfiedLinkError: dlopen failed: cannot locate symbol "__emutls_get_address" referenced by "/data/app/~~DMBfRqeqFvKzb9yUIkUZiQ==/com.hulk.aidb_demo-HrziaiyGs2adLTT-aQqemg==/lib/arm64/libopenvino_arm_cpu_plugin.so"...
```

A: Build the openvino arm library and put the *.so files in app/libs/${ANDROID_ABI}/ (you need to add jniLibs.srcDirs = ['libs'] in build.gradle).

Q:

```
library "/system/lib64/libc++.so" ("/system/lib64/libc++.so") needed or dlopened by "/apex/com.android.art/lib64/libnativeloader.so" is not accessible for the namespace: [name="classloader-namespace", ld_library_paths="", default_library_paths="/data/app/~~IWBQrjWXHt7o71mstUGRHA==/com.hulk.aidb_demo-cfq3aSk8DN62UDtoKV4Vfg==/lib/arm64:/data/app/~~IWBQrjWXHt7o71mstUGRHA==/com.hulk.aidb_demo-cfq3aSk8DN62UDtoKV4Vfg==/base.apk!/lib/arm64-v8a", permitted_paths="/data:/mnt/expand:/data/data/com.hulk.aidb_demo"]
```

A: Put libc++.so in app/libs/${ANDROID_ABI}/ of the Android Studio project (you need to add jniLibs.srcDirs = ['libs'] in build.gradle).

Feel free to dive in! Open an issue or submit PRs.

AiDB follows the Contributor Covenant Code of Conduct.

This project exists thanks to all the people who contribute.

MIT © Hulk Wang