FastALPR is a high-performance, customizable Automatic License Plate Recognition (ALPR) system. We offer fast and efficient ONNX models by default, but you can easily swap in your own models if needed.

For Optical Character Recognition (OCR), we use fast-plate-ocr by default, and for license plate detection, we use open-image-models. However, you can integrate any OCR or detection model of your choice.

- ✨ Features

- 📦 Installation

- 🚀 Quick Start

- 🛠️ Customization and Flexibility

- 📖 Documentation

- 🤝 Contributing

- 🙏 Acknowledgements

- 📫 Contact

- High Accuracy: Uses advanced models for precise license plate detection and OCR.

- Customizable: Easily switch out detection and OCR models.

- Easy to Use: Quick setup with a simple API.

- Out-of-the-Box Models: Includes ready-to-use detection and OCR models.

- Fast Performance: Optimized with ONNX Runtime for speed.
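The "Customizable" point depends on a two-stage design. First, a detector finds plate bounding boxes. Next, each box is cropped. Finally, an OCR model reads the crop; the custom OCR example in this README receives such a `cropped_plate`. The sketch below shows that flow in plain Python. `PlateDetector`, `PlateReader`, `run_pipeline` and the toy stand-ins are hypothetical names, not FastALPR's internal API. Images are plain nested lists so the sketch needs no dependencies.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol

Image = list[list[int]]  # hypothetical toy grayscale image: rows of pixel values


@dataclass
class Box:
    x1: int
    y1: int
    x2: int  # exclusive
    y2: int  # exclusive


@dataclass
class Reading:
    box: Box
    text: str
    confidence: float


class PlateDetector(Protocol):
    def detect(self, image: Image) -> list[Box]: ...


class PlateReader(Protocol):
    def read(self, crop: Image) -> tuple[str, float] | None: ...


def crop(image: Image, box: Box) -> Image:
    """Return the pixels inside `box` (x2 and y2 are exclusive)."""
    return [row[box.x1 : box.x2] for row in image[box.y1 : box.y2]]


def run_pipeline(image: Image, detector: PlateDetector, reader: PlateReader) -> list[Reading]:
    """Detect plates, crop each box, and run OCR on every crop."""
    readings: list[Reading] = []
    for box in detector.detect(image):
        result = reader.read(crop(image, box))
        if result is not None:  # the reader may decline an unreadable crop
            text, confidence = result
            readings.append(Reading(box, text, confidence))
    return readings


# Toy stand-ins (hypothetical) so the sketch runs without any model weights.
class FixedBoxDetector:
    def detect(self, image: Image) -> list[Box]:
        return [Box(1, 1, 3, 3)]


class PixelCountReader:
    def read(self, crop: Image) -> tuple[str, float] | None:
        pixels = [p for row in crop for p in row]
        return (f"PIX{len(pixels)}", 0.9) if pixels else None


image = [
    [0, 0, 0, 0],
    [0, 5, 5, 0],
    [0, 5, 5, 0],
    [0, 0, 0, 0],
]
for reading in run_pipeline(image, FixedBoxDetector(), PixelCountReader()):
    print(reading.text, reading.confidence, reading.box)
```

In this sketch, swapping a model means passing another object with the same method. FastALPR's own base classes, such as `BaseOCR`, define the library's actual contract.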

```shell
pip install fast-alpr
```

Tip: Try fast-plate-ocr pre-trained models in Hugging Face Spaces.

Here's how to get started with FastALPR:

from fast_alpr import ALPR

# You can also initialize the ALPR with custom plate detection and OCR models.

alpr = ALPR(

detector_model="yolo-v9-t-384-license-plate-end2end",

ocr_model="global-plates-mobile-vit-v2-model",

)

# The "assets/test_image.png" can be found in repo root dit

alpr_results = alpr.predict("assets/test_image.png")

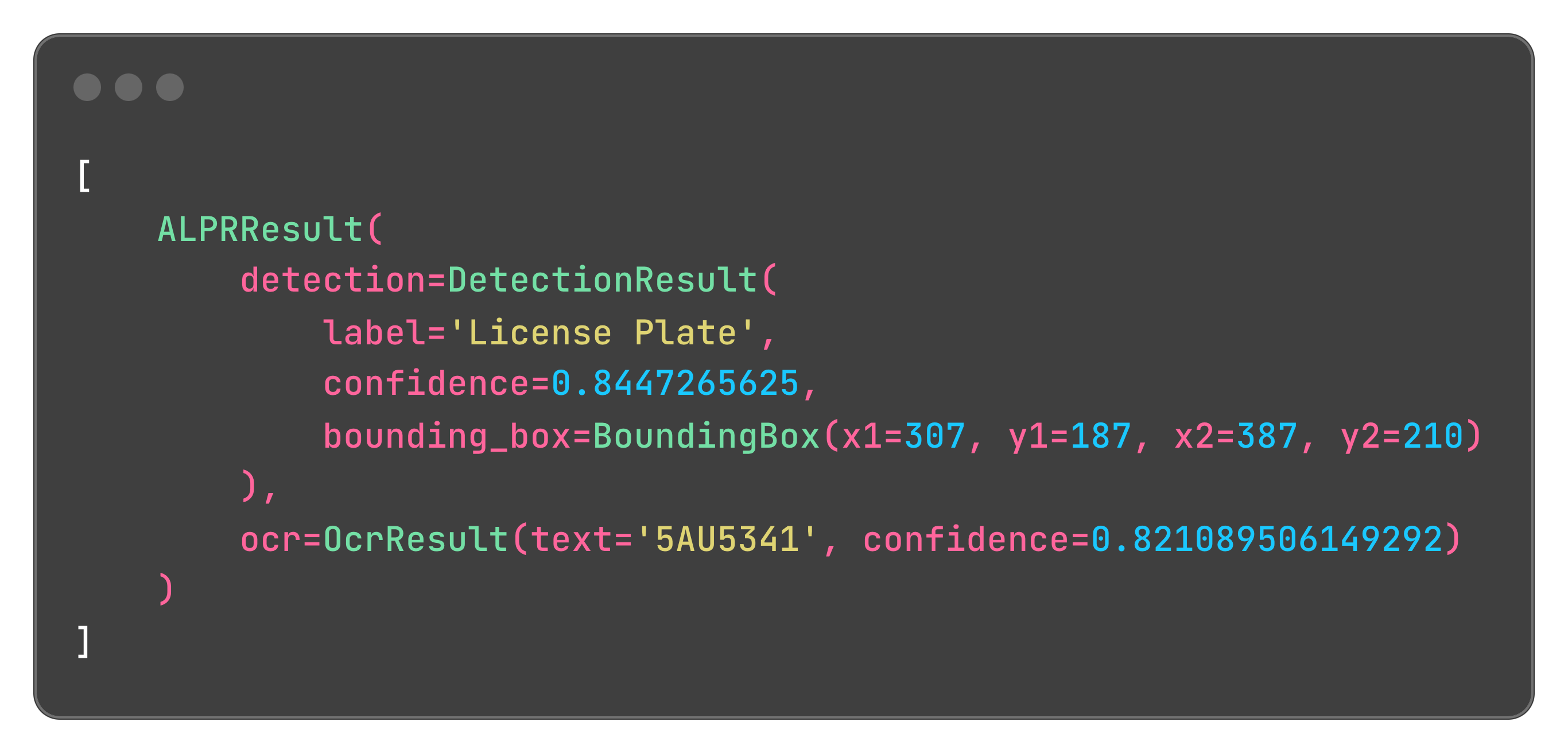

print(alpr_results)Output:
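A common next step is to keep only confident reads. The helper below is a minimal sketch built on one assumption: each item in `alpr_results` has an `ocr` attribute that is either `None` or an object with `text` and `confidence`, like the `OcrResult` built in the custom OCR example. `confident_plates` is a hypothetical name, not part of FastALPR. The sketch also treats a list of confidences as per-character scores and averages them; this defensive choice is an assumption, not documented behavior.

```python
from __future__ import annotations

from collections.abc import Iterable
from statistics import mean
from types import SimpleNamespace
from typing import Any


def confident_plates(results: Iterable[Any], min_confidence: float = 0.8) -> list[str]:
    """Return the plate strings whose OCR confidence is at least `min_confidence`.

    Assumption: each result has an `ocr` attribute that is None or an object
    with `text` and `confidence` fields (like OcrResult).
    """
    plates: list[str] = []
    for result in results:
        ocr = getattr(result, "ocr", None)
        if ocr is None:
            continue  # plate detected but not read
        confidence = ocr.confidence
        if isinstance(confidence, (list, tuple)):
            # Assumed per-character scores: average them (empty counts as 0).
            confidence = mean(confidence) if confidence else 0.0
        if confidence >= min_confidence:
            plates.append(ocr.text)
    return plates


# Stand-in results shaped like the assumption above, so the sketch runs offline.
fake_results = [
    SimpleNamespace(ocr=SimpleNamespace(text="AB123CD", confidence=0.95)),
    SimpleNamespace(ocr=None),
    SimpleNamespace(ocr=SimpleNamespace(text="XY9", confidence=0.42)),
]
print(confident_plates(fake_results))
```

With the quick-start objects, `confident_plates(alpr_results, min_confidence=0.9)` would keep only high-confidence reads, provided the real result objects match the assumed shape.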

You can also draw the predictions directly on the image:

```python
import cv2

from fast_alpr import ALPR

# Initialize the ALPR
alpr = ALPR(
    detector_model="yolo-v9-t-384-license-plate-end2end",
    ocr_model="global-plates-mobile-vit-v2-model",
)

# Load the image
image_path = "assets/test_image.png"
frame = cv2.imread(image_path)

# Draw predictions on the image
annotated_frame = alpr.draw_predictions(frame)

# Display the result
cv2.imshow("ALPR Result", annotated_frame)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

FastALPR is designed to be flexible: you can replace the detector and OCR models to suit your requirements. For example, to use Tesseract OCR instead of the default OCR model, subclass `BaseOCR` and pass an instance to `ALPR`:

```python
import re
from statistics import mean

import numpy as np
import pytesseract

from fast_alpr.alpr import ALPR, BaseOCR, OcrResult


class PytesseractOCR(BaseOCR):
    def __init__(self) -> None:
        """
        Init PytesseractOCR.
        """

    def predict(self, cropped_plate: np.ndarray) -> OcrResult | None:
        if cropped_plate is None:
            return None
        # You can change 'eng' to the appropriate language code as needed
        data = pytesseract.image_to_data(
            cropped_plate,
            lang="eng",
            config="--oem 3 --psm 6",
            output_type=pytesseract.Output.DICT,
        )
        plate_text = " ".join(data["text"]).strip()
        plate_text = re.sub(r"[^A-Za-z0-9]", "", plate_text)
        # Ignore boxes without a valid confidence (Tesseract reports -1 for them)
        confidences = [float(conf) for conf in data["conf"] if float(conf) > 0]
        if not plate_text or not confidences:
            return None  # nothing readable; also avoids mean() on an empty list
        avg_confidence = mean(confidences) / 100.0
        return OcrResult(text=plate_text, confidence=avg_confidence)


alpr = ALPR(detector_model="yolo-v9-t-384-license-plate-end2end", ocr=PytesseractOCR())
alpr_results = alpr.predict("assets/test_image.png")
print(alpr_results)
```

Tip: See the docs for more examples!
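The text-and-confidence step of `PytesseractOCR.predict` can be moved into a pure function. That lets you unit-test it without Tesseract installed. The sketch below assumes the `Output.DICT` layout, where `text` and `conf` are parallel lists. Unlike the class above, it counts a confidence only when its box also holds non-blank text. `aggregate_tesseract_words` is a hypothetical helper name.

```python
from __future__ import annotations

import re
from statistics import mean


def aggregate_tesseract_words(data: dict[str, list]) -> tuple[str, float] | None:
    """Collapse image_to_data(..., output_type=Output.DICT) output into one
    alphanumeric plate string and a 0-1 confidence.

    Boxes with a non-positive confidence (Tesseract uses -1 for non-word
    entries) or blank text are skipped. Returns None if nothing usable remains.
    """
    words: list[str] = []
    confidences: list[float] = []
    for text, conf in zip(data["text"], data["conf"]):
        conf = float(conf)  # float() accepts both numeric and string values
        if conf > 0 and text.strip():
            words.append(text)
            confidences.append(conf)
    plate_text = re.sub(r"[^A-Za-z0-9]", "", "".join(words))
    if not plate_text:
        return None
    return plate_text, mean(confidences) / 100.0


sample = {"text": ["", "AB", "12-3", " "], "conf": [-1, 90, "80", -1]}
print(aggregate_tesseract_words(sample))
```

Inside `predict`, you could pass the output of `pytesseract.image_to_data(...)` to this helper. When the helper returns a non-`None` value, unpack it into `OcrResult(text=..., confidence=...)`.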

Comprehensive documentation is available here, including detailed API references and additional examples.

Contributions to the repo are greatly appreciated. Whether it's bug fixes, feature enhancements, or new models, your contributions are warmly welcomed.

To start contributing or to begin development, you can follow these steps:

- Clone the repo:

  ```shell
  git clone https://github.com/ankandrew/fast-alpr.git
  ```

- Install all dependencies using Poetry:

  ```shell
  poetry install --all-extras
  ```

- To ensure your changes pass linting and tests before submitting a PR, run:

  ```shell
  make checks
  ```

- fast-plate-ocr for default OCR models.

- open-image-models for default plate detection models.

For questions or suggestions, feel free to open an issue or reach out through social networks.