I have always had a fascination with image processing algorithms. Growing up, my family did not have the most powerful computer of its generation. When everyone else was running Windows 98 with the latest Internet Explorer, my family was still on Windows 3.1 and Netscape Navigator 3.

It sucked. My friends were playing the latest and greatest online games like Diablo 2 and Half-Life, while the best I had was a bunch of edutainment games, a 2D Rogue-like, and a Gameboy emulator with a few ROMs. Up until I was able to build my own computer, I had always used underpowered machines.

I remember our first family machine, an Intel 486 DX4 100 MHz system with a VLB SVGA display adapter. It was capable of displaying 16-bit color, but only at lower resolutions. We ran our computer on a 14" CRT, displaying 256 colors. This system served as the family internet browsing machine up until 2001, when sharing digital photos over the web was starting to become more popular.

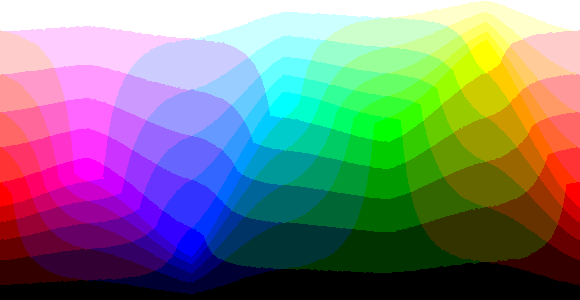

My monitor was showing colors from here:

- 8-bit color depth. Source: https://www.cambridgeincolour.com/tutorials/bit-depth.htm

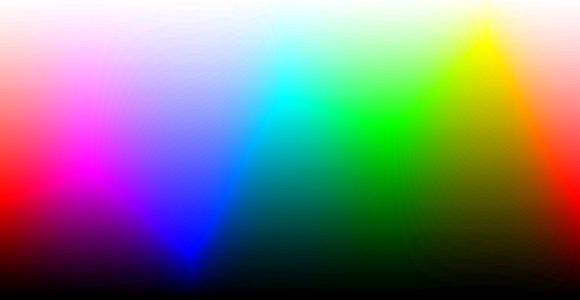

While the rest of the world was seeing colors in full 16-bit ("High Color") glory!

- 16-bit color depth. Source: https://www.cambridgeincolour.com/tutorials/bit-depth.htm

One of my earliest memories of browsing the internet around this time is that photographs looked strange. A lot of the time, they looked something like this:

Even my 10-year-old self knew that this was not how images were supposed to look! Occasionally, I would come across images that looked like this on the same monitor!

Wow! Much better! How could that be?

It turns out that the latter had used dithering. Dithering deliberately introduces noise into an image with a limited color space so that neighboring pixels of different available colors blend, to the eye, into shades the palette cannot represent directly. It is a form of error distribution: the difference between each pixel's original color and the nearest available color is spread across nearby pixels instead of being thrown away. If an image is not adjusted this way, then reducing it to a limited set of colors produces color banding.
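To make the idea of error distribution concrete, here is a minimal sketch on a single row of grayscale pixels. It is not necessarily the algorithm used on the images I saw back then; it is a simple one-dimensional error diffusion, and the names `quantize`, `quantize_row`, and `dither_row` are made up for this illustration.

```python
def quantize(value, levels):
    """Snap a 0-255 intensity to the nearest of `levels` evenly spaced values."""
    step = 255 / (levels - 1)
    return round(value / step) * step


def quantize_row(row, levels):
    """Reduce colors with no dithering: each pixel is rounded independently."""
    return [quantize(v, levels) for v in row]


def dither_row(row, levels):
    """Reduce colors while carrying each pixel's rounding error to the next pixel."""
    out = []
    error = 0.0
    for v in row:
        target = v + error                       # original value plus inherited error
        clamped = min(255.0, max(0.0, target))   # keep the value in range before rounding
        q = quantize(clamped, levels)
        out.append(q)
        error = target - q                       # whatever we could not represent moves on
    return out


# A smooth 16-pixel gradient from black to white, reduced to 2 levels.
gradient = [i * 255 / 15 for i in range(16)]
banded = quantize_row(gradient, 2)
dithered = dither_row(gradient, 2)

print("banded:  ", "".join("#" if v else "." for v in banded))
print("dithered:", "".join("#" if v else "." for v in dithered))
```

The undithered row flips from dark to light in one hard step, which is the banding. The dithered row uses the same two values, but mixes them so that the density of light pixels rises gradually along the row.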

Why would we want to limit the colors in the first place? Well, the most obvious benefit of reducing the color space of an image is that the data can be represented with fewer bits. Less color information means fewer bits needed, which ultimately leads to a smaller file size.

This can result in:

- Being able to increase the spatial resolution for the image (the resolution of the image in pixels, $$W \times H$$).
- Being able to increase the temporal resolution for a video due to having a smaller file size per frame (longer running time for a movie).

Depending on the file format chosen, the limited color palette can be stored as a color look-up table, or CLUT, so that each pixel becomes a single index into that table.
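As a rough sketch of how a look-up table works, the snippet below maps RGB pixels to indices into a tiny palette and back again. The 4-entry `PALETTE` and the helper names are assumptions for illustration only; a real 8-bit image would carry up to 256 entries, and actual file formats each define their own table layout.

```python
# Hypothetical 4-entry palette of (R, G, B) colors.
PALETTE = [
    (0, 0, 0),        # 0: black
    (255, 0, 0),      # 1: red
    (0, 255, 0),      # 2: green
    (255, 255, 255),  # 3: white
]


def nearest_index(color, palette):
    """Return the index of the palette entry closest to `color` (squared RGB distance)."""
    return min(
        range(len(palette)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(color, palette[i])),
    )


def to_indexed(pixels, palette):
    """Replace each RGB pixel with a single index into the palette."""
    return [nearest_index(p, palette) for p in pixels]


def to_rgb(indices, palette):
    """Look each index up in the palette to recover displayable colors."""
    return [palette[i] for i in indices]


pixels = [(250, 10, 5), (3, 2, 1), (240, 250, 245), (10, 200, 30)]
indices = to_indexed(pixels, PALETTE)
print(indices)                   # one small integer per pixel
print(to_rgb(indices, PALETTE))  # the colors the display would actually show
```

Each pixel now costs one index instead of three color channels, at the price of storing the table once and losing any color that is not in it.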

Storing less color data did more than shrink files; it also let operating systems make the best possible use of display memory. This was especially handy in the days when video memory was scarce. My first graphics adapter only had 1 MB of display memory. Displaying 256 colors (one byte per pixel) at 800 by 600 resolution would have already occupied about 480 KB of it (800 × 600 × 1 byte = 480,000 bytes).
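The arithmetic is easy to check. The sketch below assumes the simplest possible model: one tightly packed frame of pixels and nothing else, ignoring the palette, row padding, and any memory the card reserves for other purposes. The function name `framebuffer_bytes` and the choice to treat 1 MB as 1,048,576 bytes are mine.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Bytes needed to hold one full screen of tightly packed pixels."""
    return width * height * bits_per_pixel // 8


VIDEO_MEMORY = 1024 * 1024  # a 1 MB card, taken here as 1,048,576 bytes

for width, height in [(640, 480), (800, 600), (1024, 768)]:
    for bpp in (8, 16, 24):
        size = framebuffer_bytes(width, height, bpp)
        fits = "fits" if size <= VIDEO_MEMORY else "too big"
        print(f"{width}x{height} @ {bpp}-bit: {size:>9,} bytes ({fits})")
```

Doubling the bit depth doubles the memory needed for the same resolution, which is why higher color depths were only on offer at smaller screen sizes.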