diff --git a/00-course-setup/README.md b/00-course-setup/README.md

index 0fc05eb11..b67aa1303 100644

--- a/00-course-setup/README.md

+++ b/00-course-setup/README.md

@@ -1,67 +1,65 @@

-# Getting Started with this course

+# Getting Started with this course

-We are very excited for you to start this course and see what you get inspired to build with Generative AI!

+We are very excited for you to start this course and see what you get inspired to build with Generative AI!

-To make your time successful, we have created this page that outlines any setup steps, technical requirements, and how to get help when you need it.

+To make your time successful, we have created this page that outlines any setup steps, technical requirements, and how to get help when you need it.

+## Setup Steps

-## Setup Steps

-

+To start taking this course, you will need to complete the following steps.

### 1. Fork this Repo

-[Fork this entire repo](https://github.com/microsoft/generative-ai-for-beginners/fork) to your own GitHub account to be able to change any code and complete the challenges. You can also [star (🌟) this repo](https://docs.github.com/en/get-started/exploring-projects-on-github/saving-repositories-with-stars) to find it and related repos easier.

-

+[Fork this entire repo](https://github.com/microsoft/generative-ai-for-beginners/fork) to your own GitHub account to be able to change any code and complete the challenges. You can also [star (🌟) this repo](https://docs.github.com/en/get-started/exploring-projects-on-github/saving-repositories-with-stars) to find it and related repos easier.

-### 2. Create a Codespace

-To avoid any dependency issues when running the code, we recommend running this course in a GitHub codespace.

+### 2. Create a codespace

-This can be created by selecting the `Code` option on your forked version of this repo and selecting the `Codespaces` option.

+To avoid any dependency issues when running the code, we recommend running this course in a GitHub codespace.

-

+This can be created by selecting the `Code` option on your forked version of this repo and selecting the **Codespaces** option.

+

-### 3. Storing Your API Keys

-Keeping your API keys safe and secure is important when building any type of application. We encourage you not to store any API keys directly in the code you are working with as committing those details to a public repository could result in unwanted costs and issues.

+### 3. Storing Your API Keys

-One of the best ways to keep your API keys secure when using GitHub Codespaces is by using Codespace Secrets. Please follow this guide on how to [manage secrets for your codespaces](https://docs.github.com/en/codespaces/managing-your-codespaces/managing-secrets-for-your-codespaces).

+Keeping your API keys safe and secure is important when building any type of application. We encourage you not to store any API keys directly in the code you are working with as committing those details to a public repository could result in unwanted costs and issues.

+One of the best ways to keep your API keys secure when using GitHub Codespaces is by using Codespace Secrets. Please follow this guide on how to [manage secrets for your codespaces](https://docs.github.com/en/codespaces/managing-your-codespaces/managing-secrets-for-your-codespaces).
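
As a minimal sketch of why secrets keep keys out of your code: Codespaces secrets are exposed to the codespace as environment variables, so a script can read the key at runtime instead of hard-coding it. The variable name `AZURE_OPENAI_API_KEY` and the helper `load_api_key` are illustrative assumptions, not names defined by this course.

```python
import os


def load_api_key(env=None, name="AZURE_OPENAI_API_KEY"):
    """Return the API key stored under `name`, or raise a clear error.

    `name` is a hypothetical secret name; use whatever name you chose
    when creating the secret.
    """
    # Default to the real process environment; a plain dict can be
    # passed in instead, which makes the function easy to test.
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; add it as a Codespaces secret.")
    return key
```

Failing early with a clear message makes a missing secret easier to diagnose than an authentication error later on.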

-## Lessons and Technical Requirements

+## Lessons and Technical Requirements

-The course has 6 concept lessons and 6 coding lessons.

+The course has 6 concept lessons and 6 coding lessons.

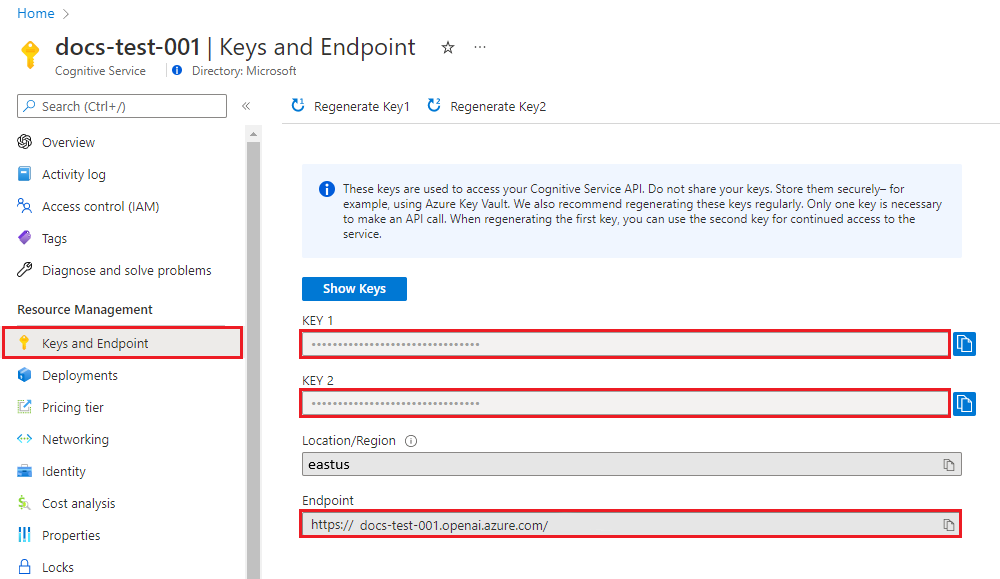

-For the coding lessons, we are using the Azure OpenAI Service. You will need access to the Azure OpenAI service and an API key to run this code. You can apply to get access by [completing this application](https://customervoice.microsoft.com/Pages/ResponsePage.aspx?id=v4j5cvGGr0GRqy180BHbR7en2Ais5pxKtso_Pz4b1_xUOFA5Qk1UWDRBMjg0WFhPMkIzTzhKQ1dWNyQlQCN0PWcu&culture=en-us&country=us).

+For the coding lessons, we are using the Azure OpenAI Service. You will need access to the Azure OpenAI service and an API key to run this code. You can apply to get access by [completing this application](https://customervoice.microsoft.com/Pages/ResponsePage.aspx?id=v4j5cvGGr0GRqy180BHbR7en2Ais5pxKtso_Pz4b1_xUOFA5Qk1UWDRBMjg0WFhPMkIzTzhKQ1dWNyQlQCN0PWcu&culture=en-us&country=us).

While you wait for your application to be processed, each coding lesson also includes a `README.md` file where you can view the code and outputs.

-## Using the Azure OpenAI Service for the First Time

-

-If this is your first time working with the Azure OpenAI service, please follow this guide on how to [create and deploy an Azure OpenAI Service resource.](https://learn.microsoft.com/en-us/azure/ai-services/openai/how-to/create-resource?pivots=web-portal)

+## Using the Azure OpenAI Service for the First Time

-## Meet Other Learners

+If this is your first time working with the Azure OpenAI service, please follow this guide on how to [create and deploy an Azure OpenAI Service resource.](https://learn.microsoft.com/azure/ai-services/openai/how-to/create-resource?pivots=web-portal)

-We have created channels in our official [AI Community Discord server](https://aka.ms/genai-discord) for meeting other learners. This is a great way to network with other like-minded entrepreneurs, builders, students, and anyone looking to level up in Generative AI.

+## Meet Other Learners

-[](https://aka.ms/genai-discord)

+We have created channels in our official [AI Community Discord server](https://aka.ms/genai-discord) for meeting other learners. This is a great way to network with other like-minded entrepreneurs, builders, students, and anyone looking to level up in Generative AI.

-The project team will also be on this Discord server to help any learners.

+[](https://aka.ms/genai-discord)

+The project team will also be on this Discord server to help any learners.

-## Contribute

+## Contribute

This course is an open-source initiative. If you see areas of improvement or issues, please create a [Pull Request](https://github.com/microsoft/generative-ai-for-beginners/pulls) or log a [GitHub issue](https://github.com/microsoft/generative-ai-for-beginners/issues).

-The project team will be tracking all contributions and contributing to open source is an amazing way to build your career in Generative AI.

+The project team will be tracking all contributions and contributing to open source is an amazing way to build your career in Generative AI.

-Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.microsoft.com.

+Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit [CLA, Contributor License Agreement website](https://cla.microsoft.com).

Important: when translating text in this repo, please ensure that you do not use machine translation. We will verify translations via the community, so please only volunteer for translations in languages where you are proficient.

When you submit a pull request, a CLA-bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., label, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repositories using our CLA.

-This project has adopted the Microsoft Open Source Code of Conduct. For more information read the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

+This project has adopted the Microsoft Open Source Code of Conduct. For more information, read the Code of Conduct FAQ or contact [opencode@microsoft.com](mailto:opencode@microsoft.com) with any additional questions or comments.

-## Let's Get Started

+## Let's Get Started

Now that you have completed the steps needed to start this course, let's get started by getting an [introduction to Generative AI and LLMs](../01-introduction-to-genai/README.md).

\ No newline at end of file

diff --git a/02-exploring-and-comparing-different-llms/README.md b/02-exploring-and-comparing-different-llms/README.md

index fab2875c7..4b8f9a6c1 100644

--- a/02-exploring-and-comparing-different-llms/README.md

+++ b/02-exploring-and-comparing-different-llms/README.md

@@ -2,7 +2,7 @@

[](https://youtu.be/J1mWzw0P74c)

-*(Click the image above to view video of this lesson)*

+> *Click the image above to view video of this lesson*

With the previous lesson, we have seen how Generative AI is changing the technology landscape, how Large Language Models (LLMs) work and how a business - like our startup - can apply them to their use cases and grow! In this chapter, we're looking to compare and contrast different types of large language models (LLMs) to understand their pros and cons.

@@ -19,42 +19,44 @@ This lesson will cover:

## Learning Goals

After completing this lesson, you will be able to:

+

- Select the right model for your use case.

- Understand how to test, iterate, and improve performance of your model.

- Know how businesses deploy models.

-## Understand different types of LLMs

+## Understand different types of LLMs

-Large Language Models (LLMs) can have multiple categorizations based on their architecture, training data, and use case. Understanding these differences will help our startup select the right model for the scenario, and understand how to test, iterate, and improve performance.

+Large Language Models (LLMs) can have multiple categorizations based on their architecture, training data, and use case. Understanding these differences will help our startup select the right model for the scenario, and understand how to test, iterate, and improve performance.

-There are many different types of LLM models, your choice of model depends on what you aim to use them for, your data, how much you're ready to pay and more.

+There are many different types of LLMs. Your choice of model depends on what you aim to use it for, your data, how much you're ready to pay, and more.

-Depending on if you aim to use the models for text, audio, video, image generation and so on, you might opt for a differen type of model.

+Depending on whether you aim to use the models for text, audio, video, image generation, and so on, you might opt for a different type of model.

-- **Audio and speech recognition**. For this purpose, Whisper-type models are a great choice as they're general-purpose and aimed at speech recognition. It's trained on diverse audio and can perform multilingual speech recognition. As an example, you can use everything from a cheaper, but capable model like curie to the more costly but performat davinci type model. Learn more about [Whisper type models here](https://platform.openai.com/docs/models/whisper).

+- **Audio and speech recognition**. For this purpose, Whisper-type models are a great choice as they're general-purpose and aimed at speech recognition. They're trained on diverse audio and can perform multilingual speech recognition. Learn more about [Whisper type models here](https://platform.openai.com/docs/models/whisper).

-- **Image generation**. For image generation, DALL-E and Midjourney are two very known choices. DALL-E is offered by Azure OpenAI. [Read more about DALL-E here](https://platform.openai.com/docs/models/dall-e) and also in Chapter 9 of this curriculum

+- **Image generation**. For image generation, DALL-E and Midjourney are two well-known choices. DALL-E is offered by Azure OpenAI. [Read more about DALL-E here](https://platform.openai.com/docs/models/dall-e) and also in Chapter 9 of this curriculum.

- **Text generation**. Most models are trained on text generation and you have a large variety of choices from GPT-3.5 to GPT-4. They come at different costs with GPT-4 being the most expensive. It's worth looking into the [Azure OpenAI playground](https://oai.azure.com/portal/playground) to evaluate which models best fit your needs in terms of capability and cost.

-Selecting a model means you get some basic capabilties, that might not be enough however. Often you have company specific data that you somehow need to tell the LLM about. There are a few different choices on how to approach that, more on that in the upcoming sections.

+Selecting a model gives you some basic capabilities, but these might not be enough. Often you have company-specific data that you somehow need to tell the LLM about. There are a few different ways to approach that, covered in the upcoming sections.

### Foundation Models versus LLMs

The term Foundation Model was [coined by Stanford researchers](https://arxiv.org/abs/2108.07258) and defined as an AI model that follows some criteria, such as:

-- **They are trained using unsupervised learning or self-supervised learning**, meaning they are trained on unlabeled multimodal data, and they do not require human annotation or labeling of data for their training process.

+

+- **They are trained using unsupervised learning or self-supervised learning**, meaning they are trained on unlabeled multi-modal data, and they do not require human annotation or labeling of data for their training process.

- **They are very large models**, based on very deep neural networks with billions of parameters.

- **They are normally intended to serve as a ‘foundation’ for other models**, meaning they can be used as a starting point for other models to be built on top of, which can be done by fine-tuning.

-

+

Image source: [Essential Guide to Foundation Models and Large Language Models | by Babar M Bhatti | Medium

-](https://thebabar.medium.com/essential-guide-to-foundation-models-and-large-language-models-27dab58f7404)

+](https://thebabar.medium.com/essential-guide-to-foundation-models-and-large-language-models-27dab58f7404)

-To further clarify this distinction, let’s take ChatGPT as an example. To build the first version of ChatGPT, a model called GPT-3.5 served as the foundation model. This means that OpenAI used some chat-specific data to create a tuned version of GPT-3.5 that was specialized in performing well in conversational scenarios, such as chatbots.

+To further clarify this distinction, let’s take ChatGPT as an example. To build the first version of ChatGPT, a model called GPT-3.5 served as the foundation model. This means that OpenAI used some chat-specific data to create a tuned version of GPT-3.5 that was specialized in performing well in conversational scenarios, such as chat bots.

-

+

Image source: [2108.07258.pdf (arxiv.org)](https://arxiv.org/pdf/2108.07258.pdf)

### Open Source versus Proprietary Models

@@ -65,7 +67,6 @@ Open-source models are models that are made available to the public and can be u

Proprietary models are models that are owned by a company and are not made available to the public. These models are often optimized for production use. However, they cannot be inspected, modified, or customized for different use cases. Plus, they are not always available for free, and may require a subscription or payment to use. Also, users do not have control over the data that is used to train the model, which means they must trust the model owner to honor its commitments on data privacy and responsible use of AI. Examples of popular proprietary models include [OpenAI models](https://platform.openai.com/docs/models/overview), [Google Bard](https://sapling.ai/llm/bard) or [Claude 2](https://www.anthropic.com/index/claude-2).

-

### Embedding versus Image generation versus Text and Code generation

LLMs can also be categorized by the output they generate.

@@ -74,7 +75,7 @@ Embeddings are a set of models that can convert text into a numerical form, call

-Image generation models are models that generate images. These models are often used for image editing, image synthesis, and image translation. Image generation models are often trained on large datasets of images, such as [LAION-5B](https://laion.ai/blog/laion-5b/), and can be used to generate new images or to edit existing images with inpainting, super-resolution, and colorization techniques. Examples include [DALLE3](https://openai.com/dall-e-3) and [Stable Diffusion models](https://github.com/Stability-AI/StableDiffusion).

+Image generation models are models that generate images. These models are often used for image editing, image synthesis, and image translation. Image generation models are often trained on large datasets of images, such as [LAION-5B](https://laion.ai/blog/laion-5b/), and can be used to generate new images or to edit existing images with inpainting, super-resolution, and colorization techniques. Examples include [DALL-E 3](https://openai.com/dall-e-3) and [Stable Diffusion models](https://github.com/Stability-AI/StableDiffusion).

@@ -84,7 +85,7 @@ Text and code generation models are models that generate text or code. These mod

### Encoder-Decoder versus Decoder-only

-To talk about the different types of architectures of LLMs, let's use an analogy.

+To talk about the different types of architectures of LLMs, let's use an analogy.

Imagine your manager gave you a task for writing a quiz for the students. You have two colleagues; one oversees creating the content and the other oversees reviewing them.

@@ -96,40 +97,41 @@ Imagine that we can have someone as well who could create and review the quiz, t

### Service versus Model

-Now, let's talk about the difference between a service and a model. A service is a product that is offered by a Cloud Service Provider, and is often a combination of models, data, and other components. A model is the core component of a service, and is often a foundation model, such as an LLM.

+Now, let's talk about the difference between a service and a model. A service is a product that is offered by a Cloud Service Provider, and is often a combination of models, data, and other components. A model is the core component of a service, and is often a foundation model, such as an LLM.

Services are often optimized for production use and are often easier to use than models, via a graphical user interface. However, services are not always available for free, and may require a subscription or payment to use, in exchange for leveraging the service owner’s equipment and resources, optimizing expenses, and scaling easily. An example of a service is [Azure OpenAI service](https://learn.microsoft.com/azure/ai-services/openai/overview), which offers a pay-as-you-go rate plan, meaning users are charged proportionally to how much they use the service. Also, Azure OpenAI service offers enterprise-grade security and a responsible AI framework on top of the models' capabilities.

Models are just the neural network, with its parameters, weights, and other components. This allows companies to run them locally; however, they would need to buy equipment, build the infrastructure to scale, and buy a license or use an open-source model. A model like LLaMA is available to use, but requires computational power to run.

-

-## How to test and iterate with different models to understand performance on Azure

+

+## How to test and iterate with different models to understand performance on Azure

Once our team has explored the current LLMs landscape and identified some good candidates for their scenarios, the next step is testing them on their data and on their workload. This is an iterative process, done by experiments and measures.

-Most of the models we mentioned in previous paragraphs (OpenAI models, open source models like Llama2, and Hugging Face transformers) are available in the [Foundation Models](https://learn.microsoft.com/en-us/azure/machine-learning/concept-foundation-models) catalog in [Azure Machine Learning studio](https://ml.azure.com/).

+Most of the models we mentioned in previous paragraphs (OpenAI models, open source models like Llama2, and Hugging Face transformers) are available in the [Foundation Models](https://learn.microsoft.com/azure/machine-learning/concept-foundation-models) catalog in [Azure Machine Learning studio](https://ml.azure.com/).

+

+[Azure Machine Learning](https://azure.microsoft.com/products/machine-learning/) is a Cloud Service designed for data scientists and ML engineers to manage the whole ML lifecycle (train, test, deploy and handle MLOps) in a single platform. The Machine Learning studio offers a graphical user interface to this service and enables the user to:

-[Azure Machine Learning](https://azure.microsoft.com/en-us/products/machine-learning/) is a Cloud Service designed for data scientists and ML engineers to manage the whole ML lifecycle (train, test, deploy and handle MLOps) in a single platform. The Machine Learning studio offers a graphical user interface to this service and enables the user to:

-- Find the Foundation Model of interest in the catalog, filtering by task, license, or name. It’s also possible to import new models that are not yet included in the catalog.

-- Review the model card, including a detailed description and code samples, and test it with the Sample Inference widget, by providing a sample prompt to test the result.

+- Find the Foundation Model of interest in the catalog, filtering by task, license, or name. It’s also possible to import new models that are not yet included in the catalog.

+- Review the model card, including a detailed description and code samples, and test it with the Sample Inference widget, by providing a sample prompt to test the result.

-

-- Evaluate model performance with objective evaluation metrics on a specific workload and a specific set of data provided in input.

+

+- Evaluate model performance with objective evaluation metrics on a specific workload and a specific set of data provided in input.

-

-- Fine-tune the model on custom training data to improve model performance in a specific workload, leveraging the experimentation and tracking capabilities of Azure Machine Learning.

+

+- Fine-tune the model on custom training data to improve model performance in a specific workload, leveraging the experimentation and tracking capabilities of Azure Machine Learning.

-

-- Deploy the original pre-trained model or the fine-tuned version to a remote real time inference or batch endpoint, to enable applications to consume it.

+

+- Deploy the original pre-trained model or the fine-tuned version to a remote real time inference or batch endpoint, to enable applications to consume it.

## Improving LLM results

+

We’ve explored with our startup team different kinds of LLMs and a Cloud Platform (Azure Machine Learning) enabling us to compare different models, evaluate them on test data, improve performance and deploy them on inference endpoints.

-But when shall they consider fine-tuning a model rather than using a pre-trained one? Are there other approaches to improve model performance on specific workloads?

-

+But when shall they consider fine-tuning a model rather than using a pre-trained one? Are there other approaches to improve model performance on specific workloads?

There are several approaches a business can use to get the results they need from an LLM, you can select different types of models with different degrees of training

@@ -141,9 +143,8 @@ deploy an LLM in production, with different levels of complexity, cost, and qual

- **Fine-tuned model**. Here, you trained the model further on your own data which leads to the model being more exact and responsive to your needs but might be costly.

-

-

+

Img source: [Four Ways that Enterprises Deploy LLMs | Fiddler AI Blog](https://www.fiddler.ai/blog/four-ways-that-enterprises-deploy-llms)

### Prompt Engineering with Context

@@ -156,7 +157,8 @@ Prompt engineering with context is the most cost-effective approach to kick-off

### Retrieval Augmented Generation (RAG)

LLMs have the limitation that they can use only the data that has been used during their training to generate an answer. This means that they don’t know anything about the facts that happened after their training process, and they cannot access non-public information (like company data).

-This can be overcome through RAG, a technique that augments prompt with external data in the form of chunks of documents, considering prompt length limits. This is supported by Vector database tools (like [Azure Vector Search](https://learn.microsoft.com/en-us/azure/search/vector-search-overview)) that retrieve the useful chunks from varied pre-defined data sources and add them to the prompt Context.

+This can be overcome through RAG, a technique that augments the prompt with external data in the form of chunks of documents, while respecting prompt length limits. This is supported by vector database tools (like [Azure Vector Search](https://learn.microsoft.com/azure/search/vector-search-overview)) that retrieve the useful chunks from varied pre-defined data sources and add them to the prompt context.

+

This technique is very helpful when a business doesn’t have enough data, time, or resources to fine-tune an LLM, but still wishes to improve performance on a specific workload and reduce the risk of hallucinations (i.e., misrepresentations of reality) or harmful content.
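
The retrieve-then-augment flow described above can be sketched in a few lines. This is a toy illustration under stated assumptions: simple word overlap stands in for the vector similarity search a real vector database would perform, and the function names, `top_k`, and `max_context_chars` are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_score(question, chunk):
    """Count shared words (a stand-in for vector similarity)."""
    return len(tokenize(question) & tokenize(chunk))


def build_prompt(question, chunks, top_k=2, max_context_chars=500):
    """Pick the most relevant chunks and prepend them to the question."""
    ranked = sorted(chunks, key=lambda c: overlap_score(question, c), reverse=True)
    selected, used = [], 0
    for chunk in ranked[:top_k]:
        # Respect the prompt length limit: stop once the budget is spent.
        if used + len(chunk) > max_context_chars:
            break
        selected.append(chunk)
        used += len(chunk)
    context = "\n".join(f"- {c}" for c in selected)
    return f"Context:\n{context}\n\nQuestion: {question}"


chunks = [
    "Our refund policy allows returns within 30 days.",
    "The office is closed on public holidays.",
    "Refund requests need a receipt.",
]
prompt = build_prompt("How do refund returns work?", chunks)
```

The resulting `prompt` is what would be sent to the LLM; the model itself is unchanged, only its input is enriched.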

### Fine-tuned model

@@ -164,18 +166,19 @@ This technique is very helpful when a business doesn’t have enough data, enoug

Fine-tuning is a process that leverages transfer learning to ‘adapt’ the model to a downstream task or to solve a specific problem. Unlike few-shot learning and RAG, it results in a new model being generated, with updated weights and biases. It requires a set of training examples, each consisting of a single input (the prompt) and its associated output (the completion).

This would be the preferred approach if:

-- **Using fine-tuned models**. A business would like to use fine-tuned less capable models (like embedding models) rather than high performance models, resulting in a more cost effective and fast solution.

+- **Using fine-tuned models**. A business would like to use fine-tuned, less capable models (like embedding models) rather than high-performance models, resulting in a more cost-effective and faster solution.

-- **Considering latency**. Latency is important for a specific use-case, so it’s not possible to use very long prompts or the number of examples that should be learnt from the model doesn’t fit with the prompt length limit.

+- **Considering latency**. Latency is important for a specific use case, so it’s not possible to use very long prompts, or the number of examples the model should learn from doesn’t fit within the prompt length limit.

-- **Staying up to date**. A business has a lot of high-quality data and ground truth labels and the resources required to maintain this data up to date over time.

+- **Staying up to date**. A business has a lot of high-quality data and ground truth labels, and the resources required to keep this data up to date over time.
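
To make the training-example format mentioned above concrete, here is a minimal sketch that serializes prompt/completion pairs as JSON Lines, one example per line. The field names `prompt` and `completion` mirror the wording in this lesson; the exact schema a given fine-tuning service expects is an assumption and should be checked against its documentation.

```python
import json


def to_jsonl(examples):
    """Serialize (prompt, completion) pairs as JSON Lines text."""
    lines = []
    for prompt, completion in examples:
        # Each training example needs exactly one input and one output.
        if not prompt or not completion:
            raise ValueError("each example needs a prompt and a completion")
        lines.append(json.dumps({"prompt": prompt, "completion": completion}))
    return "\n".join(lines)


# Hypothetical examples for an education-focused startup.
training_text = to_jsonl([
    ("Define photosynthesis.", "The process plants use to turn light into energy."),
    ("What is 2 + 2?", "4"),
])
```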

### Trained model

+

Training an LLM from scratch is without a doubt the most difficult and the most complex approach to adopt, requiring massive amounts of data, skilled resources, and appropriate computational power. This option should be considered only in a scenario where a business has a domain-specific use case and a large amount of domain-centric data.

## Knowledge check

-What could be a good approach to improve LLM completion results?

+What could be a good approach to improve LLM completion results?

1. Prompt engineering with context

1. RAG

@@ -185,14 +188,10 @@ A:3, if you have the time and resources and high quality data, fine-tuning is th

## 🚀 Challenge

-Read up more on how you can [use RAG](https://learn.microsoft.com/en-us/azure/search/retrieval-augmented-generation-overview) for your business.

+Read up more on how you can [use RAG](https://learn.microsoft.com/azure/search/retrieval-augmented-generation-overview) for your business.

-## Great Work, Continue Your Learning!

-

-

-Want to learn more about different Generative AI concepts? Go to the [contiuned learning page](../13-continued-learning/README.md) to find other great resources on this topic.

+## Great Work, Continue Your Learning

+Want to learn more about different Generative AI concepts? Go to the [continued learning page](../13-continued-learning/README.md) to find other great resources on this topic.

Head over to Lesson 3 where we will look at how to [build with Generative AI Responsibly](/03-using-generative-ai-responsibly/README.MD)!

-

-

diff --git a/03-using-generative-ai-responsibly/README.MD b/03-using-generative-ai-responsibly/README.MD

index 060a66e00..c4882c061 100644

--- a/03-using-generative-ai-responsibly/README.MD

+++ b/03-using-generative-ai-responsibly/README.MD

@@ -1,126 +1,116 @@

-# Using Generative AI Responsibly

+# Using Generative AI Responsibly

-[]()

+[]()

-**Video Coming Soon**

+> **Video Coming Soon**

-It's easy to be fascinated with AI and generative AI in particular, but you need to consider how you would use it responsibly. You need to consider things like how to ensure the output is fair, non-harmful and more. This chapter aims to provide you with mentioned context, what to consider, and how to take active steps to improve your AI usage.

+It's easy to be fascinated with AI and generative AI in particular, but you need to consider how you would use it responsibly. You need to consider things like how to ensure the output is fair, non-harmful and more. This chapter aims to provide you with this context, what to consider, and how to take active steps to improve your AI usage.

-## Introduction

+## Introduction

This lesson will cover:

+

- Why you should prioritize Responsible AI when building Generative AI applications.

- Core principles of Responsible AI and how they relate to Generative AI.

-- How to put these Responsible AI principles into practice through strategy and tooling.

+- How to put these Responsible AI principles into practice through strategy and tooling.

-## Learning Goals

+## Learning Goals

After completing this lesson you will know:

-- The importance of Responsible AI when building Generative AI applications.

-- When to think and apply the core principles of Responsible AI when building Generative AI applications.

-- What tools and strategies are available to you to put the concept of Responsible AI into practice.

+- The importance of Responsible AI when building Generative AI applications.

+- When to think about and apply the core principles of Responsible AI when building Generative AI applications.

+- What tools and strategies are available to you to put the concept of Responsible AI into practice.

-## Responsible AI Principles

+## Responsible AI Principles

-The excitement of Generative AI has never been higher. This excitement has brought a lot of new developers, attention, and funding to this space. While this is very positive for anyone looking to build products and companies using Generative AI, it is also important we proceed responsibly.

+The excitement of Generative AI has never been higher. This excitement has brought a lot of new developers, attention, and funding to this space. While this is very positive for anyone looking to build products and companies using Generative AI, it is also important we proceed responsibly.

Throughout this course, we are focusing on building our startup and our AI education product. We’ll use the principles of Responsible AI: Fairness, Inclusiveness, Reliability/Safety, Security & Privacy, Transparency and Accountability. With these principles, we will explore how they relate to our use of Generative AI in our products.

+## Why Should You Prioritize Responsible AI

-## Why Should You Prioritise Responsible AI

-

-When building a product, taking a human-centric approach by keeping your user's best interest in mind leads to the best results.

+When building a product, taking a human-centric approach by keeping your user's best interest in mind leads to the best results.

-The uniqueness of Generative AI is its power to create helpful answers, information, guidance, and content for users. This can be done without many manual steps which can lead to very impressive results. Without proper planning and strategies, it can also unfortunately lead to some harmful results for your users, your product, and society as a whole.

+The uniqueness of Generative AI is its power to create helpful answers, information, guidance, and content for users. This can be done without many manual steps which can lead to very impressive results. Without proper planning and strategies, it can also unfortunately lead to some harmful results for your users, your product, and society as a whole.

-Let's look at some (but not all) of these potentially harmful results:

+Let's look at some (but not all) of these potentially harmful results:

-### Hallucinations

+### Hallucinations

-Hallucinations are a term used to describe when an LLM produces content that is either completely nonsensical or something we know is factually wrong based on other sources of information.

+Hallucinations are a term used to describe when an LLM produces content that is either completely nonsensical or something we know is factually wrong based on other sources of information.

For example, suppose we build a feature for our startup that allows students to ask a model historical questions. A student asks the question `Who was the sole survivor of Titanic?`

The model produces a response like the one below:

+_11zon.webp)

-_11zon.webp)

-

-*(Source: https://flyingbisons.com)*

+> *(Source: [Flying bisons](https://flyingbisons.com))*

This is a very confident and thorough answer. Unfortunately, it is incorrect. Even with a minimal amount of research, one would discover there was more than one survivor of the Titanic disaster. For a student who is just starting to research this topic, this answer can be persuasive enough to not be questioned and treated as fact. As a consequence, the AI system can become unreliable and negatively impact the reputation of our startup.

-With each iteration of any given LLM, we have seen performance improvements around minimizing hallucinations. Even with this improvement, we as application builders and users still need to remain aware of these limitations.

+With each iteration of any given LLM, we have seen performance improvements around minimizing hallucinations. Even with this improvement, we as application builders and users still need to remain aware of these limitations.

+

+### Harmful Content

-### Harmful Content

+In the earlier section, we covered the case where an LLM produces incorrect or nonsensical responses. Another risk we need to be aware of is when a model responds with harmful content.

-We covered in the earlier section when an LLM produces incorrect or nonsensical responses. Another risk we need to be aware of is when a model responds with harmful content.

+Harmful content can be defined as:

-Harmful content can be defined as:

-- Providing instructions or encouraging self-harm or harm to certain groups

-- Hateful or demeaning content

-- Guiding planning any type of attack or violent acts

-- Providing instructions on how to find illegal content or commit illegal acts

-- Displaying sexually explicit content

+- Providing instructions or encouraging self-harm or harm to certain groups.

+- Hateful or demeaning content.

+- Guiding the planning of any type of attack or violent act.

+- Providing instructions on how to find illegal content or commit illegal acts.

+- Displaying sexually explicit content.

-For our startup, we want to make sure we have the right tools and strategies in place to prevent this type of content from being seen by students.

+For our startup, we want to make sure we have the right tools and strategies in place to prevent this type of content from being seen by students.

-### Lack of Fairness

+### Lack of Fairness

Fairness is defined as “ensuring that an AI system is free from bias and discrimination and that they treat everyone fairly and equally.” In the world of Generative AI, we want to ensure that exclusionary worldviews of marginalized groups are not reinforced by the model’s output.

These types of outputs are not only destructive to building positive product experiences for our users, but they also cause further societal harm. As application builders, we should always keep a wide and diverse user base in mind when building solutions with Generative AI.

-## How to Use Generative AI Responsibly

+## How to Use Generative AI Responsibly

-Now that we have identified the importance of Responsible Generative AI, let's look at 4 steps we can take to build our AI solutions responsibly:

+Now that we have identified the importance of Responsible Generative AI, let's look at 4 steps we can take to build our AI solutions responsibly:

-### Measure Potential Harms

-In software testing, we test the expected actions of a user on an application. Similarly, testing a diverse set of prompts users are most likely going to use is a good way to measure potential harm.

-

-Since our startup is building an education product, it would be good to prepare a list of education-related prompts. This could be to cover a certain subject, historical facts, and prompts about student life.

-

+### Measure Potential Harms

-### Mitigate Potential Harms

-It is now time to find ways where we can prevent or limit the potential harm caused by the model and its responses. We can look at this in 4 different layers:

+In software testing, we test the expected actions of a user on an application. Similarly, testing a diverse set of prompts users are most likely going to use is a good way to measure potential harm.

-

+Since our startup is building an education product, it would be good to prepare a list of education-related prompts. This could be to cover a certain subject, historical facts, and prompts about student life.
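As a rough illustration of measuring potential harm, the sketch below runs a small list of education-related prompts through a model and counts the replies that need review. Everything here is an assumption made for illustration: `fake_model` stands in for a real LLM call, and `FLAGGED_TERMS` is a hypothetical phrase list that a real project would likely replace with a content safety service.

```python
from typing import Callable

# Hypothetical education-related test prompts (illustrative only).
TEST_PROMPTS = [
    "Who was the sole survivor of Titanic?",
    "Explain photosynthesis to a ten-year-old.",
    "How do I deal with exam stress?",
]

# Hypothetical phrases that mark a reply for human review.
FLAGGED_TERMS = ["sole survivor was"]


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    if "sole survivor" in prompt:
        # Simulates a confident but incorrect (hallucinated) reply.
        return "The sole survivor was a passenger named Example Person."
    return "Here is a helpful, age-appropriate answer."


def measure_harms(model: Callable[[str], str], prompts: list[str]) -> list[dict]:
    """Run each prompt through the model and record whether the reply is flagged."""
    results = []
    for prompt in prompts:
        reply = model(prompt)
        flagged = any(term in reply.lower() for term in FLAGGED_TERMS)
        results.append({"prompt": prompt, "reply": reply, "flagged": flagged})
    return results


report = measure_harms(fake_model, TEST_PROMPTS)
flagged_count = sum(r["flagged"] for r in report)
print(f"{flagged_count} of {len(report)} replies flagged for review")
```

Keeping the prompt list in one place makes it easy to rerun the same checks whenever the model or the system prompt changes.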

-**Model**

+### Mitigate Potential Harms

-Choosing the right model for the right use case. Larger and more complex models like GPT-4 can cause more of a risk of harmful content when applied to smaller and more specific use cases. Using your training data to fine-tune also reduces the risk of harmful content.

+It is now time to find ways where we can prevent or limit the potential harm caused by the model and its responses. We can look at this in 4 different layers:

-**Safety System**

+

-A safety system is a set of tools and configurations on the platform serving the model that help mitigate harm. An example of this is the content filtering system on the Azure OpenAI service. Systems should also detect jailbreak attacks and unwanted activity like requests from bots.

+- **Model**. Choosing the right model for the right use case. Larger and more complex models like GPT-4 can cause more of a risk of harmful content when applied to smaller and more specific use cases. Using your training data to fine-tune also reduces the risk of harmful content.

-**Metaprompt**

+- **Safety System**. A safety system is a set of tools and configurations on the platform serving the model that help mitigate harm. An example of this is the content filtering system on the Azure OpenAI service. Systems should also detect jailbreak attacks and unwanted activity like requests from bots.

-Metaprompts and grounding are ways we can direct or limit the model based on certain behaviors and information. This could be using system inputs to define certain limits of the model. In addition, providing outputs that are more relevant to scope or domain of system.

+- **Metaprompt**. Metaprompts and grounding are ways we can direct or limit the model based on certain behaviors and information. This could be using system inputs to define certain limits of the model. They can also steer the model toward outputs that are more relevant to the scope or domain of the system.

It can also mean using techniques like Retrieval Augmented Generation (RAG) to have the model only pull information from a selection of trusted sources. There is a lesson later in this course for [building search applications](../08-building-search-applications/README.md).

-**User Experience**

-

-The final layer is where the user interacts directly with the model through our application’s interface in some way. In this way we can design the UI/UX to limit the user on the types of inputs they can send to the model as well as text or images displayed to the user. When deploying the AI application, we also must be transparent about what our Generative AI application can and can’t do.

+- **User Experience**. The final layer is where the user interacts directly with the model through our application’s interface in some way. In this way we can design the UI/UX to limit the user on the types of inputs they can send to the model as well as text or images displayed to the user. When deploying the AI application, we also must be transparent about what our Generative AI application can and can’t do.

We have an entire lesson dedicated to [Designing UX for AI Applications](../12-designing-ux-for-ai-applications/README.md).

-**Evaluate model**

-

-Working with LLMs can be challenging because we don’t always have control over the data the model was trained on. Regardless, we should always evaluate the model’s performance and outputs. It’s still important to measure the model’s accuracy, similarity, groundedness, and relevance of the output. This helps provide transparency and trust to stakeholders and users.

+- **Evaluate model**. Working with LLMs can be challenging because we don’t always have control over the data the model was trained on. Regardless, we should always evaluate the model’s performance and outputs. It’s still important to measure the model’s accuracy, similarity, groundedness, and relevance of the output. This helps provide transparency and trust to stakeholders and users.

### Operate a Responsible Generative AI solution

-Building an operational practice around your AI applications is the final stage. This includes partnering with other parts of our startup like Legal and Security to ensure we are compliant with all regulatory policies. Before launching, we also want to build plans around delivery, handling incidents, and rollback to prevent any harm to our users from growing.

+Building an operational practice around your AI applications is the final stage. This includes partnering with other parts of our startup like Legal and Security to ensure we are compliant with all regulatory policies. Before launching, we also want to build plans around delivery, handling incidents, and rollback to prevent any harm to our users from growing.

+## Tools

-## Tools

-

-While the work of developing Responsible AI solutions may seem like a lot, it is work well worth the effort. As the area of Generative AI grows, more tooling to help developers efficiently integrate responsibility into their workflows will mature. For example, the [Azure AI Content Safety](https://learn.microsoft.com/en-us/azure/ai-services/content-safety/overview ) can help detect harmful content and images via an API request.

+While the work of developing Responsible AI solutions may seem like a lot, it is work well worth the effort. As the area of Generative AI grows, more tooling to help developers efficiently integrate responsibility into their workflows will mature. For example, [Azure AI Content Safety](https://learn.microsoft.com/azure/ai-services/content-safety/overview) can help detect harmful content and images via an API request.

## Knowledge check

@@ -134,13 +124,10 @@ A: 2 and 3 is correct. Responsible AI helps you consider how to mitigate harmful

## 🚀 Challenge

-Read up on [Azure AI Content Saftey](https://learn.microsoft.com/en-us/azure/ai-services/content-safety/overview ) and see what you can adopt for your usage.

-

-## Great Work, Continue Your Learning!

+Read up on [Azure AI Content Safety](https://learn.microsoft.com/en-us/azure/ai-services/content-safety/overview) and see what you can adopt for your usage.

+## Great Work, Continue Your Learning

-Want to learn more about how to build with Generative AI responsibly? Go to the [contiuned learning page](../13-continued-learning/README.md) to find other great resources on this topic.

-

+Want to learn more about how to build with Generative AI responsibly? Go to the [continued learning page](../13-continued-learning/README.md) to find other great resources on this topic.

Head over to Lesson 4 where we will look at [Prompt Engineering Fundamentals](../04-prompt-engineering-fundamentals/README.md)!

-

diff --git a/04-prompt-engineering-fundamentals/README.md b/04-prompt-engineering-fundamentals/README.md

index 14cefac04..b5ee9fa1a 100644

--- a/04-prompt-engineering-fundamentals/README.md

+++ b/04-prompt-engineering-fundamentals/README.md

@@ -1,14 +1,13 @@

-# Prompt Engineering Fundamentals

+# Prompt Engineering Fundamentals

-[](https://youtu.be/r2ItK3UMVTk)

+[](https://youtu.be/r2ItK3UMVTk)

How you write your prompt to the LLM matters: a carefully crafted prompt can achieve a better result than one that isn't. But what even are these concepts, prompt and prompt engineering, and how do I improve what I send to the LLM? Questions like these are what this chapter and the upcoming chapter are looking to answer.

+_Generative AI_ is capable of creating new content (e.g., text, images, audio, code etc.) in response to user requests. It achieves this using _Large Language Models_ (LLMs) like OpenAI's GPT ("Generative Pre-trained Transformer") series that are trained for using natural language and code.

-_Generative AI_ is capable of creating new content (e.g., text, images, audio, code etc.) in response to user requests. It achieves this using _Large Language Models_ (LLMs) like OpenAI's GPT ("Generative Pre-trained Transformer") series that are trained for using natural language and code.

-

-Users can now interact with these models using familiar pardigms like chat, without needing any technical expertise or training. The models are _prompt-based_ - users send a text input (prompt) and get back the AI response (completion). They can then "chat with the AI" iteratively, in multi-turn conversations, refining their prompt till the response matches their expectations.

+Users can now interact with these models using familiar paradigms like chat, without needing any technical expertise or training. The models are _prompt-based_ - users send a text input (prompt) and get back the AI response (completion). They can then "chat with the AI" iteratively, in multi-turn conversations, refining their prompt till the response matches their expectations.

"Prompts" now become the primary _programming interface_ for generative AI apps, telling the models what to do and influencing the quality of returned responses. "Prompt Engineering" is a fast-growing field of study that focuses on the _design and optimization_ of prompts to deliver consistent and quality responses at scale.

@@ -43,10 +42,10 @@ The notebook comes with _starter_ exercises - but you are encouraged to add your

Now, let's talk about how _this topic_ relates to our startup mission to [bring AI innovation to education](https://educationblog.microsoft.com/2023/06/collaborating-to-bring-ai-innovation-to-education). We want to build AI-powered applications of _personalized learning_ - so let's think about how different users of our application might "design" prompts:

- - **Administrators** might ask the AI to _analyze curriculum data to identify gaps in coverage_. The AI can summarize results or visualize them with code.

- - **Educators** might ask the AI to _generate a lesson plan for a target audience and topic_. The AI can build the personalized plan in a specified format.

- - **Students** might ask the AI to _tutor them in a difficult subject_. The AI can now guide students with lessons, hints & examples tailored to their level.

-

+- **Administrators** might ask the AI to _analyze curriculum data to identify gaps in coverage_. The AI can summarize results or visualize them with code.

+- **Educators** might ask the AI to _generate a lesson plan for a target audience and topic_. The AI can build the personalized plan in a specified format.

+- **Students** might ask the AI to _tutor them in a difficult subject_. The AI can now guide students with lessons, hints & examples tailored to their level.

+

That's just the tip of the iceberg. Check out [Prompts For Education](https://github.com/microsoft/prompts-for-edu/tree/main) - an open-source prompts library curated by education experts - to get a broader sense of the possibilities! _Try running some of those prompts in the sandbox or using the OpenAI Playground to see what happens!_

-

-## Code Challenge

+## Assignment

Congratulations! You made it to the end of the lesson! It's time to put some of those concepts and techniques to the test with real examples!

-For our code challenge, we'll be using a Jupyter Notebook with exercises you can complete interactively. You can also extend the Notebook with your own Markdown and Code cells to explore ideas and techniques on your own.

+For our assignment, we'll be using a Jupyter Notebook with exercises you can complete interactively. You can also extend the Notebook with your own Markdown and Code cells to explore ideas and techniques on your own.

+

+### To get started, fork the repo, then

-**To get started, fork the repo, then:**

- (Recommended) Launch GitHub Codespaces

-- (Alternatively) Clone the repo to your local device and use it with Docker Desktop

+- (Alternatively) Clone the repo to your local device and use it with Docker Desktop

- (Alternatively) Open the Notebook with your preferred Notebook runtime environment.

-**Next, configure your environment variables**

+### Next, configure your environment variables

+

- Copy the `.env.copy` file in repo root to `.env` and fill in the `OPENAI_API_KEY` value. You can find your API Key in your [OpenAI Dashboard](https://beta.openai.com/account/api-keys).

-**Next, open the Jupyter Notebook**

+### Next, open the Jupyter Notebook

+

- Select the runtime kernel. If using options 1 or 2, simply select the default Python 3.10.x kernel provided by the dev container.

You're all set to run the exercises. Note that there are no _right and wrong_ answers here - just exploring options by trial-and-error and building intuition for what works for a given model and application domain.

_For this reason there are no Code Solution segments in this lesson. Instead, the Notebook will have Markdown cells titled "My Solution:" that show one example output for reference._

-

-

-## Great Work! Continue Your Learning

-

+## Great Work! Continue Your Learning

Want to learn more about different Prompt Engineering concepts? Go to the [continued learning page](../13-continued-learning/README.md) to find other great resources on this topic.

-

Head over to Lesson 5 where we will look at [advanced prompting techniques](../05-advanced-prompts/README.md)!

diff --git a/05-advanced-prompts/README.md b/05-advanced-prompts/README.md

index ac7310b9e..a86bc502c 100644

--- a/05-advanced-prompts/README.md

+++ b/05-advanced-prompts/README.md

@@ -1,12 +1,12 @@

# Creating Advanced prompts

+[](https://youtu.be/32GBH6BTWZQ)

-[](https://youtu.be/32GBH6BTWZQ)

+Let's recap some learnings from the previous chapter:

-Let's recap some learnings from the previous chapter:

-> Prompt _engineering_ is the process by which we **guide the model towards more relevant responses** by providing more useful instructions or context.

+> Prompt _engineering_ is the process by which we **guide the model towards more relevant responses** by providing more useful instructions or context.

-There's also two steps to writing prompts, constructing the prompt, by providing relevant context and the second part is *optimization*, how to gradually improve the prompt.

+There are also two steps to writing prompts: constructing the prompt by providing relevant context, and _optimization_, which means gradually improving the prompt.

At this point, we have some basic understanding of how to write prompts, but we need to go deeper. In this chapter, you will go from trying out various prompts to understanding why one prompt is better than another. You will learn how to construct prompts following some basic techniques that can be applied to any LLM.

@@ -54,7 +54,7 @@ So far, we've seen a simple prompt example, but generative AI is capable of much

### Techniques for prompting

-First, we need to understand that prompting is an *emergent* property of an LLM meaning that this is not a feature that is built into the model but rather something we discover as we use the model.

+First, we need to understand that prompting is an _emergent_ property of an LLM meaning that this is not a feature that is built into the model but rather something we discover as we use the model.

There are some basic techniques that we can use to prompt an LLM. Let's explore them.

@@ -83,9 +83,9 @@ LLM answers with 5, which is incorrect. Correct answer is 1 apple, given the cal

So how can we teach the LLM to do this correctly?

-Let's try chain-of-thought. Applying chain-of-thought means:

+Let's try chain-of-thought. Applying chain-of-thought means:

-1. Give the LLM a similar example.

+1. Give the LLM a similar example.

1. Show the calculation, and how to calculate it correctly.

1. Provide the original prompt.
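The three steps above can be sketched as a small prompt builder. This is a minimal sketch, not a definitive implementation: the function name `build_chain_of_thought_prompt` and the apple questions are illustrative assumptions, and the resulting string would still need to be sent to an LLM of your choice.

```python
def build_chain_of_thought_prompt(example_question: str,
                                  example_reasoning: str,
                                  question: str) -> str:
    """Combine a worked example (steps 1 and 2) with the original prompt (step 3)."""
    return f"{example_question}\n{example_reasoning}\n{question}"


prompt = build_chain_of_thought_prompt(
    # Step 1: a similar example (hypothetical wording).
    example_question=(
        "Lisa has 7 apples, throws 1 apple, gives 4 apples to Bart "
        "and Bart gives one back:"
    ),
    # Step 2: the calculation, shown one operation per line.
    example_reasoning="7 - 1 = 6\n6 - 4 = 2\n2 + 1 = 3",
    # Step 3: the original prompt we actually want answered.
    question=(
        "Alice has 5 apples, throws 3 apples, gives 2 to Bob "
        "and Bob gives one back, how many apples does Alice have?"
    ),
)
print(prompt)
```

Showing the intermediate arithmetic in the example gives the model a pattern to imitate when it works through the final question.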

@@ -104,73 +104,73 @@ As you can see chain-of-thought is a very powerful technique.

### Generated knowledge

-Many times when you want to construct a prompt, you want to do so using your own company's data. You want part of the prompt to be from the company and the other part should be the actual prompt you're interested in.

+Many times when you want to construct a prompt, you want to do so using your own company's data. You want part of the prompt to be from the company and the other part should be the actual prompt you're interested in.

As an example, this is what your prompt then can look like if you're in the insurance business:

-```text

-{{company}}: {{company_name}}

-{{products}}:

-{{products_list}}

-Please suggest an insurance given the following budget and requirements:

-Budget: {{budget}}

-Requirements: {{requirements}}

-```

+ ```text

+ {{company}}: {{company_name}}

+ {{products}}:

+ {{products_list}}

+ Please suggest an insurance given the following budget and requirements:

+ Budget: {{budget}}

+ Requirements: {{requirements}}

+ ```

Above, you see how the prompt is constructed using a template. In the template there are a number of variables, denoted by `{{variable}}`, that will be replaced with actual values from a company API.

Here's an example of what the prompt could look like once the variables have been replaced by content from your company:

-```text

-Insurance company: ACME Insurance

-Insurance products (cost per month):

-- Car, cheap, 500 USD

-- Car, expensive, 1100 USD

-- Home, cheap, 600 USD

-- Home, expensive, 1200 USD

-- Life, cheap, 100 USD

-

-Please suggest an insurance given the following budget and requirements:

-Budget: $1000

-Requirements: Car, Home

-```

+ ```text

+ Insurance company: ACME Insurance

+ Insurance products (cost per month):

+ - Car, cheap, 500 USD

+ - Car, expensive, 1100 USD

+ - Home, cheap, 600 USD

+ - Home, expensive, 1200 USD

+ - Life, cheap, 100 USD

+

+ Please suggest an insurance given the following budget and requirements:

+ Budget: $1000

+ Requirements: Car, Home

+ ```
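The step from template to filled-in prompt can be sketched in a few lines of Python. This is one possible approach under stated assumptions: the `render` helper is hypothetical, the template is simplified so that only the values (not the labels) are variables, and the `values` dictionary stands in for data that would come from a company API.

```python
import re

# Simplified version of the template above; only the values are placeholders.
TEMPLATE = """Insurance company: {{company_name}}
Insurance products (cost per month):
{{products_list}}

Please suggest an insurance given the following budget and requirements:
Budget: {{budget}}
Requirements: {{requirements}}"""


def render(template: str, values: dict) -> str:
    """Replace each {{name}} placeholder with values[name].

    Raises KeyError if the template uses a name that is missing from values.
    """
    return re.sub(r"\{\{(\w+)\}\}", lambda m: str(values[m.group(1)]), template)


# Hypothetical data; in practice this would be fetched from a company API.
products = [
    ("Car", "cheap", 500),
    ("Car", "expensive", 1100),
    ("Home", "cheap", 600),
    ("Home", "expensive", 1200),
    ("Life", "cheap", 100),
]
values = {
    "company_name": "ACME Insurance",
    "products_list": "\n".join(
        f"- {kind}, {tier}, {cost} USD" for kind, tier, cost in products
    ),
    "budget": "$1000",
    "requirements": "Car, Home",
}

prompt = render(TEMPLATE, values)
print(prompt)
```

Failing loudly on a missing variable is a deliberate choice here, since a prompt with an unfilled `{{placeholder}}` tends to produce confusing model output.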

Running this prompt through an LLM will produce a response like this:

-```text

-, and Life insurance

-

-Given the budget and requirements, we suggest the following insurance package from ACME Insurance:

-- Car, cheap, 500 USD

-- Home, cheap, 600 USD

-- Life, cheap, 100 USD

-Total cost: $1,200 USD

-```

-

-As you can see, it also suggests the Life insurance, which it shouldn't. This result is an indication that we need to optimize the prompt by changing the prompt to be clearer on what it can allow. After som *trial and error*, we arrive at the following prompt:

-

-```text

-Insurance company: ACME Insurance

-Insurance products (cost per month):

-- type: Car, cheap, cost: 500 USD

-- type: Car, expensive, cost: 1100 USD

-- type: Home, cheap, cost: 600 USD

-- type: Home, expensive, cost: 1200 USD

-- type: Life, cheap, cost: 100 USD

-

-Please suggest an insurance given the following budget and requirements:

-Budget: $1000 restrict choice to types: Car, Home

-```

+ ```output

+ , and Life insurance

+

+ Given the budget and requirements, we suggest the following insurance package from ACME Insurance:

+ - Car, cheap, 500 USD

+ - Home, cheap, 600 USD

+ - Life, cheap, 100 USD

+ Total cost: $1,200 USD

+ ```

+

+As you can see, it also suggests the Life insurance, which it shouldn't. This result is an indication that we need to optimize the prompt by making it clearer about what is allowed. After some _trial and error_, we arrive at the following prompt:

+

+ ```text

+ Insurance company: ACME Insurance

+ Insurance products (cost per month):

+ - type: Car, cheap, cost: 500 USD

+ - type: Car, expensive, cost: 1100 USD

+ - type: Home, cheap, cost: 600 USD

+ - type: Home, expensive, cost: 1200 USD

+ - type: Life, cheap, cost: 100 USD

+

+ Please suggest an insurance given the following budget and requirements:

+ Budget: $1000 restrict choice to types: Car, Home

+ ```

-Note how adding *type* and *cost* and also using the keyword *restrict* helps the LLM to understand what we want.

+Note how adding _type_ and _cost_ and also using the keyword _restrict_ helps the LLM to understand what we want.

Now we get the following response:

-```text

-Given the budget and requirements, we suggest the Car, Cheap insurance product which costs 500 USD per month.

-```

+ ```output

+ Given the budget and requirements, we suggest the Car, Cheap insurance product which costs 500 USD per month.

+ ```

-The point of this example was to show that even though we're using a basic technique like *generated knowledge*, we still need to optimize the prompt in most cases to get the desired outcome.

+The point of this example was to show that even though we're using a basic technique like _generated knowledge_, we still need to optimize the prompt in most cases to get the desired outcome.

### Least-to-most

@@ -178,7 +178,7 @@ The idea with Least-to-most prompting is to break down a bigger problem into sub

> Prompt: How to perform data science in 5 steps?

-With your AI assistant answering with:

+With your AI assistant answering with:

1. Collect data

1. Clean data

@@ -205,52 +205,52 @@ Here's an example using this technique:

AI Answer:

-```python

-import flask

-app = Flask(__name__)

-

-@app.route('/products')

-def products():

- return 'Products'

-

-@app.route('/customers')

-def customers():

- return 'Customers'

-```

+ ```python

+ import flask

+ app = Flask(__name__)

+

+ @app.route('/products')

+ def products():

+     return 'Products'

+

+ @app.route('/customers')

+ def customers():

+     return 'Customers'

+ ```

> Prompt: suggest 3 improvements of the above code

AI Answer:

-```text

-Sure, here are three suggestions to improve the code:

-

-Import the correct module: The first line should import Flask instead of flask. The correct import statement should be from flask import Flask.

-

-Add a main function: Add a if __name__ == '__main__': block to the end of the file to run the application when the file is executed.

-

-Use f-strings: Use f-strings to format the return statements in the route functions. For example, return 'Products' can be changed to return f'This is the {request.path} page'.

-```

-

-```python

-from flask import Flask, request

-

-app = Flask(__name__)

-

-@app.route('/products')

-def products():

- return f'This is the {request.path} page'

-

-@app.route('/customers')

-def customers():

- return f'This is the {request.path} page'

+ ```text

+ Sure, here are three suggestions to improve the code:

+

+ Import the correct module: The first line should import Flask instead of flask. The correct import statement should be from flask import Flask.

+

+ Add a main function: Add a if __name__ == '__main__': block to the end of the file to run the application when the file is executed.

+

+ Use f-strings: Use f-strings to format the return statements in the route functions. For example, return 'Products' can be changed to return f'This is the {request.path} page'.

+ ```

-if __name__ == '__main__':

- app.run()

-```

+ ```python

+ from flask import Flask, request

+

+ app = Flask(__name__)

+

+ @app.route('/products')

+ def products():

+     return f'This is the {request.path} page'

+

+ @app.route('/customers')

+ def customers():

+     return f'This is the {request.path} page'

+

+ if __name__ == '__main__':

+     app.run()

+ ```

As you can see, the above AI response improves on the first suggested code by critiquing the first answer.

-

+

### Maieutic prompting

Maieutic prompting is a technique that is similar to self-refine but it's more about asking the LLM to explain itself. The goal is to reduce inconsistencies in the LLM's output so as to ensure it arrives at the correct answer. The workflow to follow is:

@@ -264,43 +264,43 @@ Repeat 2 and 3 until you've gone through all the parts and you're satisfied with

Here's an example prompt:

> prompt: How can I create a crisis plan to mitigate for a pandemic in 5 steps?

-LLM answer:

+LLM answer:

-```text

-1. Identify the risks

-1. Identify the stakeholders

-1. Identify the resources

-1. Identify the actions

-1. Identify the communication channels

-```

+ ```text

+ 1. Identify the risks

+ 1. Identify the stakeholders

+ 1. Identify the resources

+ 1. Identify the actions

+ 1. Identify the communication channels

+ ```

It's identified 5 steps, but can we determine if this is correct? Let's ask the LLM to explain each step:

> prompt: Explain the first step in more detail, what are the risks in detail with a pandemic?

-```text

-The risks are the following:

-

-- Loss of life

-- Loss of business

-- Loss of reputation

-- Loss of customers

-- Loss of employees

-```

+ ```text

+ The risks are the following:

+

+ - Loss of life

+ - Loss of business

+ - Loss of reputation

+ - Loss of customers

+ - Loss of employees

+ ```

At this point, we want to make sure the LLM is correct so we ask it to explain the first risk in more detail and hope it's consistent with the answer above:

> prompt: In a Pandemic, which is the biggest risk and why?

-```text

-The biggest risk is loss of life because it's the most important thing to protect.

-```

+ ```text

+ The biggest risk is loss of life because it's the most important thing to protect.

+ ```

> What are the two biggest risks in a pandemic?

-```text

-The two biggest risks are loss of life and loss of business.

-```

+ ```text

+ The two biggest risks are loss of life and loss of business.

+ ```

At this point, the LLM is consistent and mentions "life" and "business" as the top two risks. We can now continue to the next step and feel fairly confident. However, we shouldn't trust the LLM blindly; we should always verify the output.
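The consistency check in this example can be approximated in code. The sketch below is a crude proxy under clear assumptions: `is_consistent` is a hypothetical helper that only looks for a shared phrase in two answers, and the answer strings are copied from the example rather than fetched from a model.

```python
def is_consistent(first_answer: str, second_answer: str, claim: str) -> bool:
    """Return True if the same claim appears in both answers (case-insensitive)."""
    claim = claim.lower()
    return claim in first_answer.lower() and claim in second_answer.lower()


# Answers taken from the example conversation above.
biggest_risk = (
    "The biggest risk is loss of life because it's the most important thing to protect."
)
top_two_risks = "The two biggest risks are loss of life and loss of business."

print(is_consistent(biggest_risk, top_two_risks, "loss of life"))
```

A phrase match like this can only hint at agreement, so a human reviewer should still read the answers before moving on to the next step.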

@@ -310,107 +310,107 @@ LLMs are non deterministic by nature, meaning that you will get different result

> "Generate code for a Python Web API"

-```text

-# Import necessary modules

-import flask

-from flask import request, jsonify

-

-# Create a Flask app

-app = flask.Flask(__name__)

-

-# Create a route for the API

-@app.route('/api', methods=['GET'])

-def api():

- # Get the data from the request

- data = request.args

-

- # Process the data

- result = process_data(data)

-

- # Return the result as JSON

- return jsonify(result)

-

-# Function to process the data

-def process_data(data):

- # Do something with the data

- result = data + 1

-

- # Return the result

- return result

-

-# Run the app

-if __name__ == '__main__':

- app.run()

-

-```

+ ```text

+ # Import necessary modules

+ import flask

+ from flask import request, jsonify

+

+ # Create a Flask app

+ app = flask.Flask(__name__)

+

+ # Create a route for the API

+ @app.route('/api', methods=['GET'])

+ def api():

+ # Get the data from the request

+ data = request.args

+

+ # Process the data

+ result = process_data(data)

+

+ # Return the result as JSON

+ return jsonify(result)

+

+ # Function to process the data

+ def process_data(data):

+ # Do something with the data

+ result = data + 1

+

+ # Return the result

+ return result

+

+ # Run the app

+ if __name__ == '__main__':

+ app.run()

+

+ ```

Running the same prompt again generates a slightly different response:

-```text

-#import necessary packages

-import flask

-from flask import request, jsonify

-

-#create the Flask application

-app = flask.Flask(__name__)

-app.config["DEBUG"] = True

-

-#create a list of books

-books = [

- {'id': 0,

- 'title': 'A Fire Upon the Deep',

- 'author': 'Vernor Vinge',

- 'first_sentence': 'The coldsleep itself was dreamless.',

- 'year_published': '1992'},

- {'id': 1,

- 'title': 'The Ones Who Walk Away From Omelas',

- 'author': 'Ursula K. Le Guin',

- 'first_sentence': 'With a clamor of bells that set the swallows soaring, the Festival of Summer came to the city Omelas, bright-towered by the sea.',

- 'published': '1973'},

- {'id': 2,

- 'title': 'Dhalgren',

- 'author': 'Samuel R. Delany',

- 'first_sentence': 'to wound the autumnal city.',

- 'published': '1975'}

-]

-

-#create an endpoint for the API

-@app.route('/', methods=['GET'])

-def home():

- return '''Books API

-A prototype API for retrieving books.

'''

-

-#create an endpoint to return all books

-@app.route('/api/v1/resources/books/all', methods=['GET'])

-def api_all():

- return jsonify(books)

-

-#create an endpoint to return a single book

-@app.route('/api/v1/resources/books', methods=['GET'])

-def api_id():

- #check if an ID was provided as part of the URL

- #if ID is provided, assign it to a variable

- #if no ID is provided, display an error

- if 'id' in request.args:

- id = int(request.args['id'])

- else:

- return "Error: No id field provided. Please specify an id."

-

- #create an empty list for our results

- results = []

-

- #loop through the data and match results that fit the requested ID

- #IDs are unique, but other fields might return many results

- for book in books:

- if book['id'] == id:

- results.append(book)

-

- #use the jsonify function from Flask to convert our list of

- #Python dictionaries to the JSON format

- return jsonify(results)

-

-app.run()

-```

+ ```text

+ #import necessary packages

+ import flask

+ from flask import request, jsonify

+

+ #create the Flask application

+ app = flask.Flask(__name__)

+ app.config["DEBUG"] = True

+

+ #create a list of books

+ books = [

+ {'id': 0,

+ 'title': 'A Fire Upon the Deep',

+ 'author': 'Vernor Vinge',

+ 'first_sentence': 'The coldsleep itself was dreamless.',

+ 'year_published': '1992'},

+ {'id': 1,

+ 'title': 'The Ones Who Walk Away From Omelas',

+ 'author': 'Ursula K. Le Guin',

+ 'first_sentence': 'With a clamor of bells that set the swallows soaring, the Festival of Summer came to the city Omelas, bright-towered by the sea.',

+ 'published': '1973'},

+ {'id': 2,

+ 'title': 'Dhalgren',

+ 'author': 'Samuel R. Delany',

+ 'first_sentence': 'to wound the autumnal city.',

+ 'published': '1975'}

+ ]

+

+ #create an endpoint for the API

+ @app.route('/', methods=['GET'])

+ def home():

+ return '''Books API

+ A prototype API for retrieving books.

'''

+

+ #create an endpoint to return all books

+ @app.route('/api/v1/resources/books/all', methods=['GET'])

+ def api_all():

+ return jsonify(books)

+

+ #create an endpoint to return a single book

+ @app.route('/api/v1/resources/books', methods=['GET'])

+ def api_id():

+ #check if an ID was provided as part of the URL

+ #if ID is provided, assign it to a variable

+ #if no ID is provided, display an error

+ if 'id' in request.args:

+ id = int(request.args['id'])

+ else:

+ return "Error: No id field provided. Please specify an id."

+

+ #create an empty list for our results

+ results = []

+

+ #loop through the data and match results that fit the requested ID

+ #IDs are unique, but other fields might return many results

+ for book in books:

+ if book['id'] == id:

+ results.append(book)

+

+ #use the jsonify function from Flask to convert our list of

+ #Python dictionaries to the JSON format

+ return jsonify(results)

+

+ app.run()

+ ```

> So is the varied output a problem?

@@ -424,137 +424,137 @@ Temperature is a value between 0 and 1, where 0 is the most deterministic and 1

> "Generate code for a Python Web API"

-```python

-#import necessary libraries

-import flask

-from flask import request, jsonify

-

-#create an instance of the Flask class

-app = flask.Flask(__name__)

-

-#create an endpoint for the API

-@app.route('/api/v1/endpoint', methods=['GET'])

-def api_endpoint():

- #get the request data

- data = request.get_json()

-

- #process the data

- result = process_data(data)

-

- #return the result

- return jsonify(result)

-

-#function to process the data

-def process_data(data):

- #process the data

- result = {'result': 'success'}

-

- #return the result

- return result

-

-#run the Flask app

-if __name__ == '__main__':

- app.run()

-

-```

+ ```python

+ #import necessary libraries

+ import flask

+ from flask import request, jsonify

+

+ #create an instance of the Flask class

+ app = flask.Flask(__name__)

+

+ #create an endpoint for the API

+ @app.route('/api/v1/endpoint', methods=['GET'])

+ def api_endpoint():

+ #get the request data

+ data = request.get_json()

+

+ #process the data

+ result = process_data(data)

+

+ #return the result

+ return jsonify(result)

+

+ #function to process the data

+ def process_data(data):

+ #process the data

+ result = {'result': 'success'}

+

+ #return the result

+ return result

+

+ #run the Flask app

+ if __name__ == '__main__':

+ app.run()

+

+ ```

Running the prompt again gives us this outcome:

-```python

-#import necessary libraries

-import flask

-from flask import request, jsonify

-

-#create a Flask app

-app = flask.Flask(__name__)

-

-#create a route for the API

-@app.route('/api', methods=['GET'])

-def api_call():

- #get the data from the request

- data = request.get_json()

+ ```python

+ #import necessary libraries

+ import flask

+ from flask import request, jsonify

- #process the data

- result = process_data(data)

+ #create a Flask app

+ app = flask.Flask(__name__)

- #return the result

- return jsonify(result)

-

-#define the process_data function

-def process_data(data):

- #do something with the data

- result = data + 1

+ #create a route for the API

+ @app.route('/api', methods=['GET'])

+ def api_call():

+ #get the data from the request

+ data = request.get_json()

+

+ #process the data

+ result = process_data(data)

+

+ #return the result

+ return jsonify(result)

- #return the result

- return result

-

-#run the app

-if __name__ == '__main__':

- app.run()

-

-```

+ #define the process_data function

+ def process_data(data):

+ #do something with the data

+ result = data + 1

+

+ #return the result

+ return result

+

+ #run the app

+ if __name__ == '__main__':

+ app.run()

+

+ ```
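As a rough intuition for why runs at a low temperature look so alike, here is a minimal sketch of how temperature reshapes the probabilities a model samples from. The token names and scores in `toy_logits` are invented for illustration, `softmax_with_temperature` is a helper written for this example, and 0.1 is just an illustrative low value. Real models work over a much larger vocabulary, and hosted APIs may handle the parameter differently.

```python
import math
from typing import Dict


def softmax_with_temperature(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Turn raw scores into probabilities, dividing each score by the temperature first."""
    if temperature <= 0:
        # This sketch rejects 0 to avoid dividing by zero.
        raise ValueError("temperature must be positive")
    scaled = {token: score / temperature for token, score in logits.items()}
    largest = max(scaled.values())  # subtract the max for numerical stability
    exps = {token: math.exp(value - largest) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


# Invented scores for three candidate continuations (assumption, not real model output).
toy_logits = {"request.get_json()": 2.0, "request.args": 1.0, "request.form": 0.5}

low = softmax_with_temperature(toy_logits, 0.1)
high = softmax_with_temperature(toy_logits, 0.9)

for label, probs in (("temperature 0.1", low), ("temperature 0.9", high)):
    formatted = ", ".join(f"{token}: {p:.3f}" for token, p in probs.items())
    print(f"{label} -> {formatted}")
```

At the low setting almost all of the probability lands on the highest-scoring candidate, so repeated runs tend to pick the same continuation. At the higher setting the alternatives keep a meaningful share, which is where the variation comes from.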

There's only a tiny difference between these two outputs. Let's do the opposite this time and set the temperature to 0.9:

-```python

-# Import necessary libraries

-import flask

-from flask import request, jsonify

-

-# Create a Flask app

-app = flask.Flask(__name__)

-

-# Create a route for the API

-@app.route('/api', methods=['GET'])

-def api_call():

- # Get the data from the request

- data = request.args

-

- # Process the data

- result = process_data(data)

-

- # Return the result

- return jsonify(result)

-

-# Function to process the data

-def process_data(data):

- # Do something with the data

- result = data + 1

-

- # Return the result

- return result

-

-# Run the app

-if __name__ == '__main__':

- app.run()

-

-```

+ ```python

+ # Import necessary libraries

+ import flask

+ from flask import request, jsonify

+

+ # Create a Flask app

+ app = flask.Flask(__name__)

+

+ # Create a route for the API

+ @app.route('/api', methods=['GET'])

+ def api_call():

+ # Get the data from the request

+ data = request.args

+

+ # Process the data

+ result = process_data(data)

+

+ # Return the result

+ return jsonify(result)

+

+ # Function to process the data

+ def process_data(data):

+ # Do something with the data

+ result = data + 1

+

+ # Return the result

+ return result

+

+ # Run the app

+ if __name__ == '__main__':

+ app.run()

+

+ ```

And here is the second attempt with the temperature set to 0.9:

-```python

-import flask

-from flask import request, jsonify

-

-# create the Flask app

-app = flask.Flask(__name__)

-app.config['DEBUG'] = True

-

-# create some test data

-books = [

- {'id': 0, 'title': 'A Fire Upon The Deep', 'author': 'Vernor Vinge', 'first_sentence': 'The coldsleep itself was dreamless.', 'year_published': '1992'},

- {'id': 1, 'title': 'The Ones Who Walk Away From Omelas', 'author': 'Ursula K. Le Guin', 'first_sentence': 'With a clamor of bells that set the swallows soaring, the Festival of Summer came to the city Omelas, bright-towered by the sea.', 'published': '1973'},

- {'id': 2, 'title': 'Dhalgren', 'author': 'Samuel R. Delany', 'first_sentence': 'to wound the autumnal city.', 'published': '1975'}

-]

-

-# create an endpoint

-@app.route('/', methods=['GET'])

-def home():

- return '''Welcome to our book API!

'''

-

-@app.route('/api/v1/resources/books

-

-```