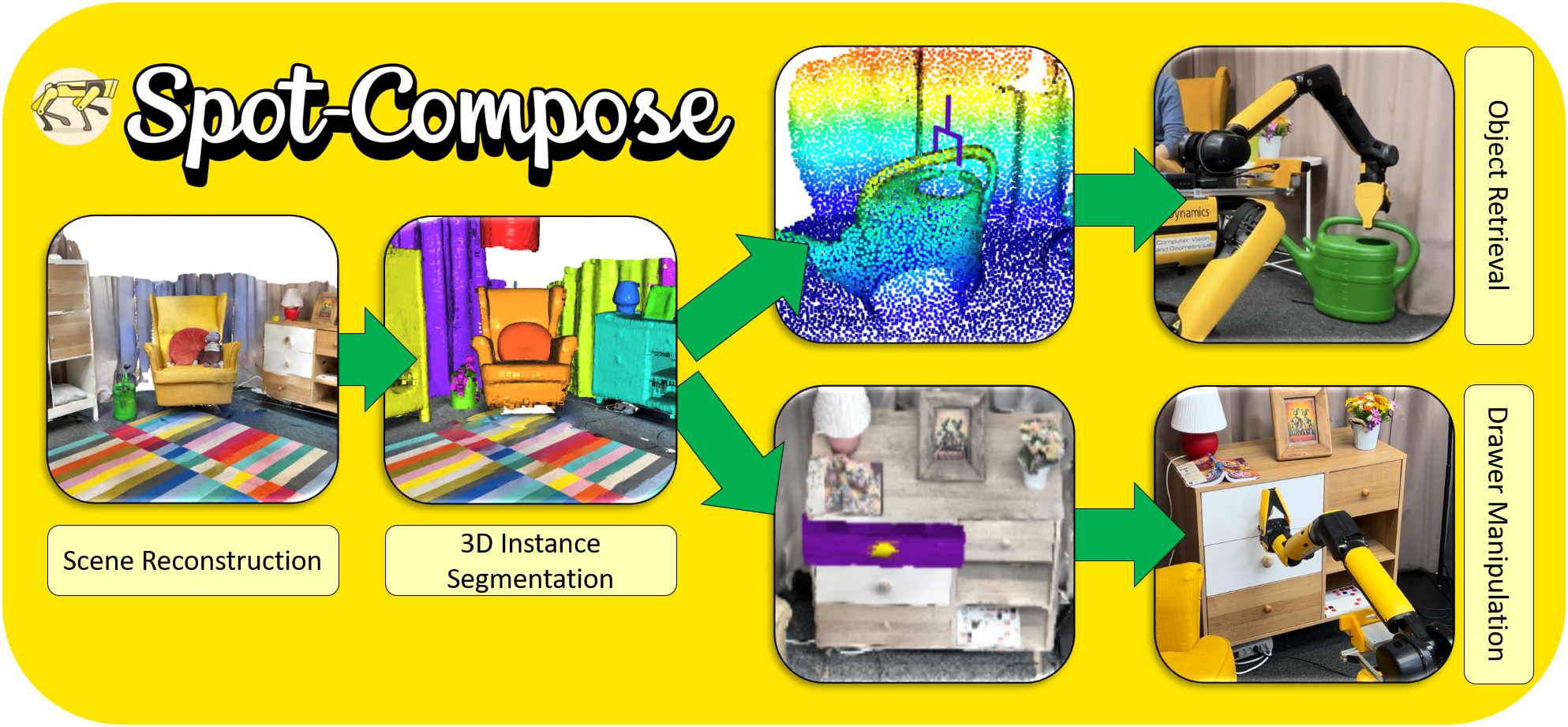

Spot-Compose: A Framework for Open-Vocabulary Object Retrieval and Drawer Manipulation in Point Clouds

Oliver Lemke1, Zuria Bauer1, René Zurbrügg1, Marc Pollefeys1,2, Francis Engelmann1,*, Hermann Blum1,*

1ETH Zurich 2Microsoft Mixed Reality & AI Labs *Equal contribution

Spot-Compose is a framework for integrating modern machine perception techniques with Spot. It includes experiments on object grasping and dynamic drawer manipulation.

[Project Webpage] [Paper] [Teaser Video]

- April 23rd: release of teaser video.

- April 22nd: release on arXiv.

- March 13th 2024: Code released.

spot-compose/

├── source/ # All source code

│ ├── utils/ # General utility functions

│ │ ├── coordinates.py # Coordinate calculations (poses, translations, etc.)

│ │ ├── docker_communication.py # Communication with docker servers

│ │ ├── environment.py # API keys, env variables

│ │ ├── files.py # File system handling

│ │ ├── graspnet_interface.py # Communication with graspnet server

│ │ ├── importer.py # Config-based importing

│ │ ├── mask3D_interface.py # Handling of Mask3D instance segmentation

│ │ ├── point_clouds.py # Point cloud computations

│ │ ├── recursive_config.py # Recursive configuration files

│ │ ├── scannet_200_labels.py # Scannet200 labels (for Mask3D)

│ │ ├── singletons.py # Singletons for global unique access

│ │ ├── user_input.py # Handle user input

│ │ ├── vis.py # Handle visualizations

│ │ ├── vitpose_interface.py # Handle communications with VitPose docker server

│ │ └── zero_shot_object_detection.py # Object detections from images

│ ├── robot_utils/ # Utility functions specific to spot functionality

│ │ ├── base.py # Framework and wrapper for all scripts

│ │ ├── basic_movements.py # Basic robot commands (moving body / arm, stowing, etc.)

│ │ ├── advanced_movements.py # Advanced robot commands (planning, complex movements)

│ │ ├── frame_transformer.py # Simplified transformation between frames of reference

│ │ ├── video.py # Handle actions that require access to robot cameras

│ │ └── graph_nav.py # Handle actions that require access to GraphNav service

│ └── scripts/

│ ├── my_robot_scripts/

│ │ ├── estop_nogui.py # E-Stop

│ │ └── ... # Other action scripts

│ └── point_cloud_scripts/

│ ├── extract_point_cloud.py # Extract point cloud from Boston Dynamics autowalk

│ ├── full_align.py # Align autowalk and scanned point cloud

│ └── vis_ply_point_clouds_with_coordinates.py # Visualize aligned point cloud

├── data/

│ ├── autowalk/ # Raw autowalk data

│ ├── point_clouds/ # Extracted point clouds from autowalks

│ ├── prescans/ # Raw prescan data

│ ├── aligned_point_clouds/ # Prescan point clouds aligned with extracted autowalk clouds

│ └── masked/ # Mask3D output given aligned point clouds

├── configs/ # configs

│ └── config.yaml # Uppermost level of recursive configurations (see configs sections for more info)

├── shells/

│ ├── estop.sh # E-Stop script

│ ├── mac_routing.sh # Set up networking on workstation Mac

│ ├── ubuntu_routing.sh # Set up networking on workstation Ubuntu

│ ├── robot_routing.sh # Set up networking on NUC

│ └── start.sh # Convenient script execution

├── README.md # Project documentation

├── requirements.txt # pip requirements file

├── pyproject.toml # Formatter and linter specs

└── LICENSE

The main dependencies of the project are the following:

python: 3.8

You can set up a pip environment as follows:

git clone --recurse-submodules git@github.com:oliver-lemke/spot-compose.git

cd spot-compose

virtualenv --python="/usr/bin/python3.8" "venv/"

source venv/bin/activate

pip install -r requirements.txt

The pre-trained model weights for YOLO-based drawer detection are available here.

Docker containers are used to run external neural networks. This allows for easy modularity when working with multiple methods, without tedious setup.

Each docker container functions as a self-contained server that answers requests. Please refer to utils/docker_communication.py for your own custom setup, or to the respective files in utils/ for existing containers.

To run the respective docker container, please first pull the desired image via

docker pull [Link]

Once docker has finished pulling the image, you can start a container via the Run Command.

When you are inside the container shell, simply run the Start Command to start the server.

| Name | Link | Run Command | Start Command |
|---|---|---|---|
| AnyGrasp | craiden/graspnet:v1.0 | docker run -p 5000:5000 --gpus all -it craiden/graspnet:v1.0 | python3 app.py |
| OpenMask3D | craiden/openmask:v1.0 | docker run -p 5001:5001 --gpus all -it craiden/openmask:v1.0 | python3 app.py |
| ViTPose | craiden/vitpose:v1.0 | docker run -p 5002:5002 --gpus all -it craiden/vitpose:v1.0 | easy_ViTPose/venv/bin/python app.py |
| DrawerDetection | craiden/yolodrawer:v1.0 | docker run -p 5004:5004 --gpus all -it craiden/yolodrawer:v1.0 | python3 app.py |
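Before running robot scripts, it can help to confirm that the containers are reachable. The following is a minimal sketch, not part of the repository: it only attempts a TCP connection to the host ports published by the Run Commands above, and the names `CONTAINER_PORTS`, `port_is_open`, and `check_containers` are hypothetical. It assumes the containers run on the same machine (`127.0.0.1`).

```python
import socket
from typing import Dict

# Host ports taken from the Run Commands in the table above.
CONTAINER_PORTS: Dict[str, int] = {
    "AnyGrasp": 5000,
    "OpenMask3D": 5001,
    "ViTPose": 5002,
    "DrawerDetection": 5004,
}


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def check_containers(host: str = "127.0.0.1") -> Dict[str, bool]:
    """Map each container name to whether its published port accepts connections."""
    return {name: port_is_open(host, port) for name, port in CONTAINER_PORTS.items()}


if __name__ == "__main__":
    for name, reachable in check_containers().items():
        print(f"{name}: {'reachable' if reachable else 'not reachable'}")
```

Note that an open port only indicates that the container is publishing it. It does not guarantee that the Start Command has been run inside the container, so a failed request may still mean the server itself is not up yet.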

For this project, we require two point clouds: one for navigation (low resolution, captured by Spot) and one for segmentation (high resolution, captured by a commodity scanner). The former is used for initial localization and for setting the origin at the AprilTag fiducial. The latter is used for accurate segmentation.

To capture the low-resolution point cloud, please position Spot in front of your AprilTag and start the autowalk.

Zip the resulting data and unzip it into the data/autowalk folder.

Fill in the name of the unzipped folder in the config file under pre_scanned_graphs/low_res.

To capture the high-resolution point cloud, we use the 3D Scanner App on iOS.

Make sure the fiducial is visible during the scan for initialization.

Once the scan is complete, click on Share and export two things:

- All Data
- Point Cloud/PLY with the High Density setting enabled and Z axis up disabled

Unzip the All Data zip file into the data/prescans folder. Rename the point cloud to pcd.ply and copy it into the folder, such that the resulting directory structure looks like the following:

prescans/

├── all_data_folder/

│ ├── annotations.json

│ ├── export.obj

│ ├── export_refined.obj

│ ├── frame_00000.jpg

│ ├── frame_00000.json

│ ├── ...

│ ├── info.json

│ ├── pcd.ply.json

│ ├── textured_output.jpg

│ ├── textured_output.mtl

│ ├── textured_output.obj

│ ├── thumb_00000.jpg

│ └── world_map.arkit

Finally, fill in the name of your all_data_folder in the config file under pre_scanned_graphs/high_res.
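A quick sanity check of the prescan folder can catch a misplaced point cloud before alignment. The sketch below is an illustration under assumptions, not code from the repository: the function name `missing_prescan_files` is hypothetical, and it only checks for `pcd.ply`, the file name the instructions above ask you to use.

```python
from pathlib import Path
from typing import List, Sequence, Union


def missing_prescan_files(
    prescans_dir: Union[str, Path],
    folder_name: str,
    required: Sequence[str] = ("pcd.ply",),
) -> List[str]:
    """Return the required entries that are missing from the prescan folder.

    If the folder itself does not exist, its path is returned as the only
    missing entry. An empty list means every required file was found.
    """
    folder = Path(prescans_dir) / folder_name
    if not folder.is_dir():
        return [str(folder)]
    return [name for name in required if not (folder / name).is_file()]


if __name__ == "__main__":
    # "all_data_folder" stands for the value set under pre_scanned_graphs/high_res.
    missing = missing_prescan_files("data/prescans", "all_data_folder")
    print("OK" if not missing else f"Missing: {missing}")
```

The `required` tuple can be extended with other files from the listing above if your pipeline depends on them.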

In our project setup, we connect the robot via a NUC on Spot's back. The NUC is connected to Spot via cable, and to a router via WiFi.

However, since the robot is not directly reachable from the router, we have to (a) tell the workstation where to route traffic destined for the robot, and (b) tell the NUC to act as a bridge. You may have to adjust the addresses in the scripts to fit your setup.

On the workstation run ./shells/ubuntu_routing.sh (or ./shells/mac_routing.sh depending on your workstation operating system).

On the NUC, first ssh into it, then run ./robot_routing.sh to configure the NUC as a network bridge.

The base config file can be found under configs/config.yaml.

However, our config system allows for dynamically extending and inheriting from configs, if you have different setups on different workstations.

To do this, simply specify the bottom-most file in the inheritance tree when creating the Config() object. Each config file specifies the file it inherits from in an extends field.

In our example, the overwriting config is specified in configs/template_extension.yaml, meaning the inheritance graph looks like:

template_extension.yaml ---overwrites---> config.yaml

In this example, we would specify Config(file='configs/template_extension.yaml'), which then overwrites all the config files it extends.

However, this functionality is optional: you can also work with config.yaml directly, which is the default.
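To illustrate the inheritance idea, here is a minimal sketch of how an `extends` chain could be resolved. It is not the implementation in utils/recursive_config.py: the functions `deep_merge` and `resolve_config` are hypothetical, the configs are plain in-memory dictionaries rather than YAML files, and merging nested sections key by key is an assumption. The key names `pre_scanned_graphs`, `low_res`, and `high_res` come from the sections above, while the values are placeholders.

```python
from typing import Any, Dict, Tuple

ConfigDict = Dict[str, Any]


def deep_merge(base: ConfigDict, override: ConfigDict) -> ConfigDict:
    """Return a new dict where values in override take precedence over base."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Merge nested sections key by key instead of replacing them.
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def resolve_config(
    name: str, files: Dict[str, ConfigDict], _seen: Tuple[str, ...] = ()
) -> ConfigDict:
    """Resolve a config by following its 'extends' field up to the base config."""
    if name in _seen:
        raise ValueError(f"Circular 'extends' chain at {name}")
    current = dict(files[name])
    parent_name = current.pop("extends", None)
    if parent_name is None:
        return current  # Reached the top of the inheritance chain.
    parent = resolve_config(parent_name, files, _seen + (name,))
    return deep_merge(parent, current)


# Placeholder contents standing in for the two YAML files.
files = {
    "config.yaml": {
        "pre_scanned_graphs": {"low_res": "base_autowalk", "high_res": "base_scan"},
    },
    "template_extension.yaml": {
        "extends": "config.yaml",
        "pre_scanned_graphs": {"low_res": "my_autowalk"},
    },
}

resolved = resolve_config("template_extension.yaml", files)
print(resolved)
```

In this sketch the extension only overrides `low_res`, so `high_res` is inherited unchanged from the base config.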

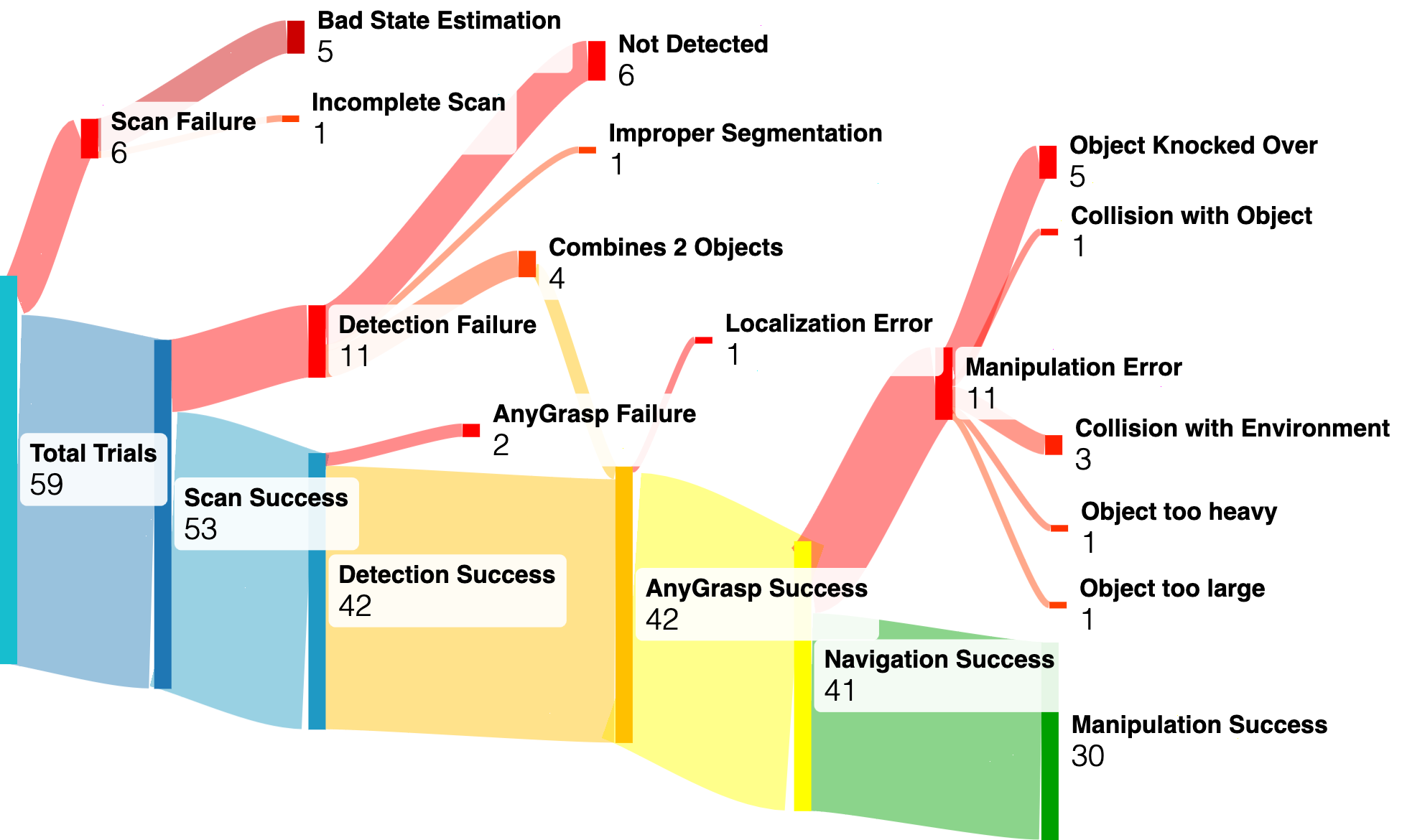

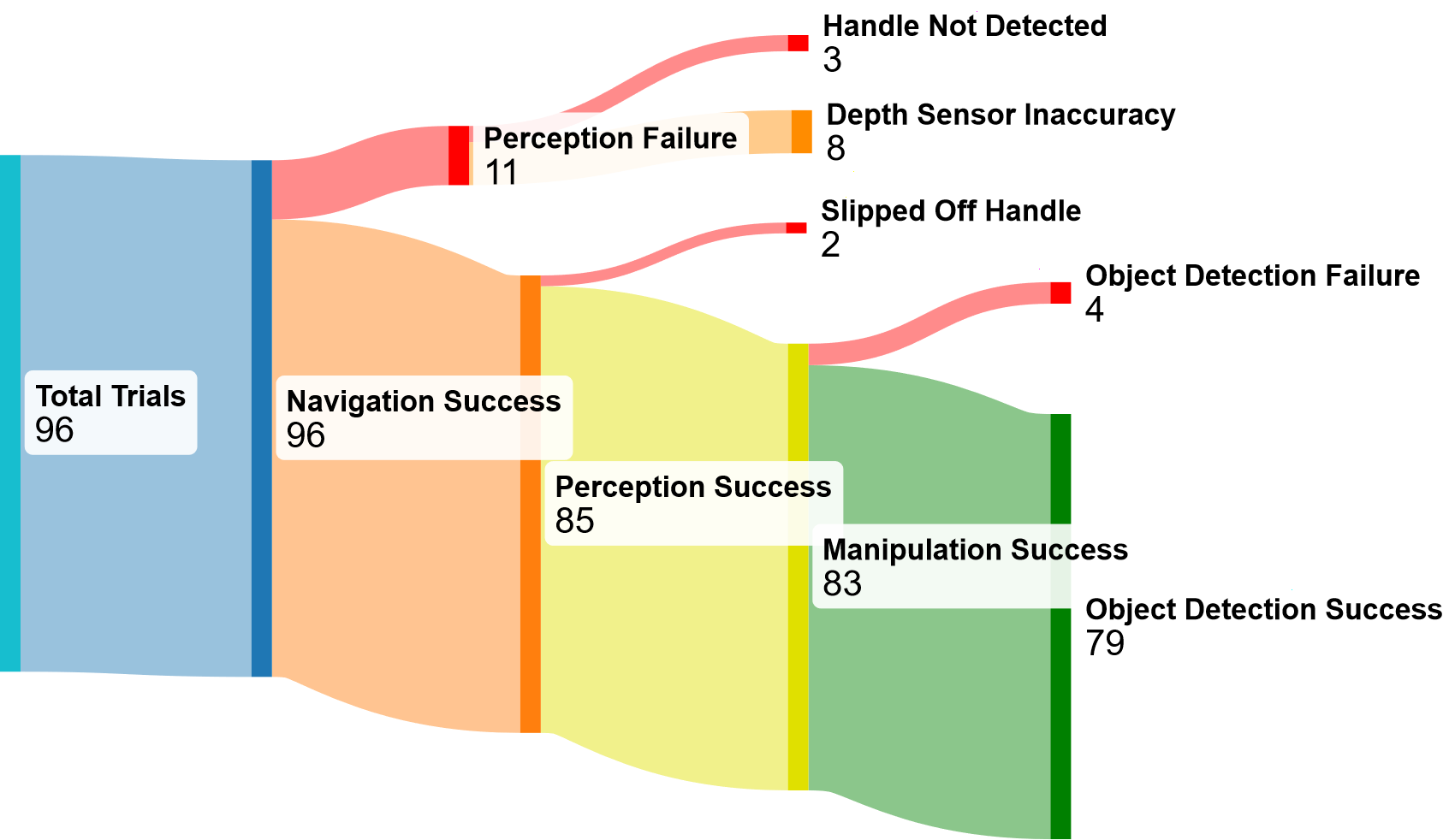

We provide detailed results here.

- Finish Documentation

@inproceedings{lemke2024spotcompose,

title={Spot-Compose: A Framework for Open-Vocabulary Object Retrieval and Drawer Manipulation in Point Clouds},

author={Oliver Lemke and Zuria Bauer and Ren{\'e} Zurbr{\"u}gg and Marc Pollefeys and Francis Engelmann and Hermann Blum},

booktitle={2nd Workshop on Mobile Manipulation and Embodied Intelligence at ICRA 2024},

year={2024},

}