| OpenJDK |

It is necessary for building Java API.

- See Java API build for building tutorials.

+ See Java API build for building tutorials.

|

diff --git a/docs/en/01-how-to-build/build_from_docker.md b/docs/en/01-how-to-build/build_from_docker.md

index 816c2c77b1..22d9acbab7 100644

--- a/docs/en/01-how-to-build/build_from_docker.md

+++ b/docs/en/01-how-to-build/build_from_docker.md

@@ -51,7 +51,7 @@ docker run --gpus all -it mmdeploy:master-gpu

As described [here](https://forums.developer.nvidia.com/t/cuda-error-the-provided-ptx-was-compiled-with-an-unsupported-toolchain/185754), update the GPU driver to the latest one for your GPU.

-2. docker: Error response from daemon: could not select device driver "" with capabilities: \[gpu\].

+2. docker: Error response from daemon: could not select device driver "" with capabilities: [gpu].

```

# Add the package repositories

diff --git a/docs/en/01-how-to-build/build_from_source.md b/docs/en/01-how-to-build/build_from_source.md

index 6fa742c7dd..2c272e3db7 100644

--- a/docs/en/01-how-to-build/build_from_source.md

+++ b/docs/en/01-how-to-build/build_from_source.md

@@ -3,7 +3,7 @@

## Download

```shell

-git clone -b 1.x git@github.com:open-mmlab/mmdeploy.git --recursive

+git clone -b main git@github.com:open-mmlab/mmdeploy.git --recursive

```

Note:

@@ -26,7 +26,7 @@ Note:

- If it fails when `git clone` via `SSH`, you can try the `HTTPS` protocol like this:

```shell

- git clone -b 1.x https://github.com/open-mmlab/mmdeploy.git --recursive

+ git clone -b main https://github.com/open-mmlab/mmdeploy.git --recursive

```

## Build

diff --git a/docs/en/01-how-to-build/jetsons.md b/docs/en/01-how-to-build/jetsons.md

index 03ce9db7b2..01a5b049e0 100644

--- a/docs/en/01-how-to-build/jetsons.md

+++ b/docs/en/01-how-to-build/jetsons.md

@@ -237,7 +237,7 @@ It takes about 15 minutes to install ppl.cv on a Jetson Nano. So, please be pati

## Install MMDeploy

```shell

-git clone -b 1.x --recursive https://github.com/open-mmlab/mmdeploy.git

+git clone -b main --recursive https://github.com/open-mmlab/mmdeploy.git

cd mmdeploy

export MMDEPLOY_DIR=$(pwd)

```

@@ -305,7 +305,7 @@ pip install -v -e . # or "python setup.py develop"

2. Follow [this document](../02-how-to-run/convert_model.md) on how to convert model files.

-For this example, we have used [retinanet_r18_fpn_1x_coco.py](https://github.com/open-mmlab/mmdetection/blob/3.x/configs/retinanet/retinanet_r18_fpn_1x_coco.py) as the model config, and [this file](https://download.openmmlab.com/mmdetection/v2.0/retinanet/retinanet_r18_fpn_1x_coco/retinanet_r18_fpn_1x_coco_20220407_171055-614fd399.pth) as the corresponding checkpoint file. Also for deploy config, we have used [detection_tensorrt_dynamic-320x320-1344x1344.py](https://github.com/open-mmlab/mmdeploy/tree/1.x/configs/mmdet/detection/detection_tensorrt_dynamic-320x320-1344x1344.py)

+For this example, we used [retinanet_r18_fpn_1x_coco.py](https://github.com/open-mmlab/mmdetection/blob/3.x/configs/retinanet/retinanet_r18_fpn_1x_coco.py) as the model config and [this file](https://download.openmmlab.com/mmdetection/v2.0/retinanet/retinanet_r18_fpn_1x_coco/retinanet_r18_fpn_1x_coco_20220407_171055-614fd399.pth) as the corresponding checkpoint file. For the deploy config, we used [detection_tensorrt_dynamic-320x320-1344x1344.py](https://github.com/open-mmlab/mmdeploy/tree/main/configs/mmdet/detection/detection_tensorrt_dynamic-320x320-1344x1344.py).

```shell

python ./tools/deploy.py \

diff --git a/docs/en/01-how-to-build/rockchip.md b/docs/en/01-how-to-build/rockchip.md

index 3ecb645490..431b5d346d 100644

--- a/docs/en/01-how-to-build/rockchip.md

+++ b/docs/en/01-how-to-build/rockchip.md

@@ -140,7 +140,7 @@ label: 65, score: 0.95

- MMDet models.

- YOLOV3 & YOLOX: you may paste the following partition configuration into [detection_rknn_static-320x320.py](https://github.com/open-mmlab/mmdeploy/blob/1.x/configs/mmdet/detection/detection_rknn-int8_static-320x320.py):

+ YOLOV3 & YOLOX: you may paste the following partition configuration into [detection_rknn-int8_static-320x320.py](https://github.com/open-mmlab/mmdeploy/blob/main/configs/mmdet/detection/detection_rknn-int8_static-320x320.py):

```python

# yolov3, yolox for rknn-toolkit and rknn-toolkit2

@@ -156,7 +156,7 @@ label: 65, score: 0.95

])

```

- RTMDet: you may paste the following partition configuration into [detection_rknn-int8_static-640x640.py](https://github.com/open-mmlab/mmdeploy/blob/dev-1.x/configs/mmdet/detection/detection_rknn-int8_static-640x640.py):

+ RTMDet: you may paste the following partition configuration into [detection_rknn-int8_static-640x640.py](https://github.com/open-mmlab/mmdeploy/blob/main/configs/mmdet/detection/detection_rknn-int8_static-640x640.py):

```python

# rtmdet for rknn-toolkit and rknn-toolkit2

@@ -172,7 +172,7 @@ label: 65, score: 0.95

])

```

- RetinaNet & SSD & FSAF with rknn-toolkit2, you may paste the following partition configuration into [detection_rknn_static-320x320.py](https://github.com/open-mmlab/mmdeploy/blob/dev-1.x/configs/mmdet/detection/detection_rknn-int8_static-320x320.py). Users with rknn-toolkit can directly use default config.

+ RetinaNet & SSD & FSAF with rknn-toolkit2: you may paste the following partition configuration into [detection_rknn-int8_static-320x320.py](https://github.com/open-mmlab/mmdeploy/blob/main/configs/mmdet/detection/detection_rknn-int8_static-320x320.py). Users with rknn-toolkit can use the default config directly.

```python

# retinanet, ssd for rknn-toolkit2

diff --git a/docs/en/02-how-to-run/prebuilt_package_windows.md b/docs/en/02-how-to-run/prebuilt_package_windows.md

index 6952ad4b7b..5cb513f806 100644

--- a/docs/en/02-how-to-run/prebuilt_package_windows.md

+++ b/docs/en/02-how-to-run/prebuilt_package_windows.md

@@ -21,7 +21,7 @@

______________________________________________________________________

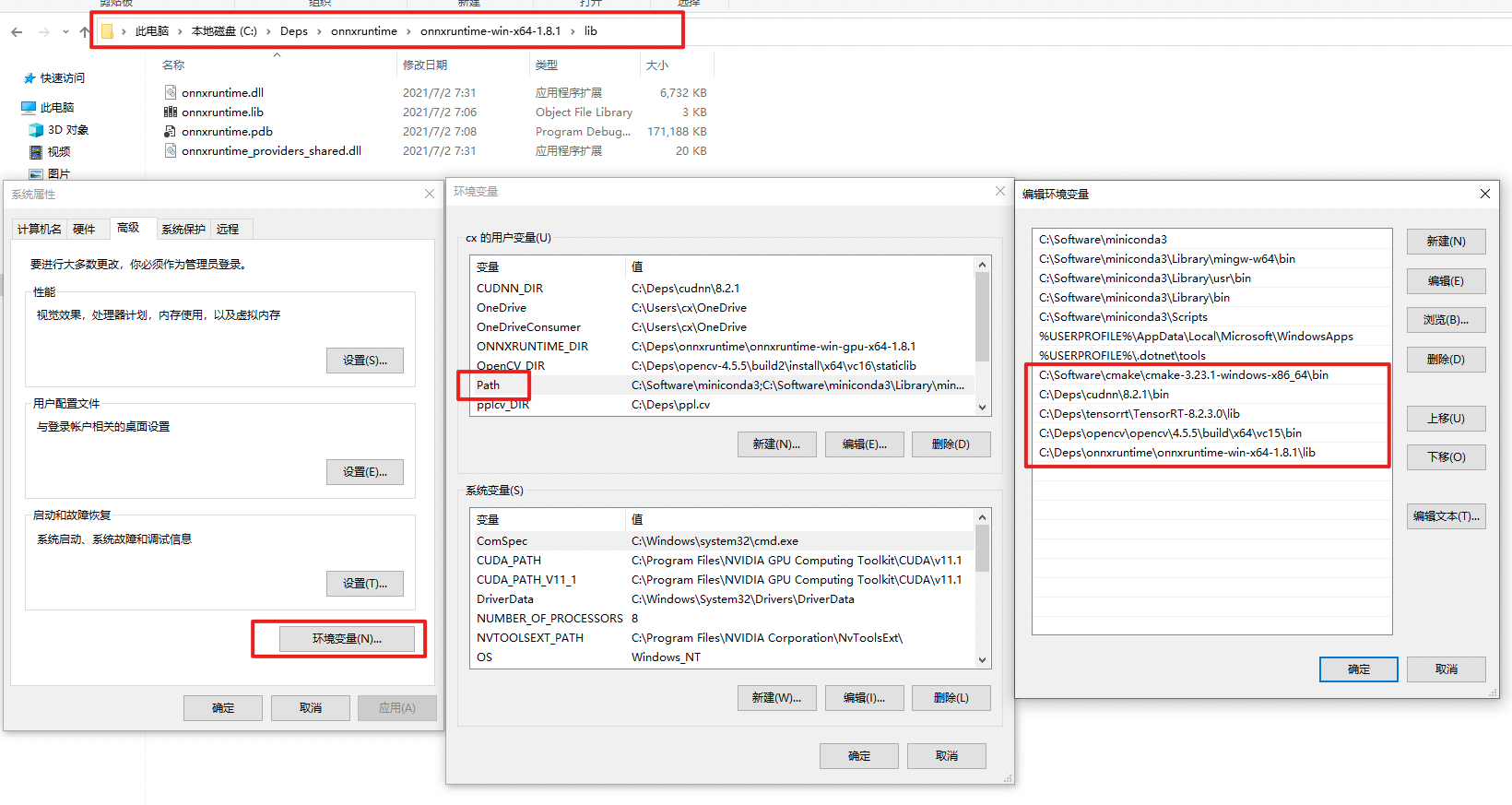

-This tutorial takes `mmdeploy-1.0.0rc3-windows-amd64.zip` and `mmdeploy-1.0.0rc3-windows-amd64-cuda11.3.zip` as examples to show how to use the prebuilt packages. The former support onnxruntime cpu inference, the latter support onnxruntime-gpu and tensorrt inference.

+This tutorial takes `mmdeploy-1.0.0-windows-amd64.zip` and `mmdeploy-1.0.0-windows-amd64-cuda11.3.zip` as examples to show how to use the prebuilt packages. The former supports ONNX Runtime CPU inference; the latter supports ONNX Runtime GPU and TensorRT inference.

The directory structure of the prebuilt package is as follows, where the `dist` folder is about model converter, and the `sdk` folder is related to model inference.

@@ -48,7 +48,7 @@ In order to use the prebuilt package, you need to install some third-party depen

2. Clone the mmdeploy repository

```bash

- git clone -b 1.x https://github.com/open-mmlab/mmdeploy.git

+ git clone -b main https://github.com/open-mmlab/mmdeploy.git

```

:point_right: The main purpose here is to use the configs, so there is no need to compile `mmdeploy`.

@@ -56,7 +56,7 @@ In order to use the prebuilt package, you need to install some third-party depen

3. Install mmclassification

```bash

- git clone -b 1.x https://github.com/open-mmlab/mmclassification.git

+ git clone -b main https://github.com/open-mmlab/mmclassification.git

cd mmclassification

pip install -e .

```

@@ -81,8 +81,8 @@ In order to use `ONNX Runtime` backend, you should also do the following steps.

5. Install `mmdeploy` (Model Converter) and `mmdeploy_runtime` (SDK Python API).

```bash

- pip install mmdeploy==1.0.0rc3

- pip install mmdeploy-runtime==1.0.0rc3

+ pip install mmdeploy==1.0.0

+ pip install mmdeploy-runtime==1.0.0

```

:point_right: If you have installed it before, please uninstall it first.

@@ -100,7 +100,7 @@ In order to use `ONNX Runtime` backend, you should also do the following steps.

:exclamation: Restart powershell to make the environment variables setting take effect. You can check whether the settings are in effect by `echo $env:PATH`.

-8. Download SDK C/cpp Library mmdeploy-1.0.0rc3-windows-amd64.zip

+8. Download the SDK C/C++ library mmdeploy-1.0.0-windows-amd64.zip

### TensorRT

@@ -109,8 +109,8 @@ In order to use `TensorRT` backend, you should also do the following steps.

5. Install `mmdeploy` (Model Converter) and `mmdeploy_runtime` (SDK Python API).

```bash

- pip install mmdeploy==1.0.0rc3

- pip install mmdeploy-runtime-gpu==1.0.0rc3

+ pip install mmdeploy==1.0.0

+ pip install mmdeploy-runtime-gpu==1.0.0

```

:point_right: If you have installed it before, please uninstall it first.

@@ -129,7 +129,7 @@ In order to use `TensorRT` backend, you should also do the following steps.

7. Install pycuda by `pip install pycuda`

-8. Download SDK C/cpp Library mmdeploy-1.0.0rc3-windows-amd64-cuda11.3.zip

+8. Download the SDK C/C++ library mmdeploy-1.0.0-windows-amd64-cuda11.3.zip

## Model Convert

@@ -141,7 +141,7 @@ After preparation work, the structure of the current working directory should be

```

..

-|-- mmdeploy-1.0.0rc3-windows-amd64

+|-- mmdeploy-1.0.0-windows-amd64

|-- mmclassification

|-- mmdeploy

`-- resnet18_8xb32_in1k_20210831-fbbb1da6.pth

@@ -189,7 +189,7 @@ After installation of mmdeploy-tensorrt prebuilt package, the structure of the c

```

..

-|-- mmdeploy-1.0.0rc3-windows-amd64-cuda11.3

+|-- mmdeploy-1.0.0-windows-amd64-cuda11.3

|-- mmclassification

|-- mmdeploy

`-- resnet18_8xb32_in1k_20210831-fbbb1da6.pth

@@ -252,8 +252,8 @@ The structure of current working directory:

```

.

-|-- mmdeploy-1.0.0rc3-windows-amd64-cuda11.1-tensorrt8.2.3.0

-|-- mmdeploy-1.0.0rc3-windows-amd64-onnxruntime1.8.1

+|-- mmdeploy-1.0.0-windows-amd64

+|-- mmdeploy-1.0.0-windows-amd64-cuda11.3

|-- mmclassification

|-- mmdeploy

|-- resnet18_8xb32_in1k_20210831-fbbb1da6.pth

@@ -324,7 +324,7 @@ The following describes how to use the SDK's C API for inference

It is recommended to use `CMD` here.

- Under `mmdeploy-1.0.0rc3-windows-amd64\\example\\cpp\\build\\Release` directory:

+ Under the `mmdeploy-1.0.0-windows-amd64\example\cpp\build\Release` directory:

```

.\image_classification.exe cpu C:\workspace\work_dir\onnx\resnet\ C:\workspace\mmclassification\demo\demo.JPEG

@@ -344,7 +344,7 @@ The following describes how to use the SDK's C API for inference

It is recommended to use `CMD` here.

- Under `mmdeploy-1.0.0rc3-windows-amd64-cuda11.3\\example\\cpp\\build\\Release` directory

+ Under the `mmdeploy-1.0.0-windows-amd64-cuda11.3\example\cpp\build\Release` directory:

```

.\image_classification.exe cuda C:\workspace\work_dir\trt\resnet C:\workspace\mmclassification\demo\demo.JPEG

diff --git a/docs/en/02-how-to-run/useful_tools.md b/docs/en/02-how-to-run/useful_tools.md

index ee69c51a2b..a402f2113d 100644

--- a/docs/en/02-how-to-run/useful_tools.md

+++ b/docs/en/02-how-to-run/useful_tools.md

@@ -83,7 +83,7 @@ python tools/onnx2pplnn.py \

- `onnx_path`: The path of the `ONNX` model to convert.

- `output_path`: The converted `PPLNN` algorithm path in json format.

- `device`: The device of the model during conversion.

-- `opt-shapes`: Optimal shapes for PPLNN optimization. The shape of each tensor should be wrap with "\[\]" or "()" and the shapes of tensors should be separated by ",".

+- `opt-shapes`: Optimal shapes for PPLNN optimization. The shape of each tensor should be wrapped in "[]" or "()", and the shapes of different tensors should be separated by ",".

- `--log-level`: To set log level which in `'CRITICAL', 'FATAL', 'ERROR', 'WARN', 'WARNING', 'INFO', 'DEBUG', 'NOTSET'`. If not specified, it will be set to `INFO`.
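The `opt-shapes` format described above can be illustrated with a small parser. This is a minimal sketch, assuming each shape is a list of integers wrapped in "[]" or "()" and that shapes are separated by commas (for example `[1,3,224,224],(1,3,320,320)`). The helper name `parse_opt_shapes` is hypothetical, and `tools/onnx2pplnn.py` may parse the argument differently.

```python
import re
from typing import List

# One shape wrapped in "[]" or "()", followed by a separating comma or the end
# of the string. Hypothetical helper, not the parser used by onnx2pplnn.py.
_SHAPE = re.compile(r"\s*(?:\[([^\[\]()]*)\]|\(([^\[\]()]*)\))\s*(?:,|$)")


def parse_opt_shapes(spec: str) -> List[List[int]]:
    """Parse "[1,3,224,224],(1,3,320,320)" into a list of integer shapes."""
    spec = spec.strip()
    shapes: List[List[int]] = []
    pos = 0
    while pos < len(spec):
        match = _SHAPE.match(spec, pos)
        if match is None:
            # Unbalanced brackets, mixed "[)" pairs, or a missing "," separator.
            raise ValueError(f"malformed shape list at position {pos}: {spec!r}")
        # Exactly one group is set, depending on whether "[]" or "()" was used.
        body = match.group(1) if match.group(1) is not None else match.group(2)
        shapes.append([int(dim) for dim in body.split(",") if dim.strip()])
        pos = match.end()
    return shapes


if __name__ == "__main__":
    print(parse_opt_shapes("[1,3,224,224],(1,3,320,320)"))
```

Scanning with an anchored `match` at an explicit position (rather than `findall`) is what lets the sketch reject stray text between shapes instead of silently skipping it.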

## onnx2tensorrt

diff --git a/docs/en/03-benchmark/benchmark.md b/docs/en/03-benchmark/benchmark.md

index 18ef2faa3b..933b8519f4 100644

--- a/docs/en/03-benchmark/benchmark.md

+++ b/docs/en/03-benchmark/benchmark.md

@@ -2009,7 +2009,7 @@ Users can directly test the performance through [how_to_evaluate_a_model.md](../


+

+## Highlights

+

+MMDeploy 1.x has been released and is adapted to the upstream codebases of OpenMMLab 2.0. Please **align the versions** when using it.

+The default branch has been switched from `master` to `main`. MMDeploy 0.x (`master`) will be deprecated, and new features will only be added to MMDeploy 1.x (`main`) in the future.

+

+| mmdeploy | mmengine | mmcv | mmdet | others |

+| :------: | :------: | :------: | :------: | :----: |

+| 0.x.y | - | \<=1.x.y | \<=2.x.y | 0.x.y |

+| 1.x.y | 0.x.y | 2.x.y | 3.x.y | 1.x.y |

+
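The version table above can be turned into a quick environment check. This is a minimal sketch, assuming the distribution names `mmdeploy`, `mmengine`, `mmcv` and `mmdet`, and comparing only major versions as the table does. `find_misaligned` and `installed_versions` are hypothetical helpers, not part of MMDeploy; note also that mmcv 1.x was often installed as `mmcv-full`, which this sketch does not look up.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, List, Tuple

# Allowed major versions (inclusive range) of upstream packages, keyed by the
# mmdeploy major version, transcribed from the table: "<=1.x.y" becomes (0, 1).
# mmengine is listed as "-" for mmdeploy 0.x, so it is not checked there.
COMPAT: Dict[int, Dict[str, Tuple[int, int]]] = {
    0: {"mmcv": (0, 1), "mmdet": (0, 2)},
    1: {"mmengine": (0, 0), "mmcv": (2, 2), "mmdet": (3, 3)},
}


def major(ver: str) -> int:
    """Major version of a string such as "1.0.0" or "2.0.0rc4"."""
    return int(ver.split(".")[0])


def find_misaligned(versions: Dict[str, str]) -> List[str]:
    """Return one message per package that breaks the table; [] if aligned."""
    deploy = versions["mmdeploy"]
    rules = COMPAT.get(major(deploy))
    if rules is None:
        return [f"no alignment rules for mmdeploy {deploy}"]
    problems = []
    for pkg, (low, high) in rules.items():
        if pkg not in versions:
            continue  # package not installed, nothing to compare
        if not low <= major(versions[pkg]) <= high:
            problems.append(f"{pkg} {versions[pkg]} is not aligned with mmdeploy {deploy}")
    return problems


def installed_versions(names: List[str]) -> Dict[str, str]:
    """Look up installed distribution versions, skipping missing ones."""
    found = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            pass
    return found


if __name__ == "__main__":
    current = installed_versions(["mmdeploy", "mmengine", "mmcv", "mmdet"])
    if "mmdeploy" not in current:
        print("mmdeploy is not installed")
    else:
        print(find_misaligned(current) or "versions are aligned")
```

Run it inside the environment used for model conversion; an empty result means the installed major versions match the table.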

## Introduction

MMDeploy is an open-source deep learning model deployment toolset. It is a part of the [OpenMMLab](https://openmmlab.com/) project.

@@ -73,24 +83,24 @@ The supported Device-Platform-InferenceBackend matrix is presented as following,

The benchmark can be found from [here](docs/en/03-benchmark/benchmark.md)

-| Device / Platform | Linux | Windows | macOS | Android |

-| ----------------- | ------------------------------------------------------------------------ | --------------------------------------- | -------- | ---------------- |

-| x86_64 CPU | ✔️ONNX Runtime
