Description

Expanded from this thread on Zulip.

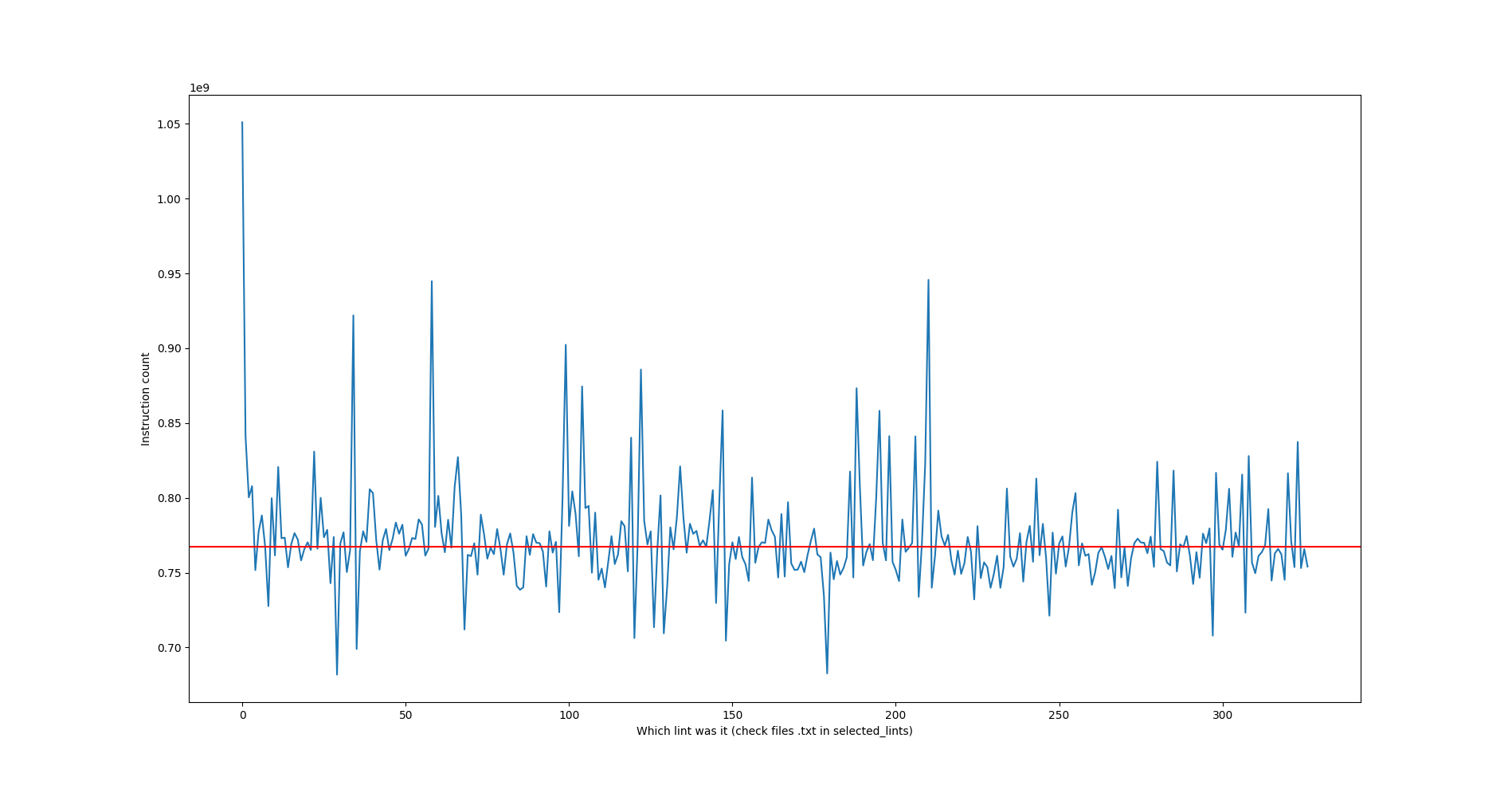

I've come up with a way to isolate lints and run each of them individually in a `lintcheck --perf` run. Here are the results:

The five lints with the highest impact are (in order of importance):

- `ctfe::ClippyCtfe` with 1.05e9 instructions
- `minmax::MinMaxPass` with 0.94e9
- `needless_late_init::NeedlessLateInit`
- `methods::Methods` with 0.92e9
- `explicit_write::ExplicitWrite` with 0.90e9

Here they are represented by the five biggest peaks:

Note that I could only run the experiment on 326 of the 344 existing passes because of problems with the experimental setup, so there may be other performance-intensive lints hidden among the remaining 18. Still, we can focus on fixing these five before any others.

Spiritual successor to #12188

HOW TO REPRODUCE

- Clone my `isolate-lints` branch (or manually copy-paste the changes into your branch).

Ideally, run this on a server, because the whole process takes roughly 10 hours of machine time, and keep background processes to a minimum so they don't skew the measurements.

- Run `pypy.py`. It extracts all passes from `clippy_lints/src/lib.rs` into about 350 files.
- Check the last file and remove the unnecessary `}`. If you don't, that last pass will fail to compile.
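The `pypy.py` splitting script itself isn't reproduced here. As a rough illustration of what that step involves, here is a minimal Python sketch (not the author's script). It assumes every pass registration in `lib.rs` starts with `store.register_early_pass(` or `store.register_late_pass(`, and the names `split_passes` and `write_pass_files` are hypothetical. Cutting from one registration to the start of the next also drags in whatever follows the last registration, such as the closing `}` of the enclosing function, which is one plausible source of the stray `}` in the last file; this sketch drops it.

```python
import re
from pathlib import Path

# Assumption: each pass registration starts on its own line with one of these calls.
REGISTRATION = re.compile(r"^\s*store\.register_(?:early|late)_pass\(", re.MULTILINE)


def split_passes(lib_rs: str) -> list[str]:
    """Split lib.rs into one chunk per pass registration (hypothetical helper)."""
    starts = [m.start() for m in REGISTRATION.finditer(lib_rs)]
    chunks = []
    for i, start in enumerate(starts):
        # Each chunk runs until the next registration (or the end of the file).
        end = starts[i + 1] if i + 1 < len(starts) else len(lib_rs)
        chunks.append(lib_rs[start:end].strip())
    if chunks:
        last = chunks[-1]
        # An unmatched trailing `}` belongs to the enclosing fn, not to the pass.
        if last.endswith("}") and last.count("{") < last.count("}"):
            chunks[-1] = last[: last.rfind("}")].rstrip()
    return chunks


def write_pass_files(chunks: list[str], out_dir: Path) -> None:
    """Write each chunk to its own file, numbered from 1.txt."""
    for n, chunk in enumerate(chunks, start=1):
        (out_dir / f"{n}.txt").write_text(chunk + "\n")
```

This naive split ignores any `let` bindings that sit between registrations; they would end up in the preceding chunk, so check the generated files before running the benchmark.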

Notice that `lib.rs` contains `include!(env!("SELECTED_LINTS_FILE"))`. We'll use that to choose, at compile time, which pass file gets included.

The following commands are written in bash; translate them into your preferred shell if needed.

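```bash
# Build and benchmark one pass file at a time, then delete target/release/
# so the next iteration rebuilds Clippy with the next pass.
for f in ./*.txt; do
    SELECTED_LINTS_FILE="../../$f" cargo lintcheck --perf --crates-toml=lintcheck/tokio_benchmark.toml && rm -r target/release/
done
```

This generates 344 `perf.data` files, which we now need to convert into readable scripts.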

```bash
# The output directory must exist before redirecting into it.
mkdir -p scripted
for f in ./perf.data*; do
    perf script -i "$f" > "scripted/$f.scripted"
done
```
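If bash isn't available, the two loops above can be sketched in Python with the standard library. This is a hypothetical translation, not part of the `isolate-lints` branch: `lintcheck_commands` and `run_all` are illustrative names, and it assumes, like the bash version, that it runs from the repository root where the `.txt` pass files live.

```python
import os
import shutil
import subprocess
from pathlib import Path

LINTCHECK = ["cargo", "lintcheck", "--perf", "--crates-toml=lintcheck/tokio_benchmark.toml"]


def lintcheck_commands(pass_files: list[Path]) -> list[tuple[dict[str, str], list[str]]]:
    """One (extra environment, argv) pair per pass file, as in the first bash loop."""
    # The path is resolved relative to clippy_lints/src/lib.rs, which sits
    # two directories below the repository root.
    return [({"SELECTED_LINTS_FILE": f"../../{f.name}"}, list(LINTCHECK)) for f in pass_files]


def run_all(repo_root: Path) -> None:
    # First loop: benchmark each pass in isolation.
    for extra_env, argv in lintcheck_commands(sorted(repo_root.glob("*.txt"))):
        result = subprocess.run(argv, cwd=repo_root, env={**os.environ, **extra_env})
        if result.returncode == 0:  # mirrors `&&`: only clean up after a successful run
            shutil.rmtree(repo_root / "target" / "release", ignore_errors=True)

    # Second loop: convert every perf.data file to text with `perf script`.
    scripted = repo_root / "scripted"
    scripted.mkdir(exist_ok=True)
    for data in sorted(repo_root.glob("perf.data*")):
        with open(scripted / f"{data.name}.scripted", "w") as out:
            subprocess.run(["perf", "script", "-i", str(data)], cwd=repo_root, stdout=out)
```

Calling `run_all(Path("."))` from the repository root would perform both steps; like the bash loops, a failed iteration does not stop the remaining ones.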

This generates about 344 `perf.x.scripted` files. You can now use `data-crunch/pypy.py` to get the results. Note that its output is off by one: if `python data-crunch/pypy.py` prints 37, look at `38.txt` to find which lint that corresponds to, because the file-making script starts numbering at `1.txt`.
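The `data-crunch/pypy.py` script isn't shown either. The following is a minimal Python sketch of the same idea, under two assumptions: that each sample header line in the `perf script` output carries a sample period followed by an `instructions` event name (check this against your own output and adjust the regex), and that the per-run totals are collected in run order with a 0-based index. `total_instructions` and `top_passes` are hypothetical names; the `i + 1` in `top_passes` encodes the off-by-one mapping to the pass files.

```python
import re

# Assumed sample-header shape, e.g. "cargo 1234 [002] 10.5:     250000 instructions:u: ..."
SAMPLE = re.compile(r"\s(\d+)\s+instructions\S*:")


def total_instructions(scripted_text: str) -> int:
    """Sum the sample periods (an approximate instruction count) in one scripted file."""
    return sum(int(m.group(1)) for m in SAMPLE.finditer(scripted_text))


def top_passes(totals: list[int], k: int = 5) -> list[tuple[str, int]]:
    """Rank runs by total and map each 0-based run index to its pass file.

    Pass files start at 1.txt, so run index i corresponds to f"{i + 1}.txt".
    """
    ranked = sorted(range(len(totals)), key=lambda i: totals[i], reverse=True)
    return [(f"{i + 1}.txt", totals[i]) for i in ranked[:k]]
```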