<xarray.DataArray 'zwattablrt' (time: 14608, y: 15360, x: 18432)> Size: 33TB +dask.array<getitem, shape=(14608, 15360, 18432), dtype=float64, chunksize=(224, 350, 350), chunktype=numpy.ndarray> +Coordinates: (3) +Attributes: (4)

+

+## What's next?

+

+flox' ability to do cleanly infer an optimal strategy relies entirely on the input chunking making such optimization possible.

+This is a big knob.

+A brand new [Xarray feature](https://docs.xarray.dev/en/stable/user-guide/groupby.html#grouper-objects) does make such rechunking

+a lot easier for time grouping in particular:

+

+```python

+from xarray.groupers import TimeResampler

+

+rechunked = ds.chunk(time=TimeResampler("YE"))

+```

+

+will rechunk so that a year of data is in a single chunk.

+Even so, it would be nice to automatically rechunk to minimize number of cohorts detected, or to a perfectly blockwise application when that's cheap.

+

+A challenge here is that we have lost _context_ when moving from Xarray to flox.

+The string `"time.month"` tells Xarray that I am grouping a perfectly periodic array with period 12; similarly

+the _string_ `"time.dayofyear"` tells Xarray that I am grouping by a (quasi-)periodic array with period 365, and that group `366` may occur occasionally (depending on calendar).

+But Xarray passes flox an array of integer group labels `[1, 2, 3, 4, 5, ..., 1, 2, 3, 4, 5]`.

+It's hard to infer the context from that!

+Though one approach might frame the problem as: what rechunking would transform `C` to a block diagonal matrix.

+_[Get in touch](https://github.com/xarray-contrib/flox/issues) if you have ideas for how to do this inference._

+

+One way to preserve context may be be to have Xarray's new Grouper objects report ["preferred chunks"](https://github.com/pydata/xarray/blob/main/design_notes/grouper_objects.md#the-preferred_chunks-method-) for a particular grouping.

+This would allow a downstream system like `flox` or `cubed` or `dask-expr` to take this in to account later (or even earlier!) in the pipeline.

+That is an experiment for another day.

+

+## Appendix: automatically detecting group patterns

+

+### Problem statement

+

+Fundamentally, we know:

+

+1. the data layout or chunking.

+2. the array we are grouping by, and can detect possible patterns in that array.

+

+We want to find all sets of groups that occupy similar sets of chunks.

+For groups `A,B,C,D` that occupy the following chunks (chunk 0 is the first chunk along the core-dimension or the axis of reduction)

+

+```

+A: [0, 1, 2]

+B: [1, 2, 3, 4]

+C: [5, 6, 7, 8]

+D: [8]

+X: [0, 4]

+```

+

+We want to detect the cohorts `{A,B,X}` and `{C, D}` with the following chunks.

+

+```

+[A, B, X]: [0, 1, 2, 3, 4]

+[C, D]: [5, 6, 7, 8]

+```

+

+Importantly, we do _not_ want to be dependent on detecting exact patterns, and prefer approximate solutions and heuristics.

+

+### The solution

+

+After a fun exploration involving such fun ideas as [locality-sensitive hashing](http://ekzhu.com/datasketch/lshensemble.html), and [all-pairs set similarity search](https://www.cse.unsw.edu.au/~lxue/WWW08.pdf), I settled on the following algorithm.

+

+I use set _containment_, or a "normalized intersection", to determine the similarity between the sets of chunks occupied by two different groups (`Q` and `X`).

+

+```

+C = |Q ∩ X| / |Q| ≤ 1; (∩ is set intersection)

+```

+

+Unlike [Jaccard similarity](https://en.wikipedia.org/wiki/Jaccard_index), _containment_ [isn't skewed](http://ekzhu.com/datasketch/lshensemble.html) when one of the sets is much larger than the other.

+

+The steps are as follows:

+

+1. First determine which labels are present in each chunk. The distribution of labels across chunks

+ is represented internally as a 2D boolean sparse array `S[chunks, labels]`. `S[i, j] = 1` when

+ label `j` is present in chunk `i`.

+1. Now invert `S` to compute an initial set of cohorts whose groups are in the same exact chunks (this is another groupby!).

+ Later we will want to merge together the detected cohorts when they occupy _approximately_ the same chunks, using the containment metric.

+1. Now we can quickly determine a number of special cases and exit early:

+ 1. Use `"blockwise"` when every group is contained to one block each.

+ 1. Use `"cohorts"` when

+ 1. every chunk only has a single group, but that group might extend across multiple chunks; and

+ 1. existing cohorts don't overlap at all.

+ 1. [and more](https://github.com/xarray-contrib/flox/blob/e6159a657c55fa4aeb31bcbcecb341a4849da9fe/flox/core.py#L408-L426)

+

+If we haven't exited yet, then we want to merge together any detected cohorts that substantially overlap with each other using the containment metric.

+

+1. For that we first quickly compute containment for all groups `i` against all other groups `j` as `C = S.T @ S / number_chunks_per_group`.

+1. To choose between `"map-reduce"` and `"cohorts"`, we need a summary measure of the degree to which the labels overlap with

+ each other. We use _sparsity_ --- the number of non-zero elements in `C` divided by the number of elements in `C`, `C.nnz/C.size`.

+ When sparsity is relatively high, we use `"map-reduce"`, otherwise we use `"cohorts"`.

+1. If the sparsity is low enough, we merge together similar cohorts using a for-loop.

+

+For more detail [see the docs](https://flox.readthedocs.io/en/latest/implementation.html#heuristics) or [the code](https://github.com/xarray-contrib/flox/blob/e6159a657c55fa4aeb31bcbcecb341a4849da9fe/flox/core.py#L336).

+Suggestions and improvements are very welcome!

+

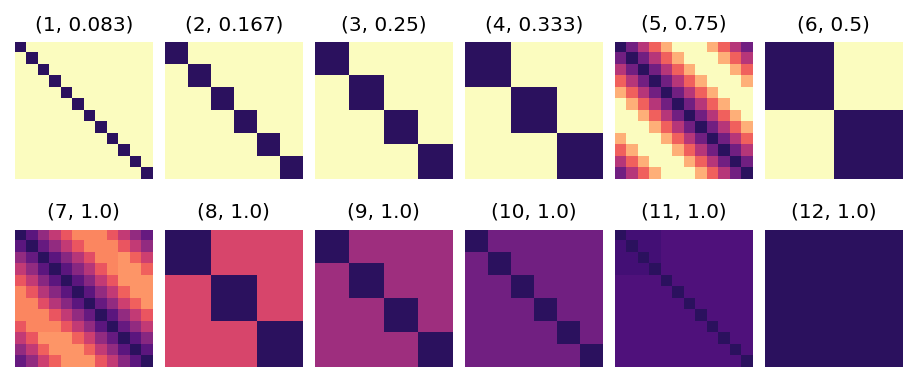

+Here is containment `C[i, j]` for a range of chunk sizes from 1 to 12 for computing `groupby("time.month")` of a monthly mean dataset.

+These panels are colored so that light yellow is `C=0`, and dark purple is `C=1`.

+`C[i,j] = 1` when the chunks occupied by group `i` perfectly overlaps with those occupied by group `j` (so the diagonal elements

+are always 1).

+Since there are 12 groups, `C` is a 12x12 matrix.

+The title on each image is `(chunk size, sparsity)`.

+

+

+When the chunksize _is_ a divisor of the period 12, `C` is a [block diagonal](https://en.wikipedia.org/wiki/Block_matrix) matrix.

+When the chunksize _is not_ a divisor of the period 12, `C` is much less sparse in comparison.

+Given the above `C`, flox will choose `"cohorts"` for chunk sizes (1, 2, 3, 4, 6), and `"map-reduce"` for the rest.

+

+Cool, isn't it?!

+

+Importantly this inference is fast — [~250ms for the US county](https://flox.readthedocs.io/en/latest/implementation.html#example-spatial-grouping) GroupBy problem in our [previous post](https://xarray.dev/blog/flox) where approximately 3000 groups are distributed over 2500 chunks; and ~1.25s for grouping by US watersheds ~87000 groups across 640 chunks.

+

+## What's next?

+

+flox' ability to do cleanly infer an optimal strategy relies entirely on the input chunking making such optimization possible.

+This is a big knob.

+A brand new [Xarray feature](https://docs.xarray.dev/en/stable/user-guide/groupby.html#grouper-objects) does make such rechunking

+a lot easier for time grouping in particular:

+

+```python

+from xarray.groupers import TimeResampler

+

+rechunked = ds.chunk(time=TimeResampler("YE"))

+```

+

+will rechunk so that a year of data is in a single chunk.

+Even so, it would be nice to automatically rechunk to minimize number of cohorts detected, or to a perfectly blockwise application when that's cheap.

+

+A challenge here is that we have lost _context_ when moving from Xarray to flox.

+The string `"time.month"` tells Xarray that I am grouping a perfectly periodic array with period 12; similarly

+the _string_ `"time.dayofyear"` tells Xarray that I am grouping by a (quasi-)periodic array with period 365, and that group `366` may occur occasionally (depending on calendar).

+But Xarray passes flox an array of integer group labels `[1, 2, 3, 4, 5, ..., 1, 2, 3, 4, 5]`.

+It's hard to infer the context from that!

+Though one approach might frame the problem as: what rechunking would transform `C` to a block diagonal matrix.

+_[Get in touch](https://github.com/xarray-contrib/flox/issues) if you have ideas for how to do this inference._

+

+One way to preserve context may be be to have Xarray's new Grouper objects report ["preferred chunks"](https://github.com/pydata/xarray/blob/main/design_notes/grouper_objects.md#the-preferred_chunks-method-) for a particular grouping.

+This would allow a downstream system like `flox` or `cubed` or `dask-expr` to take this in to account later (or even earlier!) in the pipeline.

+That is an experiment for another day.

+

+## Appendix: automatically detecting group patterns

+

+### Problem statement

+

+Fundamentally, we know:

+

+1. the data layout or chunking.

+2. the array we are grouping by, and can detect possible patterns in that array.

+

+We want to find all sets of groups that occupy similar sets of chunks.

+For groups `A,B,C,D` that occupy the following chunks (chunk 0 is the first chunk along the core-dimension or the axis of reduction)

+

+```

+A: [0, 1, 2]

+B: [1, 2, 3, 4]

+C: [5, 6, 7, 8]

+D: [8]

+X: [0, 4]

+```

+

+We want to detect the cohorts `{A,B,X}` and `{C, D}` with the following chunks.

+

+```

+[A, B, X]: [0, 1, 2, 3, 4]

+[C, D]: [5, 6, 7, 8]

+```

+

+Importantly, we do _not_ want to be dependent on detecting exact patterns, and prefer approximate solutions and heuristics.

+

+### The solution

+

+After a fun exploration involving such fun ideas as [locality-sensitive hashing](http://ekzhu.com/datasketch/lshensemble.html), and [all-pairs set similarity search](https://www.cse.unsw.edu.au/~lxue/WWW08.pdf), I settled on the following algorithm.

+

+I use set _containment_, or a "normalized intersection", to determine the similarity between the sets of chunks occupied by two different groups (`Q` and `X`).

+

+```

+C = |Q ∩ X| / |Q| ≤ 1; (∩ is set intersection)

+```

+

+Unlike [Jaccard similarity](https://en.wikipedia.org/wiki/Jaccard_index), _containment_ [isn't skewed](http://ekzhu.com/datasketch/lshensemble.html) when one of the sets is much larger than the other.

+

+The steps are as follows:

+

+1. First determine which labels are present in each chunk. The distribution of labels across chunks

+ is represented internally as a 2D boolean sparse array `S[chunks, labels]`. `S[i, j] = 1` when

+ label `j` is present in chunk `i`.

+1. Now invert `S` to compute an initial set of cohorts whose groups are in the same exact chunks (this is another groupby!).

+ Later we will want to merge together the detected cohorts when they occupy _approximately_ the same chunks, using the containment metric.

+1. Now we can quickly determine a number of special cases and exit early:

+ 1. Use `"blockwise"` when every group is contained to one block each.

+ 1. Use `"cohorts"` when

+ 1. every chunk only has a single group, but that group might extend across multiple chunks; and

+ 1. existing cohorts don't overlap at all.

+ 1. [and more](https://github.com/xarray-contrib/flox/blob/e6159a657c55fa4aeb31bcbcecb341a4849da9fe/flox/core.py#L408-L426)

+

+If we haven't exited yet, then we want to merge together any detected cohorts that substantially overlap with each other using the containment metric.

+

+1. For that we first quickly compute containment for all groups `i` against all other groups `j` as `C = S.T @ S / number_chunks_per_group`.

+1. To choose between `"map-reduce"` and `"cohorts"`, we need a summary measure of the degree to which the labels overlap with

+ each other. We use _sparsity_ --- the number of non-zero elements in `C` divided by the number of elements in `C`, `C.nnz/C.size`.

+ When sparsity is relatively high, we use `"map-reduce"`, otherwise we use `"cohorts"`.

+1. If the sparsity is low enough, we merge together similar cohorts using a for-loop.

+

+For more detail [see the docs](https://flox.readthedocs.io/en/latest/implementation.html#heuristics) or [the code](https://github.com/xarray-contrib/flox/blob/e6159a657c55fa4aeb31bcbcecb341a4849da9fe/flox/core.py#L336).

+Suggestions and improvements are very welcome!

+

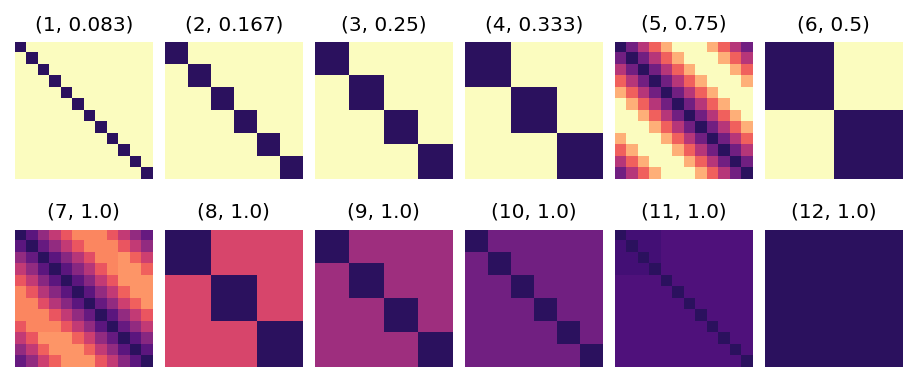

+Here is containment `C[i, j]` for a range of chunk sizes from 1 to 12 for computing `groupby("time.month")` of a monthly mean dataset.

+These panels are colored so that light yellow is `C=0`, and dark purple is `C=1`.

+`C[i,j] = 1` when the chunks occupied by group `i` perfectly overlaps with those occupied by group `j` (so the diagonal elements

+are always 1).

+Since there are 12 groups, `C` is a 12x12 matrix.

+The title on each image is `(chunk size, sparsity)`.

+

+

+When the chunksize _is_ a divisor of the period 12, `C` is a [block diagonal](https://en.wikipedia.org/wiki/Block_matrix) matrix.

+When the chunksize _is not_ a divisor of the period 12, `C` is much less sparse in comparison.

+Given the above `C`, flox will choose `"cohorts"` for chunk sizes (1, 2, 3, 4, 6), and `"map-reduce"` for the rest.

+

+Cool, isn't it?!

+

+Importantly this inference is fast — [~250ms for the US county](https://flox.readthedocs.io/en/latest/implementation.html#example-spatial-grouping) GroupBy problem in our [previous post](https://xarray.dev/blog/flox) where approximately 3000 groups are distributed over 2500 chunks; and ~1.25s for grouping by US watersheds ~87000 groups across 640 chunks.