ultralytics 8.0.108 add Meituan YOLOv6 models (ultralytics#2811)

Co-authored-by: Michael Currie

Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com>

Co-authored-by: Hicham Talaoubrid

Co-authored-by: Zlobin Vladimir

Co-authored-by: Szymon Mikler

1 parent 07b57c0, commit ffc0e8c. Showing 18 changed files with 233 additions and 45 deletions.

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,81 @@ | ||

| --- | ||

| comments: true | ||

| description: Discover Meituan YOLOv6, a robust real-time object detector. Learn how to utilize pre-trained models with Ultralytics Python API for a variety of tasks. | ||

| --- | ||

|

|

||

| # Meituan YOLOv6 | ||

|

|

||

| ## Overview | ||

|

|

||

| [Meituan](https://about.meituan.com/) YOLOv6 is a cutting-edge object detector that offers a remarkable balance between speed and accuracy, making it a popular choice for real-time applications. This model introduces several notable enhancements to its architecture and training scheme, including a Bi-directional Concatenation (BiC) module, an anchor-aided training (AAT) strategy, and an improved backbone and neck design for state-of-the-art accuracy on the COCO dataset. | ||

|

|

||

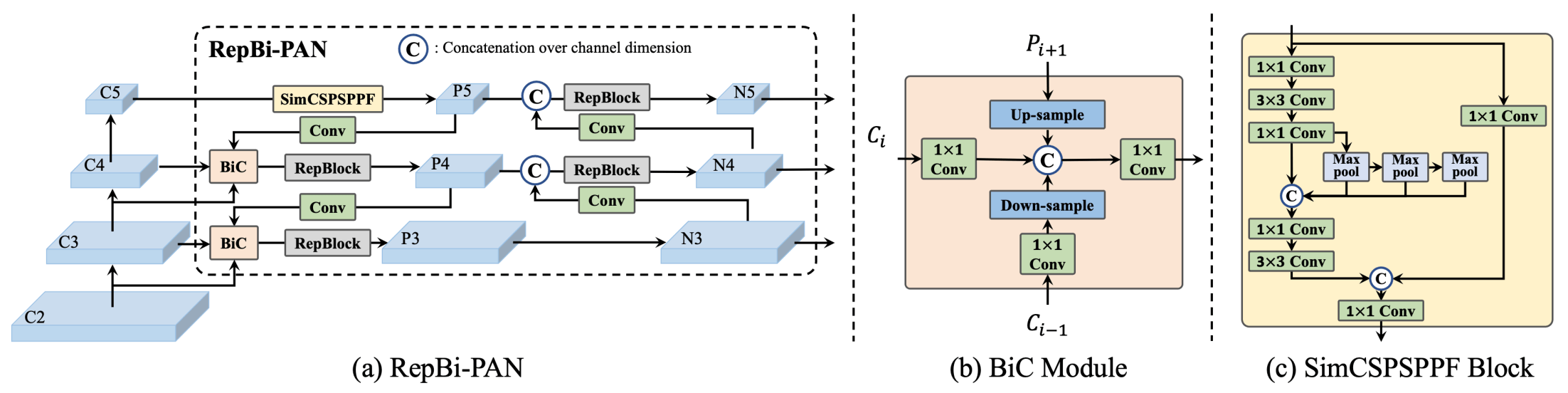

|  | ||

|  | ||

| **Overview of YOLOv6.** Model architecture diagram showing the redesigned network components and training strategies that have led to significant performance improvements. (a) The neck of YOLOv6 (N and S are shown). Note that for M/L, RepBlocks are replaced with CSPStackRep. (b) The | ||

| structure of a BiC module. (c) A SimCSPSPPF block. ([source](https://arxiv.org/pdf/2301.05586.pdf)). | ||

|

|

||

| ### Key Features | ||

|

|

||

| - **Bi-directional Concatenation (BiC) Module:** YOLOv6 introduces a BiC module in the neck of the detector, enhancing localization signals and delivering performance gains with negligible speed degradation. | ||

| - **Anchor-Aided Training (AAT) Strategy:** YOLOv6 proposes AAT, which combines the benefits of the anchor-based and anchor-free paradigms without compromising inference efficiency. | ||

| - **Enhanced Backbone and Neck Design:** By deepening YOLOv6 with an additional stage in the backbone and neck, this model achieves state-of-the-art performance on the COCO dataset with high-resolution input. | ||

| - **Self-Distillation Strategy:** A new self-distillation strategy is implemented to boost the performance of smaller models of YOLOv6, enhancing the auxiliary regression branch during training and removing it at inference to avoid a marked speed decline. | ||

|

|

||

| ## Pre-trained Models | ||

|

|

||

| YOLOv6 provides various pre-trained models with different scales: | ||

|

|

||

| - YOLOv6-N: 37.5% AP on COCO val2017 at 1187 FPS with NVIDIA Tesla T4 GPU. | ||

| - YOLOv6-S: 45.0% AP at 484 FPS. | ||

| - YOLOv6-M: 50.0% AP at 226 FPS. | ||

| - YOLOv6-L: 52.8% AP at 116 FPS. | ||

| - YOLOv6-L6: State-of-the-art accuracy in real-time. | ||

|

|

||

| YOLOv6 also provides quantized models for different precisions and models optimized for mobile platforms. | ||

|

|

||

| ## Usage | ||

|

|

||

| ### Python API | ||

|

|

||

| ```python | ||

| from ultralytics import YOLO | ||

|

|

||

| model = YOLO("yolov6n.yaml") # build new model from scratch | ||

| model.info() # display model information | ||

| model.predict("path/to/image.jpg") # predict | ||

| ``` | ||

|

|

||

| ### Supported Tasks | ||

|

|

||

| | Model Type | Pre-trained Weights | Tasks Supported | | ||

| |------------|---------------------|------------------| | ||

| | YOLOv6-N | `yolov6-n.pt` | Object Detection | | ||

| | YOLOv6-S | `yolov6-s.pt` | Object Detection | | ||

| | YOLOv6-M | `yolov6-m.pt` | Object Detection | | ||

| | YOLOv6-L | `yolov6-l.pt` | Object Detection | | ||

| | YOLOv6-L6 | `yolov6-l6.pt` | Object Detection | | ||

|

|

||

| ## Supported Modes | ||

|

|

||

| | Mode | Supported | | ||

| |------------|--------------------| | ||

| | Inference | :heavy_check_mark: | | ||

| | Validation | :heavy_check_mark: | | ||

| | Training | :heavy_check_mark: | | ||

|

|

||

| ## Citations and Acknowledgements | ||

|

|

||

| We would like to acknowledge the authors for their significant contributions to the field of real-time object detection: | ||

|

|

||

| ```bibtex | ||

| @misc{li2023yolov6, | ||

| title={YOLOv6 v3.0: A Full-Scale Reloading}, | ||

| author={Chuyi Li and Lulu Li and Yifei Geng and Hongliang Jiang and Meng Cheng and Bo Zhang and Zaidan Ke and Xiaoming Xu and Xiangxiang Chu}, | ||

| year={2023}, | ||

| eprint={2301.05586}, | ||

| archivePrefix={arXiv}, | ||

| primaryClass={cs.CV} | ||

| } | ||

| ``` | ||

|

|

||

| The original YOLOv6 paper can be found on [arXiv](https://arxiv.org/abs/2301.05586). The authors have made their work publicly available, and the codebase can be accessed on [GitHub](https://github.com/meituan/YOLOv6). We appreciate their efforts in advancing the field and making their work accessible to the broader community. |
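
The throughput figures quoted under "Pre-trained Models" can be easier to compare when expressed as time per image. The following is a minimal sketch of that conversion, using only the FPS values listed in the document. The helper name `ms_per_image` is hypothetical, and the result is an amortized per-image time: the document does not state the batch size or precision behind the FPS numbers, so it should not be read as single-image latency.

```python
# FPS values as quoted in the "Pre-trained Models" list (NVIDIA Tesla T4).
# YOLOv6-L6 is omitted because the document gives no FPS figure for it.
REPORTED_FPS = {
    "YOLOv6-N": 1187,
    "YOLOv6-S": 484,
    "YOLOv6-M": 226,
    "YOLOv6-L": 116,
}


def ms_per_image(fps: float) -> float:
    """Convert throughput in frames per second to milliseconds per image.

    Hypothetical helper for illustration; assumes throughput is steady so
    that time per image is simply the reciprocal of FPS.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


for name, fps in REPORTED_FPS.items():
    print(f"{name}: {fps} FPS is roughly {ms_per_image(fps):.2f} ms per image")
```

Read this way, the trade-off in the list becomes a time budget: each step up in model size buys accuracy at the cost of more milliseconds per image.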

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,51 @@ | ||

| # Ultralytics YOLO 🚀, AGPL-3.0 license | ||

| # YOLOv6 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect | ||

|

|

||

| # Parameters | ||

| act: nn.ReLU() | ||

| nc: 80 # number of classes | ||

| scales: # model compound scaling constants, i.e. 'model=yolov6n.yaml' will call yolov6.yaml with scale 'n' | ||

| # [depth, width, max_channels] | ||

| n: [ 0.33, 0.25, 1024 ] | ||

| s: [ 0.33, 0.50, 1024 ] | ||

| m: [ 0.67, 0.75, 768 ] | ||

| l: [ 1.00, 1.00, 512 ] | ||

| x: [ 1.00, 1.25, 512 ] | ||

|

|

||

| # YOLOv6-3.0s backbone | ||

| backbone: | ||

| # [from, repeats, module, args] | ||

| - [ -1, 1, Conv, [ 64, 3, 2 ] ] # 0-P1/2 | ||

| - [ -1, 1, Conv, [ 128, 3, 2 ] ] # 1-P2/4 | ||

| - [ -1, 6, Conv, [ 128, 3, 1 ] ] | ||

| - [ -1, 1, Conv, [ 256, 3, 2 ] ] # 3-P3/8 | ||

| - [ -1, 12, Conv, [ 256, 3, 1 ] ] | ||

| - [ -1, 1, Conv, [ 512, 3, 2 ] ] # 5-P4/16 | ||

| - [ -1, 18, Conv, [ 512, 3, 1 ] ] | ||

| - [ -1, 1, Conv, [ 1024, 3, 2 ] ] # 7-P5/32 | ||

| - [ -1, 9, Conv, [ 1024, 3, 1 ] ] | ||

| - [ -1, 1, SPPF, [ 1024, 5 ] ] # 9 | ||

|

|

||

| # YOLOv6-3.0s head | ||

| head: | ||

| - [ -1, 1, nn.ConvTranspose2d, [ 256, 2, 2, 0 ] ] | ||

| - [ [ -1, 6 ], 1, Concat, [ 1 ] ] # cat backbone P4 | ||

| - [ -1, 1, Conv, [ 256, 3, 1 ] ] | ||

| - [ -1, 9, Conv, [ 256, 3, 1 ] ] # 13 | ||

|

|

||

| - [ -1, 1, nn.ConvTranspose2d, [ 128, 2, 2, 0 ] ] | ||

| - [ [ -1, 4 ], 1, Concat, [ 1 ] ] # cat backbone P3 | ||

| - [ -1, 1, Conv, [ 128, 3, 1 ] ] | ||

| - [ -1, 9, Conv, [ 128, 3, 1 ] ] # 17 | ||

|

|

||

| - [ -1, 1, Conv, [ 128, 3, 2 ] ] | ||

| - [ [ -1, 12 ], 1, Concat, [ 1 ] ] # cat head P4 | ||

| - [ -1, 1, Conv, [ 256, 3, 1 ] ] | ||

| - [ -1, 9, Conv, [ 256, 3, 1 ] ] # 21 | ||

|

|

||

| - [ -1, 1, Conv, [ 256, 3, 2 ] ] | ||

| - [ [ -1, 9 ], 1, Concat, [ 1 ] ] # cat head P5 | ||

| - [ -1, 1, Conv, [ 512, 3, 1 ] ] | ||

| - [ -1, 9, Conv, [ 512, 3, 1 ] ] # 25 | ||

|

|

||

| - [ [ 17, 21, 25 ], 1, Detect, [ nc ] ] # Detect(P3, P4, P5) |
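
To make the `scales` block more concrete, here is a rough sketch of how `[depth, width, max_channels]` constants of this kind can be applied to the `repeats` and channel arguments in the layer list. The rounding rules below (repeats multiplied by depth and rounded, channels capped at `max_channels`, multiplied by width, then rounded up to a multiple of 8) are an assumption about how the model parser behaves, not something this diff shows, and the helper names are hypothetical. The script is standalone and does not import `ultralytics`.

```python
import math

# Scaling constants copied from the `scales` block in the YAML above:
# scale name -> (depth, width, max_channels)
SCALES = {
    "n": (0.33, 0.25, 1024),
    "s": (0.33, 0.50, 1024),
    "m": (0.67, 0.75, 768),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.25, 512),
}

# (repeats, output channels) of the backbone Conv layers 0-8 in the YAML above.
BACKBONE_CONVS = [
    (1, 64), (1, 128), (6, 128), (1, 256), (12, 256),
    (1, 512), (18, 512), (1, 1024), (9, 1024),
]


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor (assumed rule)."""
    return math.ceil(x / divisor) * divisor


def scaled_repeats(repeats: int, depth: float) -> int:
    """Scale a repeat count by the depth multiple; single layers stay single."""
    return max(round(repeats * depth), 1) if repeats > 1 else repeats


def scaled_channels(channels: int, width: float, max_channels: int) -> int:
    """Cap channels at max_channels, apply the width multiple, round up to 8."""
    return make_divisible(min(channels, max_channels) * width)


def scaled_backbone(scale: str) -> list:
    """Return (repeats, channels) per backbone Conv layer for a given scale."""
    depth, width, max_channels = SCALES[scale]
    return [
        (scaled_repeats(r, depth), scaled_channels(c, width, max_channels))
        for r, c in BACKBONE_CONVS
    ]


for scale in SCALES:
    print(scale, scaled_backbone(scale))
```

Under these assumptions, the `n` scale turns the deepest stage (`9` repeats, `1024` channels) into 3 repeats of 256 channels, while the `m` scale caps the same stage at 768 channels before applying its width multiple.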
