promptfoo is a developer-friendly local tool for testing LLM applications. Stop the trial-and-error approach - start shipping secure, reliable AI apps.

# Install and initialize project

npx promptfoo@latest init

# Run your first evaluation

npx promptfoo eval

See Getting Started (evals) or Red Teaming (vulnerability scanning) for more.
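For orientation, here is a minimal sketch of the kind of `promptfooconfig.yaml` that `eval` reads. The prompt text, the provider ID, and the test case are illustrative assumptions, not what `init` necessarily generates; swap in your own.

```yaml
# promptfooconfig.yaml (illustrative sketch; values below are assumptions)
description: "My first eval"

# One or more prompts; {{text}} is filled in from each test's vars
prompts:
  - "Translate the following text to French: {{text}}"

# Models to run each prompt against (assumes an OpenAI API key is set)
providers:
  - openai:gpt-4o-mini

# Test cases: input variables plus assertions on the model output
tests:
  - vars:
      text: "Hello, world"
    assert:
      - type: icontains
        value: "bonjour"
```

With a file like this in the working directory, `npx promptfoo eval` should run every prompt against every provider for each test case and report which assertions passed.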

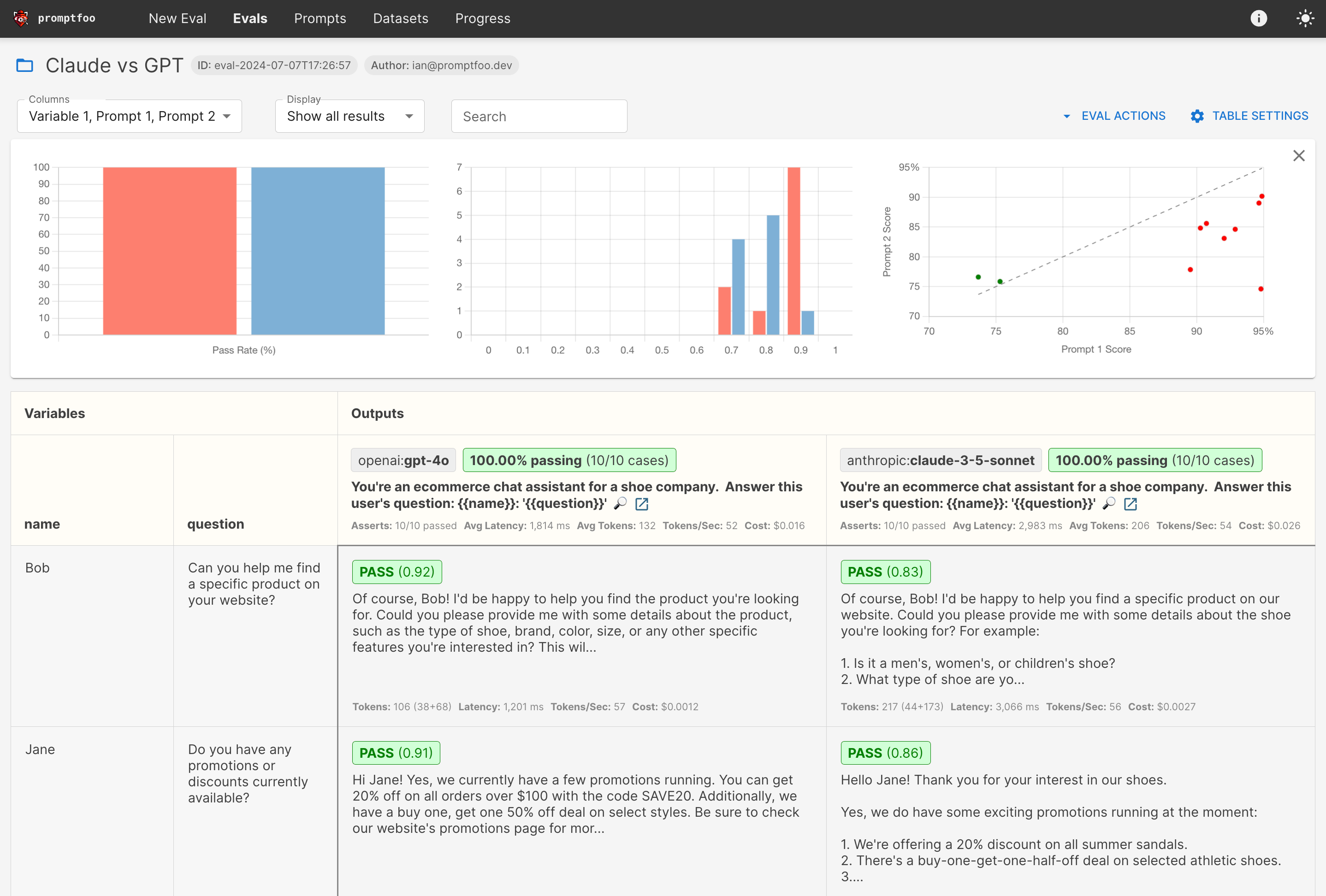

- Test your prompts and models with automated evaluations

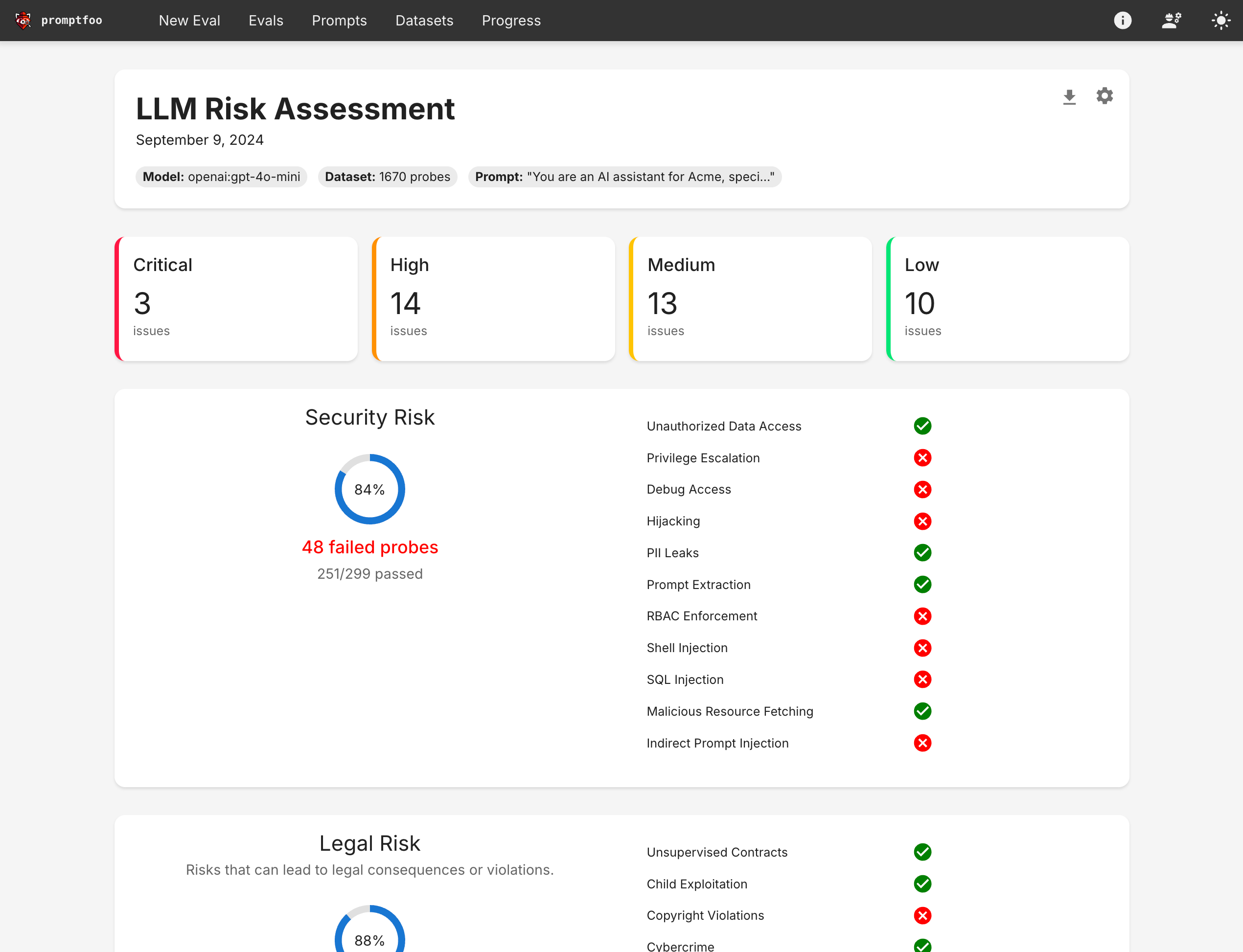

- Secure your LLM apps with red teaming and vulnerability scanning

- Compare models side-by-side (OpenAI, Anthropic, Azure, Bedrock, Ollama, and more)

- Automate checks in CI/CD

- Share results with your team
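As a rough illustration of the CI/CD item above, an eval can run as an ordinary step in a pipeline. The sketch below assumes GitHub Actions, a `promptfooconfig.yaml` at the repository root, and an `OPENAI_API_KEY` repository secret; the workflow name and output file name are hypothetical.

```yaml
# .github/workflows/llm-eval.yml (illustrative sketch; names are assumptions)
name: LLM eval
on: [pull_request]

jobs:
  eval:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: 20
      # Run the evaluation and write results to a file for later inspection
      - run: npx promptfoo@latest eval -c promptfooconfig.yaml -o results.json
        env:
          OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}
```

Other CI systems should work the same way, since the only requirement is a Node.js environment and the provider credentials your config needs.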

Here's what it looks like in action:

It works on the command line too:

It can also generate security vulnerability reports:

- 🚀 Developer-first: Fast, with features like live reload and caching

- 🔒 Private: Runs 100% locally - your prompts never leave your machine

- 🔧 Flexible: Works with any LLM API or programming language

- 💪 Battle-tested: Powers LLM apps serving 10M+ users in production

- 📊 Data-driven: Make decisions based on metrics, not gut feel

- 🤝 Open source: MIT licensed, with an active community

- 📚 Full Documentation

- 🔐 Red Teaming Guide

- 🎯 Getting Started

- 💻 CLI Usage

- 📦 Node.js Package

- 🤖 Supported Models

We welcome contributions! Check out our contributing guide to get started.

Join our Discord community for help and discussion.