A Docker-powered microservice for intelligent PDF document layout analysis, OCR, and content extraction

Built with ❤️ by HURIDOCS

⭐ Star us on GitHub • 🐳 Pull from Docker Hub • 🤗 View on Hugging Face

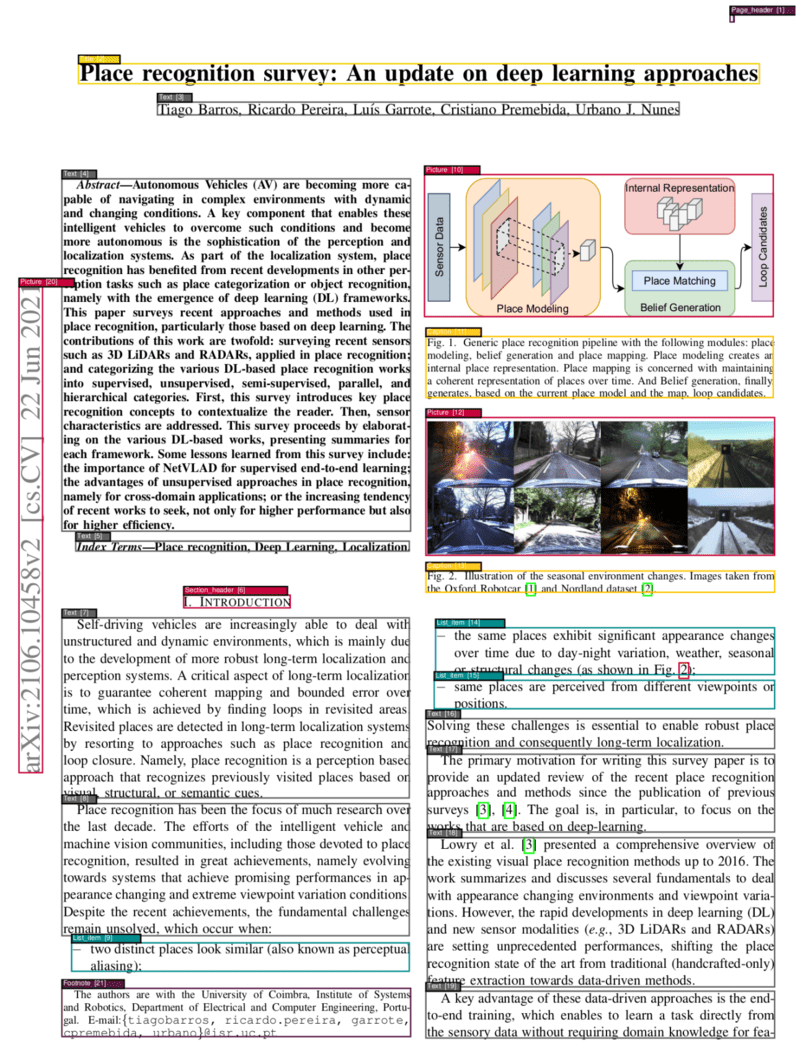

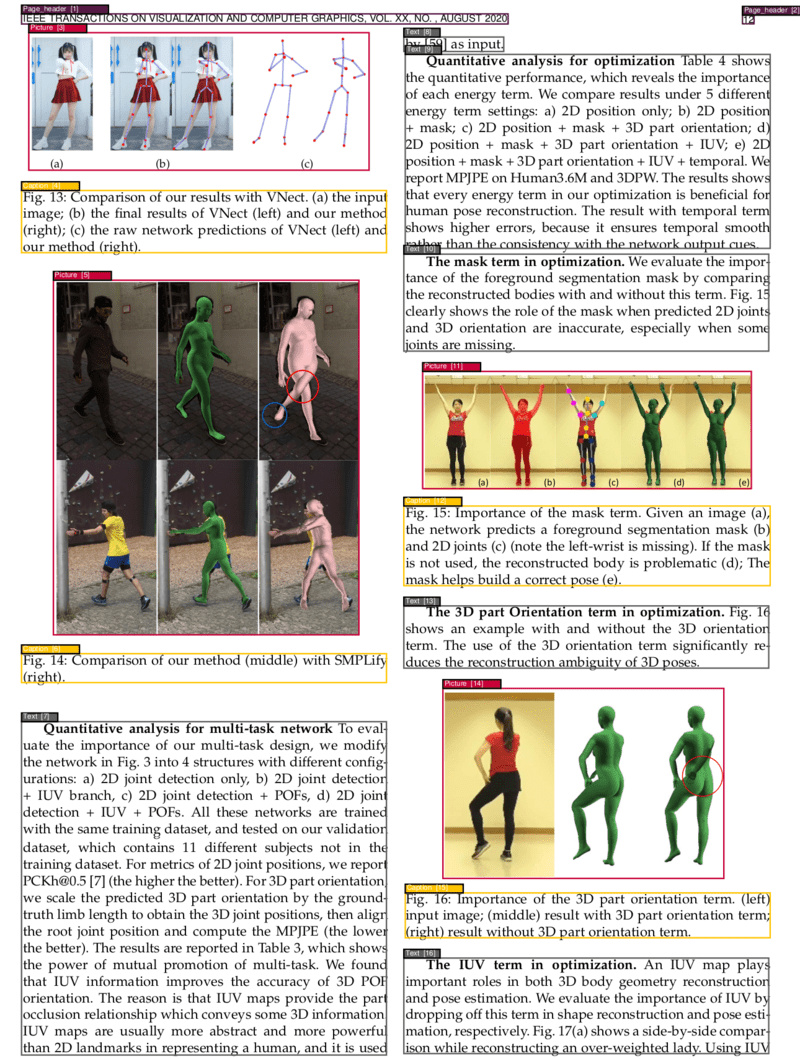

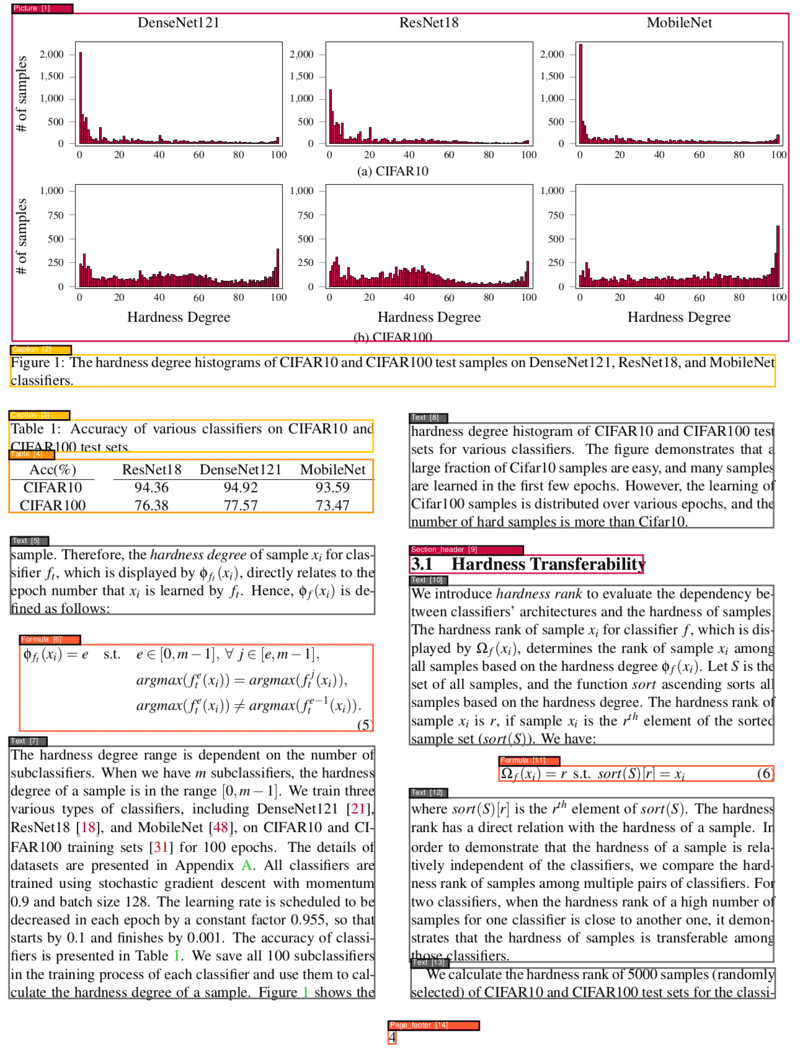

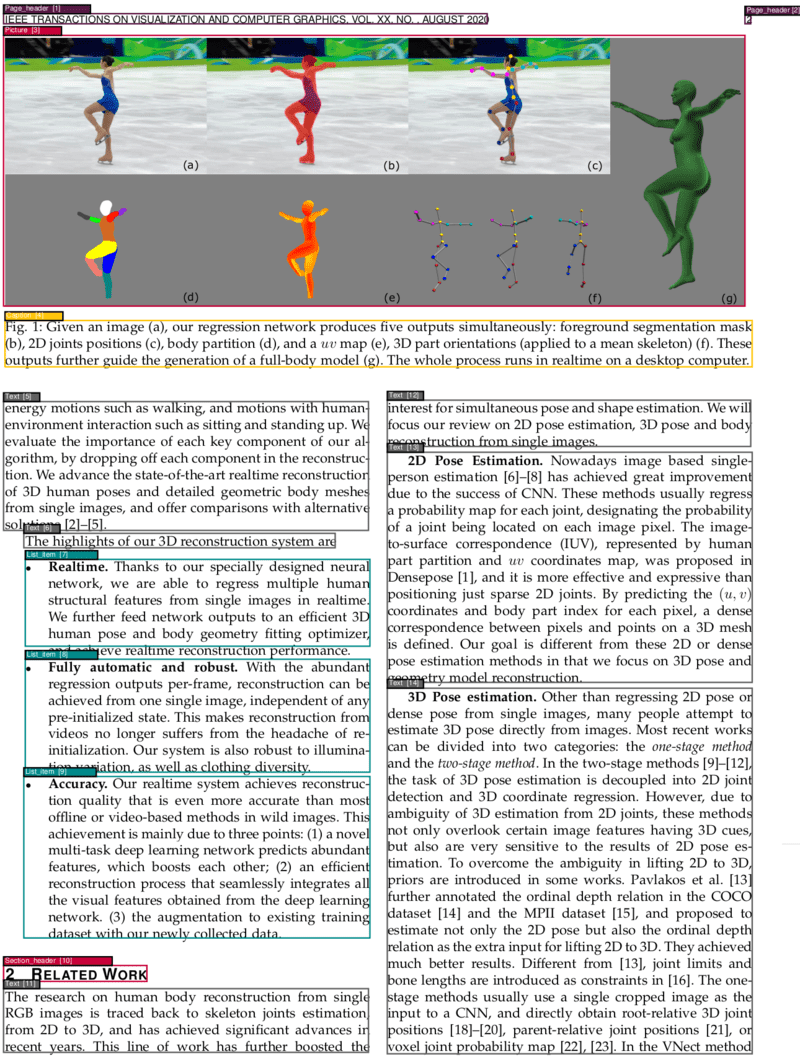

This project provides a powerful and flexible PDF analysis microservice built with Clean Architecture principles. The service performs OCR, segmentation, and classification of the different parts of PDF pages, identifying elements such as text, titles, pictures, tables, and formulas. It also determines the correct reading order of the identified elements and can convert PDFs to other formats, including Markdown and HTML.

- 🔍 Advanced PDF Layout Analysis - Segment and classify PDF content with high accuracy

- 🖼️ Visual & Fast Models - Choose between VGT (Vision Grid Transformer) for accuracy or LightGBM for speed

- 📝 Multi-format Output - Export to JSON, Markdown, HTML, and visualize PDF segmentations

- 🌐 OCR Support - 150+ language support with Tesseract OCR

- 📊 Table & Formula Extraction - Extract tables as HTML and formulas as LaTeX

- 🏗️ Clean Architecture - Modular, testable, and maintainable codebase

- 🐳 Docker-Ready - Easy deployment with GPU support

- ⚡ RESTful API - Comprehensive API with 10+ endpoints


- GitHub: pdf-document-layout-analysis

- HuggingFace: pdf-document-layout-analysis

- DockerHub: pdf-document-layout-analysis

With GPU support (recommended for better performance):

make start

Without GPU support:

make start_no_gpu

The service will be available at http://localhost:5060

Check service status:

curl http://localhost:5060/info

Analyze a PDF document (VGT model - high accuracy):

curl -X POST -F 'file=@/path/to/your/document.pdf' http://localhost:5060

Fast analysis (LightGBM models - faster processing):

curl -X POST -F 'file=@/path/to/your/document.pdf' -F "fast=true" http://localhost:5060

Stop the service:

make stop

💡 Tip: Replace /path/to/your/document.pdf with the actual path to your PDF file. The service will return a JSON response with segmented content and metadata.
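The curl calls above can also be issued from Python. The following is a minimal sketch using only the standard library; the helper names `build_multipart` and `analyze_pdf` are illustrative and not part of the service, and the response is assumed to be the JSON list of segments described in this README.

```python
import json
import urllib.request
import uuid
from pathlib import Path

BASE_URL = "http://localhost:5060"  # default address from the quick start


def build_multipart(fields: dict[str, str], file_name: str, file_bytes: bytes) -> tuple[bytes, str]:
    """Encode form fields plus one PDF under the 'file' key as multipart/form-data."""
    boundary = uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"\r\n\r\n{value}\r\n'.encode()
        )
    file_header = (
        f'--{boundary}\r\nContent-Disposition: form-data; name="file"; '
        f'filename="{file_name}"\r\nContent-Type: application/pdf\r\n\r\n'
    ).encode()
    parts.append(file_header + file_bytes + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode())
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


def analyze_pdf(pdf_path: str, fast: bool = False, base_url: str = BASE_URL) -> list[dict]:
    """POST a PDF to the root endpoint and return the decoded JSON response."""
    path = Path(pdf_path)
    fields = {"fast": "true"} if fast else {}
    body, content_type = build_multipart(fields, path.name, path.read_bytes())
    request = urllib.request.Request(
        base_url + "/", data=body, headers={"Content-Type": content_type}, method="POST"
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With the service running, `analyze_pdf("/path/to/your/document.pdf", fast=True)` would mirror the second curl command.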

- 🚀 Quick Start

- ⚙️ Dependencies

- 📋 Requirements

- 📚 API Reference

- 💡 Usage Examples

- 🏗️ Architecture

- 🤖 Models

- 📊 Data

- 🔧 Development

- 📈 Benchmarks

- 🌐 Installation of More Languages for OCR

- 🔗 Related Services

- 🤝 Contributing

- Docker Desktop 4.25.0+ - Installation Guide

- Python 3.10+ (for local development)

- NVIDIA Container Toolkit - Installation Guide (for GPU support)

- RAM: 2 GB minimum

- GPU Memory: 5 GB (optional, falls back to CPU if unavailable)

- Disk Space: 10 GB for models and dependencies

- CPU: Multi-core recommended for better performance

- Docker Engine 20.10+

- Docker Compose 2.0+

The service provides a comprehensive RESTful API with the following endpoints:

| Endpoint | Method | Description | Parameters |
|---|---|---|---|
| / | POST | Analyze PDF layout and extract segments | file, fast, ocr_tables |
| /save_xml/{filename} | POST | Analyze PDF and save XML output | file, xml_file_name, fast |
| /get_xml/{filename} | GET | Retrieve saved XML analysis | xml_file_name |

| Endpoint | Method | Description | Parameters |
|---|---|---|---|
| /text | POST | Extract text by content types | file, fast, types |
| /toc | POST | Extract table of contents | file, fast |
| /toc_legacy_uwazi_compatible | POST | Extract TOC (Uwazi compatible) | file |

| Endpoint | Method | Description | Parameters |
|---|---|---|---|
| /markdown | POST | Convert PDF to Markdown (includes segmentation data in zip) | file, fast, extract_toc, dpi, output_file |
| /html | POST | Convert PDF to HTML (includes segmentation data in zip) | file, fast, extract_toc, dpi, output_file |
| /visualize | POST | Visualize segmentation results on the PDF | file, fast |

| Endpoint | Method | Description | Parameters |
|---|---|---|---|
| /ocr | POST | Apply OCR to PDF | file, language |
| /info | GET | Get service information | - |
| / | GET | Health check and system info | - |
| /error | GET | Test error handling | - |

- file: PDF file to process (multipart/form-data)

- fast: Use LightGBM models instead of VGT (boolean, default: false)

- ocr_tables: Apply OCR to table regions (boolean, default: false)

- language: OCR language code (string, default: "en")

- types: Comma-separated content types to extract (string, default: "all")

- extract_toc: Include table of contents at the beginning of the output (boolean, default: false)

- dpi: Image resolution for conversion (integer, default: 120)
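To keep the documented defaults in one place on the client side, the parameters can be modelled as a small dataclass. This is only a sketch: the class name `AnalysisOptions` and its method are hypothetical, and the lowercase "true"/"false" rendering is an assumption based on the curl examples in this README.

```python
from dataclasses import dataclass


@dataclass
class AnalysisOptions:
    """Form parameters with the defaults listed above (illustrative class)."""

    fast: bool = False
    ocr_tables: bool = False
    language: str = "en"
    types: str = "all"
    extract_toc: bool = False
    dpi: int = 120

    def to_form_fields(self) -> dict[str, str]:
        """Return only the values that differ from the defaults, as form strings."""
        defaults = AnalysisOptions()
        fields = {}
        for name, value in vars(self).items():
            if value == getattr(defaults, name):
                continue
            # Booleans become lowercase 'true'/'false', as in the curl examples.
            fields[name] = str(value).lower() if isinstance(value, bool) else str(value)
        return fields
```

Not every endpoint accepts every parameter, so a caller would still pick the fields that apply to the endpoint being used (see the endpoint tables).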

Standard analysis with VGT model:

curl -X POST \
  -F 'file=@document.pdf' \
  http://localhost:5060

Fast analysis with LightGBM models:

curl -X POST \
  -F 'file=@document.pdf' \
  -F 'fast=true' \
  http://localhost:5060

Analysis with table OCR:

curl -X POST \
  -F 'file=@document.pdf' \
  -F 'ocr_tables=true' \
  http://localhost:5060

Extract all text:

curl -X POST \
  -F 'file=@document.pdf' \
  -F 'types=all' \
  http://localhost:5060/text

Extract specific content types:

curl -X POST \
  -F 'file=@document.pdf' \
  -F 'types=title,text,table' \
  http://localhost:5060/text

Convert to Markdown:

curl -X POST http://localhost:5060/markdown \
  -F 'file=@document.pdf' \
  -F 'extract_toc=true' \
  -F 'output_file=document.md' \
  --output 'document.zip'

Convert to HTML:

curl -X POST http://localhost:5060/html \
  -F 'file=@document.pdf' \
  -F 'extract_toc=true' \
  -F 'output_file=document.html' \
  --output 'document.zip'

📋 Segmentation Data: Format conversion endpoints automatically include detailed segmentation data in the zip output. The resulting zip file contains a {filename}_segmentation.json file with information about each detected document segment, including:

- Coordinates: left, top, width, height

- Page information: page_number, page_width, page_height

- Content: text content and segment type (e.g., "Title", "Text", "Table", "Picture")
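Reading the segmentation data back out of the downloaded zip could look like the sketch below. It assumes the archive holds exactly one entry whose name ends in `_segmentation.json` and that the entry is a JSON list of segment objects; the function name `read_segmentation` is illustrative.

```python
import io
import json
import zipfile


def read_segmentation(zip_bytes: bytes) -> list[dict]:
    """Return the parsed segments from the first *_segmentation.json entry."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for name in archive.namelist():
            if name.endswith("_segmentation.json"):
                return json.loads(archive.read(name))
    raise FileNotFoundError("no *_segmentation.json entry in the archive")
```

For a file saved with `--output 'document.zip'`, the call would be `read_segmentation(open("document.zip", "rb").read())`.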

OCR in English:

curl -X POST \
  -F 'file=@scanned_document.pdf' \
  -F 'language=en' \
  http://localhost:5060/ocr \
  --output ocr_processed.pdf

OCR in other languages:

# French
curl -X POST \
  -F 'file=@document_french.pdf' \
  -F 'language=fr' \
  http://localhost:5060/ocr \
  --output ocr_french.pdf

# Spanish
curl -X POST \
  -F 'file=@document_spanish.pdf' \
  -F 'language=es' \
  http://localhost:5060/ocr \
  --output ocr_spanish.pdf

Generate visualization PDF:

curl -X POST \
  -F 'file=@document.pdf' \
  http://localhost:5060/visualize \
  --output visualization.pdf

Extract structured TOC:

curl -X POST \
  -F 'file=@document.pdf' \
  http://localhost:5060/toc

Analyze and save XML:

curl -X POST \
  -F 'file=@document.pdf' \
  http://localhost:5060/save_xml/my_analysis

Retrieve saved XML:

curl http://localhost:5060/get_xml/my_analysis.xml

Get service info and supported languages:

curl http://localhost:5060/info

Health check:

curl http://localhost:5060/

Most endpoints return JSON with segment information:

[
  {
    "left": 72.0,
    "top": 84.0,
    "width": 451.2,
    "height": 23.04,
    "page_number": 1,
    "page_width": 595.32,
    "page_height": 841.92,
    "text": "Document Title",
    "type": "Title"
  },
  {
    "left": 72.0,
    "top": 120.0,
    "width": 451.2,
    "height": 200.0,
    "page_number": 1,
    "page_width": 595.32,
    "page_height": 841.92,
    "text": "This is the main text content...",
    "type": "Text"
  }
]

Supported content types:

- Caption - Image and table captions

- Footnote - Footnote text

- Formula - Mathematical formulas

- List item - List items and bullet points

- Page footer - Footer content

- Page header - Header content

- Picture - Images and figures

- Section header - Section headings

- Table - Table content

- Text - Regular text paragraphs

- Title - Document and section titles
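A client might load this response into typed objects before working with it. The sketch below assumes the nine fields shown in the sample response; the `Segment` class and helper names are illustrative and not taken from the service's code. Unknown keys are ignored so the parser keeps working if the service returns extra fields.

```python
import json
from collections import defaultdict
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Segment:
    left: float
    top: float
    width: float
    height: float
    page_number: int
    page_width: float
    page_height: float
    text: str
    type: str

    @property
    def right(self) -> float:
        return self.left + self.width

    @property
    def bottom(self) -> float:
        return self.top + self.height


def parse_segments(payload: str) -> list[Segment]:
    """Build Segment objects from the JSON list, dropping keys Segment does not know."""
    known = {f.name for f in fields(Segment)}
    return [
        Segment(**{key: value for key, value in item.items() if key in known})
        for item in json.loads(payload)
    ]


def text_by_page(segments, wanted_types=("Title", "Section header", "Text")) -> dict[int, list[str]]:
    """Collect the text of the wanted segment types per page, keeping response order."""
    pages = defaultdict(list)
    for segment in segments:
        if segment.type in wanted_types:
            pages[segment.page_number].append(segment.text)
    return dict(pages)
```

Because the service returns segments in reading order, `text_by_page` preserves that order within each page.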

This project follows Clean Architecture principles, ensuring separation of concerns, testability, and maintainability. The codebase is organized into distinct layers:

src/
├── domain/                   # Enterprise Business Rules
│   ├── PdfImages.py          # PDF image handling domain logic
│   ├── PdfSegment.py         # PDF segment entity
│   ├── Prediction.py         # ML prediction entity
│   └── SegmentBox.py         # Core segment box entity
├── use_cases/                # Application Business Rules
│   ├── pdf_analysis/         # PDF analysis use case
│   ├── text_extraction/      # Text extraction use case
│   ├── toc_extraction/       # Table of contents extraction
│   ├── visualization/        # PDF visualization use case
│   ├── ocr/                  # OCR processing use case
│   ├── markdown_conversion/  # Markdown conversion use case
│   └── html_conversion/      # HTML conversion use case
├── adapters/                 # Interface Adapters
│   ├── infrastructure/       # External service adapters
│   ├── ml/                   # Machine learning model adapters
│   ├── storage/              # File storage adapters
│   └── web/                  # Web framework adapters
├── ports/                    # Interface definitions
│   ├── services/             # Service interfaces
│   └── repositories/         # Repository interfaces
└── drivers/                  # Frameworks & Drivers
    └── web/                  # FastAPI application setup

- Domain Layer: Contains core business entities and rules independent of external concerns

- Use Cases Layer: Orchestrates domain entities to fulfill specific application requirements

- Adapters Layer: Implements interfaces defined by inner layers and adapts external frameworks

- Drivers Layer: Contains frameworks, databases, and external agency configurations

- 🔄 Dependency Inversion: High-level modules don't depend on low-level modules

- 🧪 Testability: Easy to unit test business logic in isolation

- 🔧 Maintainability: Changes to external frameworks don't affect business rules

- 📈 Scalability: Easy to add new features without modifying existing code
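The dependency rule described above can be illustrated with a toy example. Every name here (`Box`, `LayoutModel`, `ExtractTitles`, `FakeLayoutModel`) is hypothetical and far simpler than the real classes in src/; the point is only that the use case depends on a port, so an adapter can be swapped without touching business logic.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Box:
    """Domain entity: a classified piece of a page (simplified, hypothetical)."""

    page_number: int
    type: str
    text: str


class LayoutModel(Protocol):
    """Port: what the use case needs from any layout model."""

    def predict(self, pdf_bytes: bytes) -> list[Box]: ...


class ExtractTitles:
    """Use case: depends only on the port, never on a concrete model."""

    def __init__(self, model: LayoutModel) -> None:
        self._model = model

    def execute(self, pdf_bytes: bytes) -> list[str]:
        return [box.text for box in self._model.predict(pdf_bytes) if box.type == "Title"]


class FakeLayoutModel:
    """Adapter stand-in: returns canned boxes, useful for unit tests."""

    def predict(self, pdf_bytes: bytes) -> list[Box]:
        return [Box(1, "Title", "Document Title"), Box(1, "Text", "Body text")]
```

In this sketch `ExtractTitles(FakeLayoutModel()).execute(b"")` runs the business rule without loading any ML model, which is the testability benefit listed above.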

The service offers two complementary model approaches, each optimized for different use cases:

VGT (Vision Grid Transformer) overview: A state-of-the-art visual model developed by Alibaba Research Group that "sees" the entire page layout.

Key Features:

- 🎯 High Accuracy: Best-in-class performance on document layout analysis

- 👁️ Visual Understanding: Analyzes the entire page context including spatial relationships

- 📊 Trained on DocLayNet: Uses the comprehensive DocLayNet dataset

- 🔬 Research-Backed: Based on Advanced Literate Machinery

Resource Requirements:

- GPU: 5GB+ VRAM (recommended)

- CPU: Falls back automatically if GPU unavailable

- Processing Speed: ~1.75 seconds/page (GPU [GTX 1070]) or ~13.5 seconds/page (CPU [i7-8700])

LightGBM overview: A lightweight ensemble of two specialized models that use XML-based features from Poppler.

Key Features:

- ⚡ High Speed: ~0.42 seconds per page on CPU (i7-8700)

- 💾 Low Resource Usage: CPU-only, minimal memory footprint

- 🔄 Dual Model Approach:

  - Token Type Classifier: Identifies content types (title, text, table, etc.)

  - Segmentation Model: Determines proper content boundaries

- 📄 XML-Based: Uses Poppler's PDF-to-XML conversion for feature extraction

Trade-offs:

- Slightly lower accuracy compared to VGT

- No visual context understanding

- Excellent for batch processing and resource-constrained environments

Both models integrate seamlessly with OCR capabilities:

- Engine: Tesseract OCR

- Processing: ocrmypdf

- Languages: 150+ supported languages

- Output: Searchable PDFs with preserved layout

| Use Case | Recommended Model | Reason |

|---|---|---|

| High accuracy requirements | VGT | Superior visual understanding |

| Batch processing | LightGBM | Faster processing, lower resources |

| GPU available | VGT | Leverages GPU acceleration |

| CPU-only environment | LightGBM | Optimized for CPU processing |

| Real-time applications | LightGBM | Consistent fast response times |

| Research/analysis | VGT | Best accuracy for detailed analysis |
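The selection table can be encoded directly so that client code sets the `fast` form parameter consistently. This is a sketch: the dictionary keys are made-up labels for the table rows, and `fast_flag_for` is an illustrative helper.

```python
# Rows of the table above; keys are illustrative labels, values come from the table.
RECOMMENDED_MODEL = {
    "high_accuracy": "VGT",
    "batch_processing": "LightGBM",
    "gpu_available": "VGT",
    "cpu_only": "LightGBM",
    "real_time": "LightGBM",
    "research": "VGT",
}


def fast_flag_for(use_case: str) -> str:
    """Return the value for the `fast` form field: 'true' selects LightGBM, 'false' VGT."""
    model = RECOMMENDED_MODEL[use_case]
    return "true" if model == "LightGBM" else "false"
```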

Both model types are trained on the comprehensive DocLayNet dataset, a large-scale document layout analysis dataset containing over 80,000 document pages.

The models can identify and classify 11 distinct content types:

| ID | Category | Description |

|---|---|---|

| 1 | Caption | Image and table captions |

| 2 | Footnote | Footnote references and text |

| 3 | Formula | Mathematical equations and formulas |

| 4 | List item | Bulleted and numbered list items |

| 5 | Page footer | Footer content and page numbers |

| 6 | Page header | Header content and titles |

| 7 | Picture | Images, figures, and graphics |

| 8 | Section header | Section and subsection headings |

| 9 | Table | Tabular data and structures |

| 10 | Text | Regular paragraph text |

| 11 | Title | Document and chapter titles |
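The category table maps naturally to a lookup that a client can use to validate the `types` parameter before calling /text. This is a sketch under assumptions: the lowercase, comma-separated form follows the `types=title,text,table` example in this README, and how the service expects multi-word names such as "Section header" to be written is not specified here.

```python
CATEGORIES = {
    1: "Caption",
    2: "Footnote",
    3: "Formula",
    4: "List item",
    5: "Page footer",
    6: "Page header",
    7: "Picture",
    8: "Section header",
    9: "Table",
    10: "Text",
    11: "Title",
}


def build_types_parameter(names: list[str]) -> str:
    """Validate names case-insensitively and join them for the `types` form field."""
    known = {name.lower() for name in CATEGORIES.values()}
    cleaned = [name.strip().lower() for name in names]
    unknown = [name for name in cleaned if name not in known]
    if unknown:
        raise ValueError(f"unknown content types: {unknown}")
    return ",".join(cleaned)
```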

- Domain Coverage: Academic papers, technical documents, reports

- Language: Primarily English with multilingual support

- Quality: High-quality annotations with bounding boxes and labels

- Diversity: Various document layouts, fonts, and formatting styles

For detailed information about the dataset, visit the DocLayNet repository.

- Clone the repository:

git clone https://github.com/huridocs/pdf-document-layout-analysis.git
cd pdf-document-layout-analysis

- Create virtual environment:

make install_venv

- Activate environment:

make activate
# or manually: source .venv/bin/activate

- Install dependencies:

make install

Format code:

make formatter

Check formatting:

make check_format

Run tests:

make test

Integration tests:

# Tests are located in src/tests/integration/
python -m pytest src/tests/integration/test_end_to_end.py

Build and start (detached mode):

# With GPU
make start_detached_gpu

# Without GPU
make start_detached

Clean up Docker resources:

# Remove containers
make remove_docker_containers

# Remove images
make remove_docker_images

Project structure:

pdf-document-layout-analysis/
├── src/                   # Source code
│   ├── domain/            # Business entities
│   ├── use_cases/         # Application logic
│   ├── adapters/          # External integrations
│   ├── ports/             # Interface definitions
│   └── drivers/           # Framework configurations
├── test_pdfs/             # Test PDF files
├── models/                # ML model storage
├── docker-compose.yml     # Docker configuration
├── Dockerfile             # Container definition
├── Makefile               # Development commands
├── pyproject.toml         # Python project configuration
└── requirements.txt       # Python dependencies

Key configuration options:

# OCR configuration
OCR_SOURCE=/tmp/ocr_source

# Model paths (auto-configured)
MODELS_PATH=./models

# Service configuration
HOST=0.0.0.0
PORT=5060

- Domain Logic: Add entities in src/domain/

- Use Cases: Implement business logic in src/use_cases/

- Adapters: Create integrations in src/adapters/

- Ports: Define interfaces in src/ports/

- Controllers: Add endpoints in src/adapters/web/
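For local tooling that needs the same settings, the key configuration options listed earlier in this section (OCR_SOURCE, MODELS_PATH, HOST, PORT) can be read from the environment with the documented values as defaults. How the service itself loads them is not shown in this README, so treat the following as an illustrative sketch with hypothetical names.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceConfig:
    ocr_source: str
    models_path: str
    host: str
    port: int


def load_config(environ=None) -> ServiceConfig:
    """Read the documented variables, falling back to the values shown in this README."""
    env = os.environ if environ is None else environ
    return ServiceConfig(
        ocr_source=env.get("OCR_SOURCE", "/tmp/ocr_source"),
        models_path=env.get("MODELS_PATH", "./models"),
        host=env.get("HOST", "0.0.0.0"),
        port=int(env.get("PORT", "5060")),
    )
```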

View logs:

docker compose logs -f

Access container:

docker exec -it pdf-document-layout-analysis /bin/bash

Free up disk space:

make free_up_space

The service returns SegmentBox elements in a carefully determined reading order:

- Poppler Integration: Uses Poppler PDF-to-XML conversion to establish initial token reading order

- Segment Averaging: Calculates average reading order for multi-token segments

- Type-Based Sorting: Prioritizes content types:

  - Headers placed first

  - Main content in reading order

  - Footers and footnotes placed last

For segments without text (e.g., images):

- Processed after text-based sorting

- Positioned based on nearest text segment proximity

- Uses spatial distance as the primary criterion
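The ordering rules above can be sketched in a few lines. This is not the service's implementation: the `Seg` class is hypothetical, a single page is assumed, Euclidean distance between box centres stands in for "spatial distance", and placing a text-less segment directly after its nearest text segment is an assumption.

```python
from dataclasses import dataclass, field
from math import hypot
from statistics import mean


@dataclass
class Seg:
    type: str
    center: tuple[float, float]  # (x, y) of the box centre
    token_orders: list[int] = field(default_factory=list)  # empty for text-less segments


def type_rank(segment_type: str) -> int:
    """Headers first (0), main content next (1), footers and footnotes last (2)."""
    if segment_type == "Page header":
        return 0
    if segment_type in ("Page footer", "Footnote"):
        return 2
    return 1


def distance(a: Seg, b: Seg) -> float:
    return hypot(a.center[0] - b.center[0], a.center[1] - b.center[1])


def reading_order(segments: list[Seg]) -> list[Seg]:
    with_text = [s for s in segments if s.token_orders]
    without_text = [s for s in segments if not s.token_orders]
    # Sort by type priority, then by the average token order within the segment.
    ordered = sorted(with_text, key=lambda s: (type_rank(s.type), mean(s.token_orders)))
    for seg in without_text:
        text_positions = [i for i, s in enumerate(ordered) if s.token_orders]
        if not text_positions:
            ordered.append(seg)
            continue
        # Place the text-less segment right after its nearest text segment.
        nearest = min(text_positions, key=lambda i: distance(ordered[i], seg))
        ordered.insert(nearest + 1, seg)
    return ordered
```

In this sketch a picture whose centre lies closest to a body paragraph ends up immediately after that paragraph, while page headers and footers stay at the two ends of the list.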

- Formulas: Automatically extracted as LaTeX format in the text property

- Tables: Basic text extraction included by default

OCR tables and extract them in HTML format by setting ocr_tables=true:

curl -X POST -F 'file=@document.pdf' -F 'ocr_tables=true' http://localhost:5060

Extraction is powered by:

- Formulas: LaTeX-OCR

- Tables: RapidTable

VGT model performance on PubLayNet dataset:

| Metric | Overall | Text | Title | List | Table | Figure |

|---|---|---|---|---|---|---|

| F1 Score | 0.962 | 0.950 | 0.939 | 0.968 | 0.981 | 0.971 |

📊 Comparison: View comprehensive model comparisons at Papers With Code

Performance benchmarks on 15-page academic documents:

| Model | Hardware | Speed (sec/page) | Use Case |

|---|---|---|---|

| LightGBM | CPU (i7-8700 3.2GHz) | 0.42 | Fast processing |

| VGT | GPU (GTX 1070) | 1.75 | High accuracy |

| VGT | CPU (i7-8700 3.2GHz) | 13.5 | CPU fallback |

- GPU Available: Use VGT for best accuracy-speed balance

- CPU Only: Use LightGBM for optimal performance

- Batch Processing: LightGBM for consistent throughput

- High Accuracy: VGT with GPU for best results
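The per-page figures in the benchmark table give a rough way to budget processing time for a job. The sketch below simply multiplies page count by the measured seconds per page; real throughput will differ on other hardware and document types, and the function name is illustrative.

```python
# Seconds per page from the benchmark table (i7-8700 CPU, GTX 1070 GPU).
SECONDS_PER_PAGE = {
    ("LightGBM", "cpu"): 0.42,
    ("VGT", "gpu"): 1.75,
    ("VGT", "cpu"): 13.5,
}


def estimate_seconds(pages: int, model: str, hardware: str) -> float:
    """Linear estimate: pages multiplied by the benchmarked seconds per page."""
    return pages * SECONDS_PER_PAGE[(model, hardware)]
```

For a 100-page document this gives roughly 42 seconds with LightGBM on CPU, 175 seconds with VGT on GPU, and 1350 seconds with VGT on CPU.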

The service uses Tesseract OCR with support for 150+ languages. The Docker image includes only common languages to minimize image size.

Open a root shell in the running container:

docker exec -it --user root pdf-document-layout-analysis /bin/bash

Install a specific language:

apt-get update
apt-get install tesseract-ocr-[LANGCODE]

For example:

# Korean
apt-get install tesseract-ocr-kor

# German
apt-get install tesseract-ocr-deu

# French
apt-get install tesseract-ocr-fra

# Spanish
apt-get install tesseract-ocr-spa

# Chinese Simplified
apt-get install tesseract-ocr-chi-sim

# Arabic
apt-get install tesseract-ocr-ara

# Japanese
apt-get install tesseract-ocr-jpn

Check which languages the service reports as supported:

curl http://localhost:5060/info

Find Tesseract language codes in the ISO to Tesseract mapping.

Common language codes:

- eng - English

- fra - French

- deu - German

- spa - Spanish

- ita - Italian

- por - Portuguese

- rus - Russian

- chi-sim - Chinese Simplified

- chi-tra - Chinese Traditional

- jpn - Japanese

- kor - Korean

- ara - Arabic

- hin - Hindi
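When scripting the installation, a small lookup can turn a two-letter ISO 639-1 code into the matching apt package name. The mapping below is a partial, hand-written subset based on the codes listed above, not the project's own mapping, and codes it does not know are passed through unchanged on the assumption that they are already Tesseract codes.

```python
# Partial ISO 639-1 to Tesseract code mapping (illustrative subset).
ISO_TO_TESSERACT = {
    "en": "eng",
    "fr": "fra",
    "de": "deu",
    "es": "spa",
    "it": "ita",
    "pt": "por",
    "ru": "rus",
    "ja": "jpn",
    "ko": "kor",
    "ar": "ara",
    "hi": "hin",
}


def apt_package(code: str) -> str:
    """Return the apt package name following the tesseract-ocr-[LANGCODE] pattern."""
    tesseract_code = ISO_TO_TESSERACT.get(code, code)
    return f"tesseract-ocr-{tesseract_code}"
```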

# OCR with specific language
curl -X POST \
  -F 'file=@document.pdf' \
  -F 'language=fr' \
  http://localhost:5060/ocr \
  --output french_ocr.pdf

Explore our ecosystem of PDF processing services built on this foundation:

🔍 Purpose: Intelligent extraction of structured table of contents from PDF documents

Key Features:

- Leverages layout analysis for accurate TOC identification

- Hierarchical structure recognition

- Multiple output formats supported

- Integration-ready API

📝 Purpose: Advanced text extraction with layout awareness

Key Features:

- Content-type aware extraction

- Preserves document structure

- Reading order optimization

- Clean text output with metadata

These services work seamlessly together:

- Shared Analysis: Reuse layout analysis results across services

- Consistent Output: Standardized JSON format for easy integration

- Scalable Architecture: Deploy services independently or together

- Docker Ready: All services containerized for easy deployment

We welcome contributions to improve the PDF Document Layout Analysis service!

- Fork the Repository

git clone https://github.com/your-username/pdf-document-layout-analysis.git

- Create a Feature Branch

git checkout -b feature/your-feature-name

- Set Up Development Environment

make install_venv
make install

- Make Your Changes

  - Follow the Clean Architecture principles

  - Add tests for new features

  - Update documentation as needed

- Run Tests and Quality Checks

make test
make check_format

- Submit a Pull Request

  - Provide a clear description of changes

  - Include test results

  - Reference any related issues

- Python: Follow PEP 8 with 125-character line length

- Architecture: Maintain Clean Architecture boundaries

- Testing: Include unit tests for new functionality

- Documentation: Update README and docstrings

- 🐛 Bug Fixes: Report and fix issues

- ✨ New Features: Add new endpoints or functionality

- 📚 Documentation: Improve guides and examples

- 🧪 Testing: Expand test coverage

- 🚀 Performance: Optimize processing speed

- 🌐 Internationalization: Add language support

- Issue First: Create or comment on relevant issues

- Small PRs: Keep pull requests focused and manageable

- Clean Commits: Use descriptive commit messages

- Documentation: Update relevant documentation

- Testing: Ensure all tests pass

- 📚 Documentation: Check this README and inline docs

- 💬 Issues: Search existing issues or create new ones

- 🔍 Code: Explore the codebase structure

- 📧 Contact: Reach out to maintainers for guidance

This project is licensed under the terms specified in the LICENSE file.